Build Your Voice Assistant With OpenAI's Latest Innovations

Table of Contents

Understanding OpenAI's APIs for Voice Assistant Development

Building a robust voice assistant requires a suite of powerful APIs. OpenAI provides several crucial components:

- Whisper: This powerful speech-to-text API converts spoken language into written text with remarkable accuracy, forming the foundation of your voice assistant's ability to understand user input. It handles various accents and noise levels effectively.

- GPT Models (e.g., GPT-3.5-turbo, GPT-4): These large language models are the brain of your voice assistant, responsible for natural language understanding (NLU) and natural language generation (NLG). They interpret user requests, formulate appropriate responses, and manage the conversational flow.

Strengths and Weaknesses:

| API | Strengths | Weaknesses | Cost |

|---|---|---|---|

| Whisper | High accuracy, multilingual support, robust noise handling | Can be computationally expensive for long audio clips | Usage-based |

| GPT Models | Powerful NLU/NLG capabilities, context understanding, adaptable responses | Cost varies by model; context window limitations exist | Usage-based |

<br>

Choosing the Right OpenAI Model for Your Voice Assistant

Selecting the appropriate GPT model is crucial for performance and cost-effectiveness. Consider these factors:

- Context Window Size: Larger context windows allow the model to remember more of the conversation history, leading to more coherent and relevant responses.

- Response Quality: More advanced models generally produce higher-quality, more nuanced responses.

- Cost: More powerful models typically have higher usage costs.

Here's a comparison to guide your choice:

- GPT-3.5-turbo: Ideal for simple commands and basic conversational tasks. Cost-effective for many applications.

- GPT-4: Best suited for complex conversations, nuanced understanding, and sophisticated responses. Higher cost but superior performance.

Designing the Architecture of Your Voice Assistant

A typical voice assistant architecture consists of several key components:

- Speech Recognition (Whisper): Converts audio input to text.

- Natural Language Understanding (NLU) (GPT Model): Interprets the meaning of the transcribed text.

- Dialogue Management: Manages the conversation flow, keeping track of context and user history.

- Natural Language Generation (NLG) (GPT Model): Generates the text response.

- Text-to-Speech (TTS): Converts the text response back into audio output (often using a third-party library like Google Cloud Text-to-Speech).

You'll likely integrate third-party libraries and services for components like TTS and cloud storage.

Implementing Speech Recognition with OpenAI Whisper

Here's a Python code example demonstrating Whisper's transcription capabilities:

import openai

openai.api_key = "YOUR_API_KEY"

audio_file = open("audio.mp3", "rb")

transcript = openai.Audio.transcribe("whisper-1", audio_file)

print(transcript["text"])

Remember to replace "YOUR_API_KEY" with your actual OpenAI API key. Error handling and techniques for real-time transcription will add robustness to your application.

Building the Conversational AI with OpenAI's GPT Models

GPT models power the conversational aspect of your voice assistant. You'll design prompts that guide the model to understand user requests and generate appropriate replies.

Creating a Natural and Engaging Conversational Flow

To enhance the user experience, consider these techniques:

- Context Management: Maintain conversational context by passing previous turns to the GPT model.

- Personality Design: Define the voice assistant's personality through prompt engineering.

- Handling Interruptions and Ambiguity: Design prompts to handle incomplete or unclear user input gracefully.

- User Preferences and History: Incorporate user preferences and past interactions to personalize the experience.

prompt = f"""User: {user_input}

Assistant: """

response = openai.Completion.create(

engine="text-davinci-003", #or your chosen GPT model

prompt=prompt,

max_tokens=150,

n=1,

stop=None,

temperature=0.7,

)

assistant_response = response.choices[0].text.strip()

print(assistant_response)

Deploying and Testing Your Voice Assistant

Deployment options range from cloud services (AWS, Google Cloud, Azure) to local deployments on a Raspberry Pi.

Choosing a Deployment Platform

| Platform | Pros | Cons |

|---|---|---|

| Cloud Services | Scalability, reliability, easy maintenance | Cost, dependency on internet connectivity |

| Raspberry Pi | Low cost, local processing | Limited resources, requires more technical expertise |

Testing involves evaluating accuracy, response time, and overall user experience. Iterative development based on user feedback is essential for continuous improvement.

Conclusion: Building Your Dream Voice Assistant with OpenAI

Building a voice assistant with OpenAI's tools involves leveraging Whisper for speech-to-text, GPT models for NLU/NLG, and carefully designing the overall architecture. By following the steps outlined above and experimenting with different models and architectures, you can create a truly engaging and helpful voice assistant. Start building your own cutting-edge voice assistant today with OpenAI's powerful innovations! Explore the APIs and unleash the potential of AI-powered voice interaction.

(Replace with relevant tutorial link)

Featured Posts

-

Putins Victory Day Ceasefire A Temporary Truce

May 10, 2025

Putins Victory Day Ceasefire A Temporary Truce

May 10, 2025 -

Todays Stock Market China Tariffs And Uk Trade Deal Developments

May 10, 2025

Todays Stock Market China Tariffs And Uk Trade Deal Developments

May 10, 2025 -

Nyt Spelling Bee April 9 2025 Strands Full Solution And Analysis

May 10, 2025

Nyt Spelling Bee April 9 2025 Strands Full Solution And Analysis

May 10, 2025 -

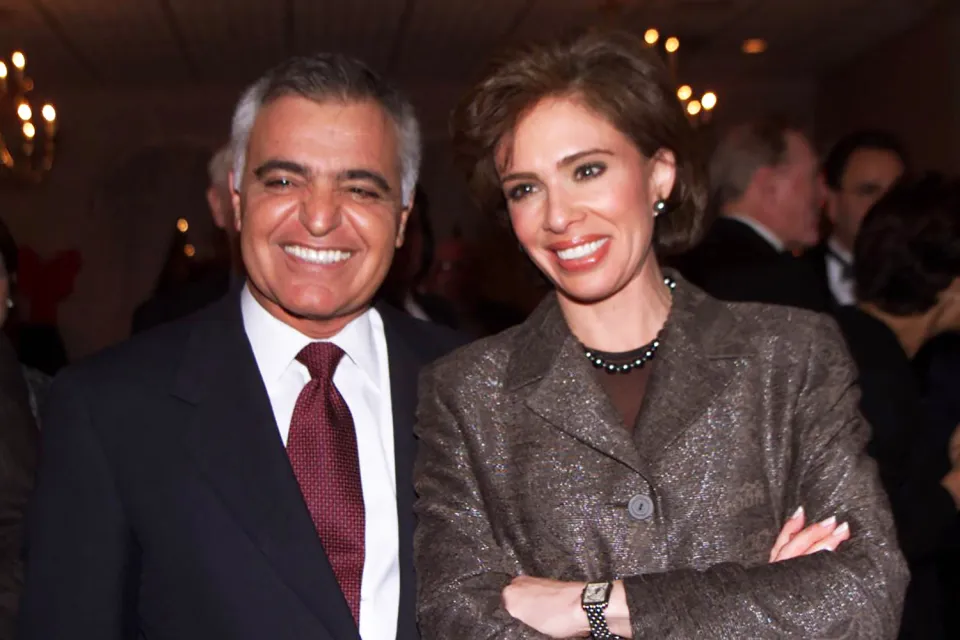

Jeanine Pirro A Behind The Scenes Look At The Fox News Personality

May 10, 2025

Jeanine Pirro A Behind The Scenes Look At The Fox News Personality

May 10, 2025 -

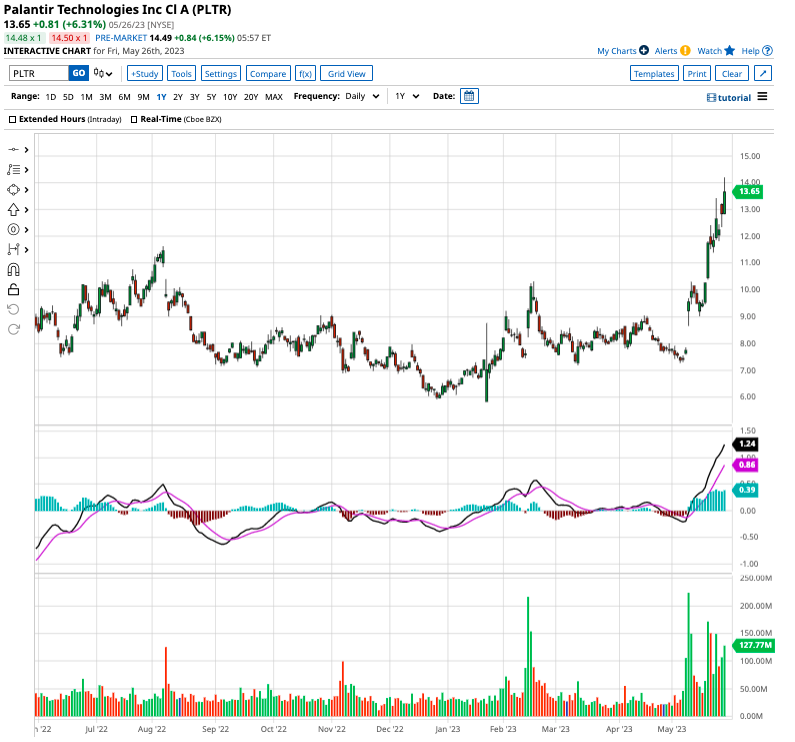

Is A 40 Increase In Palantir Stock Realistic By 2025

May 10, 2025

Is A 40 Increase In Palantir Stock Realistic By 2025

May 10, 2025