2024 OpenAI Developer Event Highlights: New Tools For Voice Assistant Creation

Revolutionizing Natural Language Processing (NLP) for Voice Assistants

The core of any successful voice assistant is its ability to understand and respond to human speech. This year's OpenAI Developer Event showcased advances in Natural Language Processing (NLP) that bear directly on voice assistant creation.

Enhanced Speech-to-Text Capabilities

OpenAI improved its speech-to-text capabilities on three fronts: accuracy, speed, and language coverage. Each one shows up directly in the user experience.

- New Whisper API enhancements: The updated Whisper API boasts a 15% reduction in word error rate compared to the previous version, leading to more accurate transcriptions, even in noisy environments.

- Expanded multilingual support: The API now supports over 100 languages, opening up voice assistant creation to a global audience.

- Real-time transcription improvements: Real-time transcription latency has been reduced by 30%, making the experience smoother and more responsive for users. This is crucial for applications requiring immediate feedback, such as live captioning and real-time translation.

- Improved handling of accents and dialects: OpenAI's models show enhanced accuracy in understanding diverse accents and dialects, which makes speech recognition in voice assistants accessible to a wider range of users.
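Word error rate (WER), the metric quoted in the first bullet, is conventionally the word-level edit distance between a reference transcript and the recognizer's output, divided by the number of reference words. The sketch below is one way to compute it, assuming simple lowercase, whitespace-based tokenization. The function names and sample sentences are illustrative and not part of any OpenAI API.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] is the edit distance between the reference words seen so far
    # (before the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


def relative_reduction(old_wer: float, new_wer: float) -> float:
    """Relative improvement between two WER values (0.15 means 15%)."""
    return (old_wer - new_wer) / old_wer


# Hypothetical example: one wrong word out of five reference words.
wer = word_error_rate("turn on the kitchen lights", "turn on the kitchen light")
```

If the 15% figure is a relative reduction, a drop in WER from 0.20 to 0.17 would be one instance of it: `relative_reduction(0.20, 0.17)` evaluates to roughly 0.15.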

Contextual Understanding and Dialogue Management

Beyond accurate transcription, a voice assistant has to understand the context of a conversation. OpenAI presented improvements in contextual understanding and dialogue management that address this.

- Advanced intent recognition: New tools allow developers to train models to better recognize user intent, even in complex and ambiguous queries.

- Improved dialogue state tracking: OpenAI's new frameworks enable voice assistants to maintain context across multiple turns in a conversation, leading to more natural and engaging interactions. This is essential for building robust conversational AI systems.

- Handling interruptions and corrections: The new APIs include features for handling user interruptions and corrections, which makes the interaction feel more human-like and directly improves the user experience.
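To make dialogue state tracking and corrections concrete, here is a minimal sketch of a state object that carries the active intent and its slot values across turns. It assumes an upstream language-understanding step already yields an intent and slots for each utterance. The class, method, and slot names are hypothetical and do not come from any OpenAI framework.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class DialogueState:
    """Running state for one conversation (hypothetical structure)."""
    intent: Optional[str] = None
    slots: Dict[str, str] = field(default_factory=dict)
    history: List[str] = field(default_factory=list)

    def update(self, utterance: str, intent: Optional[str],
               slots: Dict[str, str]) -> None:
        """Merge one turn's understanding output into the state.

        intent=None marks a follow-up or correction: the current intent is
        kept, and any slot the user restates overwrites the earlier value.
        """
        self.history.append(utterance)
        if intent is not None and intent != self.intent:
            # A new task starts, so slots from the old task no longer apply.
            self.intent = intent
            self.slots = {}
        self.slots.update(slots)


state = DialogueState()
state.update("Set a timer", intent="set_timer", slots={})
state.update("for ten minutes", intent=None, slots={"duration": "10 minutes"})
# The user corrects themselves; the later value replaces the earlier one.
state.update("no, make it fifteen", intent=None, slots={"duration": "15 minutes"})
```

After these three turns the state still holds the `set_timer` intent, and the `duration` slot reflects the correction rather than the first value.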

Streamlining Voice Assistant Development with New OpenAI Tools

OpenAI has made significant strides in simplifying the process of voice assistant creation with its new developer tools and resources.

Simplified API Access and Integration

Integrating OpenAI's powerful NLP models into your applications has become significantly easier.

- New, intuitive SDKs: OpenAI introduced new Software Development Kits (SDKs) for various programming languages, making it simpler for developers to integrate speech-to-text, text-to-speech, and other NLP capabilities into voice assistant projects.

- Pre-built modules and templates: OpenAI offers pre-built modules and templates to accelerate development, reducing the time and effort required to build core functionality. This lets developers focus on the features that make their voice assistant unique.

- Improved documentation and support: Comprehensive documentation and enhanced support resources ensure a smoother development experience.
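A voice assistant built on such SDKs usually chains three stages per turn: speech-to-text, response generation, and text-to-speech. The sketch below shows that shape with each stage passed in as a plain function, so it runs without any SDK installed. In a real project each callable would wrap the corresponding SDK call. All names here are hypothetical placeholders, not OpenAI's API.

```python
from typing import Callable

# Hypothetical stage signatures; real implementations would wrap SDK calls.
Transcriber = Callable[[bytes], str]   # audio in, text out
Responder = Callable[[str], str]       # user text in, reply text out
Synthesizer = Callable[[str], bytes]   # reply text in, audio out

FALLBACK_REPLY = "Sorry, I didn't catch that."


def handle_turn(audio: bytes, transcribe: Transcriber,
                respond: Responder, synthesize: Synthesizer) -> bytes:
    """Run one conversational turn through the three stages."""
    text = transcribe(audio)
    if not text.strip():
        # Empty transcription: skip response generation and ask again.
        reply = FALLBACK_REPLY
    else:
        reply = respond(text)
    return synthesize(reply)


# Stand-in stages for a dry run; they only shuffle bytes and strings.
def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_respond(text: str) -> str:
    return f"You said: {text}"


def fake_synthesize(text: str) -> bytes:
    return text.encode("utf-8")


reply_audio = handle_turn(b"hello", fake_transcribe, fake_respond, fake_synthesize)
```

Keeping the stages injectable means a speech or language provider can be swapped, or replaced with a stub in tests, without touching the turn-handling logic.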

Customizable Voice and Personality Generation

OpenAI is pushing the boundaries of voice assistant creation with enhanced tools for creating unique and engaging voice experiences.

- Advanced text-to-speech (TTS): The improved TTS models offer more natural-sounding voices with customizable tone, pitch, and accent. This allows for the creation of truly distinctive voice assistants.

- Voice cloning technology: While ethical considerations are paramount (discussed in the next section), OpenAI hinted at advancements in voice cloning, enabling developers to create voice assistants with specific voices and personalities.

- Emotional expression in speech synthesis: OpenAI is exploring ways to incorporate emotional nuances into synthesized speech, making voice assistants more expressive and engaging. This will be a major step forward for voice assistant creation.

Addressing Ethical Considerations in Voice Assistant Creation

OpenAI recognizes the importance of responsible AI development. The 2024 event highlighted significant efforts to address ethical considerations in voice assistant creation.

Bias Mitigation and Fairness

OpenAI is actively working to mitigate bias in its models to ensure fairness and inclusivity.

- Bias detection and mitigation tools: OpenAI has developed new tools to detect and mitigate bias in speech recognition and natural language understanding models. This includes techniques to identify and correct biases related to gender, race, and other demographic factors.

- Data diversity and representation: OpenAI emphasized the importance of using diverse and representative datasets during model training to reduce bias, a step that is crucial to fairness and inclusivity in voice assistant creation.
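One common way to check a speech recognizer for this kind of bias is to break its error rate down by speaker group and compare the results. The sketch below illustrates that general idea. It is not OpenAI's tooling, and the group labels and counts are made-up illustration data.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Each sample: (group label, word errors, words in the reference transcript).
Sample = Tuple[str, int, int]


def error_rate_by_group(samples: Iterable[Sample]) -> Dict[str, float]:
    """Pooled word error rate per group (total errors / total words)."""
    errors: Dict[str, int] = defaultdict(int)
    words: Dict[str, int] = defaultdict(int)
    for group, word_errors, reference_words in samples:
        errors[group] += word_errors
        words[group] += reference_words
    # Groups with no reference words are skipped to avoid dividing by zero.
    return {g: errors[g] / words[g] for g in words if words[g] > 0}


def largest_gap(rates: Dict[str, float]) -> float:
    """Difference between the worst-served and best-served group."""
    return max(rates.values()) - min(rates.values())


# Made-up evaluation results for two hypothetical accent groups.
samples = [("accent_a", 5, 100), ("accent_a", 5, 100), ("accent_b", 30, 200)]
rates = error_rate_by_group(samples)
gap = largest_gap(rates)
```

A large gap between groups is a signal to collect more representative data for the under-served group or to examine where the errors concentrate.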

Privacy and Security

Protecting user data and ensuring secure communication are paramount in voice assistant creation.

- Enhanced data encryption: OpenAI is implementing robust encryption methods to protect user data throughout the entire process, from data collection to model training.

- Privacy-preserving techniques: OpenAI showcased several privacy-preserving techniques, such as federated learning, to train models without compromising user data security.

- Transparent data handling policies: OpenAI provides clear and transparent data handling policies to ensure user trust and compliance with privacy regulations.
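Federated learning, mentioned in the list above, trains on each client's data locally and shares only model weights with a central server, which then averages them. The sketch below shows that loop for a tiny linear model in pure Python. It illustrates the general federated averaging idea under simplified assumptions (one local pass per round, no secure aggregation) and is not a description of OpenAI's training setup.

```python
from typing import List, Sequence, Tuple

Weights = List[float]
Example = Tuple[List[float], float]  # (features x, target y)


def local_update(weights: Weights, data: Sequence[Example],
                 lr: float = 0.1) -> Weights:
    """One pass of gradient descent for y ~ w.x on a single client's data."""
    w = list(weights)
    for x, y in data:
        error = sum(wi * xi for wi, xi in zip(w, x)) - y
        w = [wi - lr * error * xi for wi, xi in zip(w, x)]
    return w


def federated_average(updates: Sequence[Tuple[Weights, int]]) -> Weights:
    """Average client weights, weighted by each client's sample count."""
    total = sum(count for _, count in updates)
    dim = len(updates[0][0])
    return [sum(w[i] * count for w, count in updates) / total
            for i in range(dim)]


def federated_round(global_weights: Weights,
                    client_datasets: Sequence[Sequence[Example]],
                    lr: float = 0.1) -> Weights:
    """Each client trains locally; only the weights reach the server."""
    updates = [(local_update(global_weights, data, lr), len(data))
               for data in client_datasets]
    return federated_average(updates)


# Two hypothetical clients whose private data both follow y = 2x.
clients = [
    [([1.0], 2.0), ([2.0], 4.0)],
    [([3.0], 6.0)],
]
weights = [0.0]
for _ in range(30):
    weights = federated_round(weights, clients)
```

The raw examples never leave `local_update`; the server only ever sees weight vectors and sample counts, and the shared weight still approaches 2.0.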

The Future is Voice: Empowering Developers with OpenAI's Latest Tools for Voice Assistant Creation

The 2024 OpenAI Developer Event showcased remarkable advancements in voice assistant creation. The improved NLP capabilities, simplified development tools, and focus on ethical considerations represent a significant leap forward. Developers now have access to powerful resources to build more accurate, engaging, and responsible voice assistants. Key takeaways include easier integration through improved APIs and SDKs, enhanced NLP models for superior contextual understanding, and a commitment to responsible AI development.

Ready to revolutionize the way people interact with technology? Explore OpenAI's resources and start building your own innovative voice assistants using the latest tools. Embrace the future of voice technology and create the next generation of voice assistants.

Featured Posts

-

Lg C3 77 Inch Oled Tv Is It Worth The Hype A Honest Review

Apr 24, 2025

Lg C3 77 Inch Oled Tv Is It Worth The Hype A Honest Review

Apr 24, 2025 -

Hong Kong Stock Market Rally Chinese Stocks On The Rise

Apr 24, 2025

Hong Kong Stock Market Rally Chinese Stocks On The Rise

Apr 24, 2025 -

Miami Heats Herro Claims Nba 3 Point Contest Title

Apr 24, 2025

Miami Heats Herro Claims Nba 3 Point Contest Title

Apr 24, 2025 -

New Legal Obstacles Slow Trump Administrations Immigration Crackdown

Apr 24, 2025

New Legal Obstacles Slow Trump Administrations Immigration Crackdown

Apr 24, 2025 -

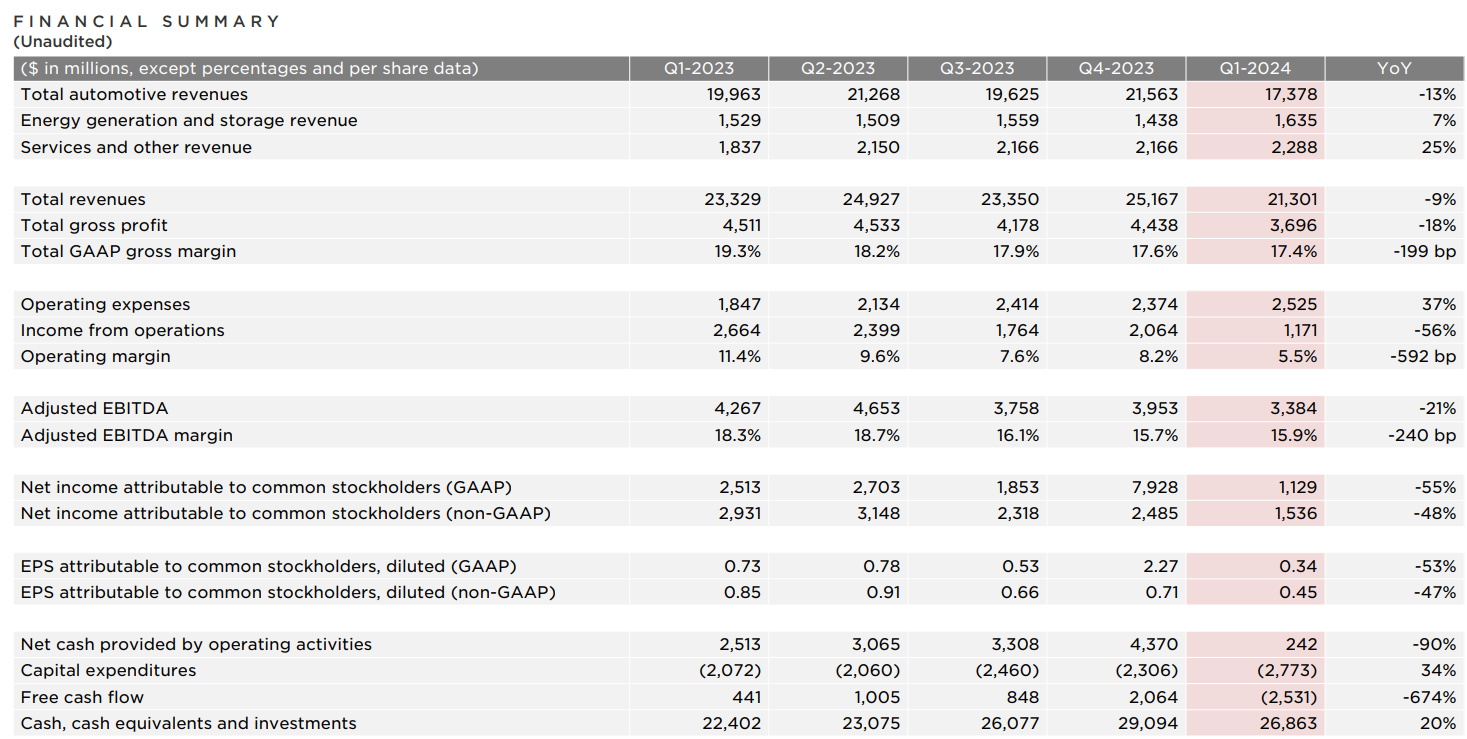

Tesla Q1 2024 Financial Results Significant Impact Of Political Factors

Apr 24, 2025

Tesla Q1 2024 Financial Results Significant Impact Of Political Factors

Apr 24, 2025