The Reality of AI Learning: Navigating the Challenges for Responsible Use

What is AI Learning?

AI learning, which encompasses machine learning, deep learning, and related subfields, refers to the ability of computer systems to learn from data instead of being explicitly programmed for each task. These systems analyze vast datasets, identify patterns, and make predictions or decisions based on those patterns. The potential benefits are immense, ranging from improved medical diagnoses and personalized education to more efficient transportation systems and faster scientific discovery. However, the increasing sophistication of AI systems also raises serious concerns about bias, ethics, security, and transparency. Understanding and addressing these concerns is crucial for harnessing the full potential of AI learning while mitigating its risks.
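
To make "learning from data" concrete, here is a minimal sketch in Python. Rather than hard-coding the rule y = 2x + 1, the program estimates it from example pairs using ordinary least squares. The data and the function names `fit_line` and `predict` are illustrative assumptions; real systems fit far more complex models to far larger datasets, but the principle of estimating parameters from examples is the same.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the line passes through the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the learned parameters to a new input."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical training data generated by y = 2x + 1 (noise-free for clarity).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]

model = fit_line(xs, ys)
print(model)                 # (2.0, 1.0)
print(predict(model, 10.0))  # 21.0
```

The program never contains the rule itself; it recovers the slope and intercept from the examples and then applies them to an input it has not seen.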

Data Bias and its Impact on AI Learning Outcomes

One of the most significant challenges in AI learning is data bias. AI systems learn from the data they are trained on, and if this data reflects existing societal biases, the resulting AI system will likely perpetuate and even amplify those biases. This can lead to unfair or discriminatory outcomes in various applications.

Real-world examples: Facial recognition systems have shown higher error rates for people with darker skin tones, and some loan-approval algorithms have been found to discriminate against certain demographic groups. These biases often stem from skewed or unrepresentative datasets used to train the systems.

Mitigation strategies: Addressing data bias requires a multi-pronged approach:

- Careful data curation and preprocessing: This involves identifying and correcting biases in the training data, ensuring diverse and representative datasets.

- Algorithmic fairness techniques: Employing algorithms designed to mitigate bias and promote fairness in decision-making.

- Regular auditing and bias detection: Continuously monitoring AI systems for bias and taking corrective action as needed, supported by dedicated bias detection tools and fairness metrics.
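
A basic bias audit compares a model's behavior across groups. The minimal sketch below, assuming binary decisions and a single group attribute, computes each group's selection rate (share of positive decisions) and error rate, plus the demographic parity difference (the largest gap in selection rates). The loan data, group labels, and the 0.1 tolerance are hypothetical; a real audit would use more data, several metrics, and context-specific thresholds.

```python
from collections import defaultdict


def group_metrics(y_true, y_pred, groups):
    """Per-group selection rate (share predicted positive) and error rate."""
    counts = defaultdict(lambda: {"n": 0, "selected": 0, "errors": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["selected"] += pred  # pred is 0 or 1
        c["errors"] += int(truth != pred)
    return {
        group: {
            "selection_rate": c["selected"] / c["n"],
            "error_rate": c["errors"] / c["n"],
        }
        for group, c in counts.items()
    }


def demographic_parity_difference(metrics):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = [m["selection_rate"] for m in metrics.values()]
    return max(rates) - min(rates)


# Hypothetical loan decisions: 1 = approve, 0 = deny.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

metrics = group_metrics(y_true, y_pred, groups)
gap = demographic_parity_difference(metrics)
print(metrics)
print(gap)  # 0.75

MAX_GAP = 0.1  # illustrative tolerance, not a standard value
if gap > MAX_GAP:
    print("Flag for review: selection rates differ substantially across groups")
```

In this toy data, group B is never approved and every creditworthy applicant in it is denied, so both its selection rate and its error rate diverge sharply from group A's. That is the kind of disparity an audit is meant to surface.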

The Ethical Implications of AI Learning

The ethical implications of AI learning are profound and far-reaching. The deployment of AI systems raises complex ethical dilemmas concerning:

- Privacy concerns: AI systems often rely on vast amounts of personal data, raising concerns about privacy violations and data security.

- Job displacement: Automation driven by AI could lead to significant job losses in various sectors, requiring proactive measures to address workforce transition.

- Autonomous weapons systems: The development of lethal autonomous weapons systems raises serious ethical questions about accountability and the potential for unintended consequences.

Addressing these ethical concerns requires establishing clear ethical frameworks and guidelines for AI development and deployment, emphasizing:

- Transparency and explainability: Understanding how AI systems arrive at their decisions is critical for building trust and accountability.

- Accountability and responsibility: Establishing clear lines of responsibility for the actions of AI systems.

- Human oversight and control: Ensuring that humans retain ultimate control over AI systems and their decisions.
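
One common way to keep a human in the loop is to let the model act on its own only when it is confident and to escalate everything else. The sketch below assumes a model that outputs a confidence score between 0 and 1. The `route` function, the `Decision` record, the 0.9 threshold, and the `"human_review"` label are all hypothetical illustrations, not recommended values.

```python
from dataclasses import dataclass

# Hypothetical policy: predictions below this confidence go to a person.
REVIEW_THRESHOLD = 0.9


@dataclass(frozen=True)
class Decision:
    case_id: str
    proposed_label: str
    confidence: float
    decided_by: str  # "model" or "human_review" (illustrative values)


def route(case_id: str, label: str, confidence: float,
          threshold: float = REVIEW_THRESHOLD) -> Decision:
    """Auto-accept confident predictions; escalate the rest for human review.

    Recording who made each decision also supports accountability.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= threshold:
        return Decision(case_id, label, confidence, decided_by="model")
    return Decision(case_id, label, confidence, decided_by="human_review")


auto = route("case-001", "approve", 0.97)
escalated = route("case-002", "deny", 0.62)
print(auto.decided_by)       # model
print(escalated.decided_by)  # human_review
```

Model confidence scores are not always well calibrated, so a fixed threshold like this is only a coarse safeguard. In practice, it would need to be tuned and monitored.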

The Security Risks Associated with AI Learning

AI systems are not immune to security threats. Their vulnerabilities can be exploited by malicious actors, leading to serious consequences. Key risks include:

- Adversarial examples: Subtly manipulated inputs that can fool AI systems into making incorrect predictions.

- Data poisoning: Introducing malicious data into the training datasets to compromise the accuracy or reliability of AI systems.

- Model theft: Stealing or copying AI models to gain unauthorized access to sensitive information or intellectual property.
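
Adversarial examples can be illustrated with the Fast Gradient Sign Method (FGSM), a well-known attack that nudges each input feature a small, fixed amount in the direction that most increases the model's loss. The sketch below applies FGSM to a tiny logistic-regression model. The weights, input, and perturbation budget `eps` are hypothetical values chosen so the effect is easy to follow.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y_true, eps):
    """Fast Gradient Sign Method for logistic regression.

    For log loss, the gradient with respect to input feature i is
    (p - y_true) * w_i, so each feature is shifted by eps in the
    direction that raises the loss.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y_true) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


# Hypothetical trained weights and a correctly classified input (label 1).
w, b = [2.0, -3.0], 0.5
x = [1.0, 0.5]

x_adv = fgsm(w, b, x, y_true=1, eps=0.25)
print(x_adv)                                 # [0.75, 0.75]
print(round(predict_proba(w, b, x), 3))      # 0.731 (class 1)
print(round(predict_proba(w, b, x_adv), 3))  # 0.438 (class 0)
```

With only two features, the change here is large relative to the input. In high-dimensional inputs such as images, a perturbation this small per feature can be imperceptible yet still flip the prediction, because the small changes add up across many features.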

Robust security measures are essential to protect AI systems and their data. This includes:

- Data encryption and access control: Protecting sensitive data from unauthorized access.

- Regular security audits and penetration testing: Identifying and addressing vulnerabilities before they can be exploited.

- Secure model development and deployment practices: Employing secure coding practices and deploying AI systems in secure environments.
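
One concrete, low-cost deployment control is to verify a model artifact's integrity before loading it, which helps catch a tampered or swapped file. The sketch below compares a file's SHA-256 digest against a previously recorded value using Python's standard `hashlib` and `hmac` modules. The file name and contents are hypothetical. The check only helps if the expected digest is itself stored and distributed securely, for example in a signed release manifest.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hex SHA-256 digest of a file, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_file(path: Path, expected_hex: str) -> None:
    """Raise if the artifact does not match its recorded digest."""
    actual = sha256_of(path)
    # compare_digest compares in time independent of where the strings differ.
    if not hmac.compare_digest(actual, expected_hex.lower()):
        raise RuntimeError(f"integrity check failed for {path}")


with tempfile.TemporaryDirectory() as tmp:
    model_path = Path(tmp) / "model.bin"  # hypothetical artifact
    model_path.write_bytes(b"example model weights")
    recorded = hashlib.sha256(b"example model weights").hexdigest()

    verify_model_file(model_path, recorded)  # passes silently
    original_ok = True

    model_path.write_bytes(b"tampered weights")
    try:
        verify_model_file(model_path, recorded)
        tamper_detected = False
    except RuntimeError:
        tamper_detected = True

print(original_ok, tamper_detected)  # True True
```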

The Need for Transparency and Explainability in AI Learning

Many AI systems operate as "black boxes," making it difficult to understand how they arrive at their decisions. This lack of transparency poses significant challenges for trust, accountability, and regulation. Explainable AI (XAI) aims to address this challenge by developing techniques to make AI systems more interpretable and understandable.
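
As one example of an interpretability technique, permutation feature importance treats the model as a black box. It shuffles one input feature at a time and measures how much accuracy drops. A large drop means the model relies on that feature. The sketch below is a minimal pure-Python version. The `black_box` model and the data are hypothetical, and this is only one of many XAI methods. Libraries such as scikit-learn provide a production version as `sklearn.inspection.permutation_importance`.

```python
import random


def accuracy(model, X, y):
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when each feature column is randomly shuffled.

    A large drop means the model relies on that feature; zero means it does not.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild the rows with only feature j replaced by shuffled values.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical "black box": internally it only looks at feature 0.
def black_box(row):
    return 1 if row[0] > 0.5 else 0


X = [[0.1, 5], [0.9, 3], [0.2, 7], [0.8, 1],
     [0.3, 2], [0.7, 9], [0.4, 4], [0.6, 6]]
y = [0, 1, 0, 1, 0, 1, 0, 1]

importances = permutation_importance(black_box, X, y)
for j, score in enumerate(importances):
    print(f"feature {j}: {score:.2f}")
```

Here, feature 1 scores exactly 0.0 because shuffling it never changes a prediction. Feature 0 scores clearly above zero. This reveals which input the model actually depends on without inspecting its internals.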

The benefits of explainable AI (XAI) are substantial:

- Increased trust and acceptance: Users are more likely to trust and accept AI systems if they understand how they work.

- Improved debugging and troubleshooting: Explainability makes it easier to identify and correct errors in AI systems.

- Enhanced accountability and regulation: Transparent AI systems are easier to regulate and hold accountable for their actions.

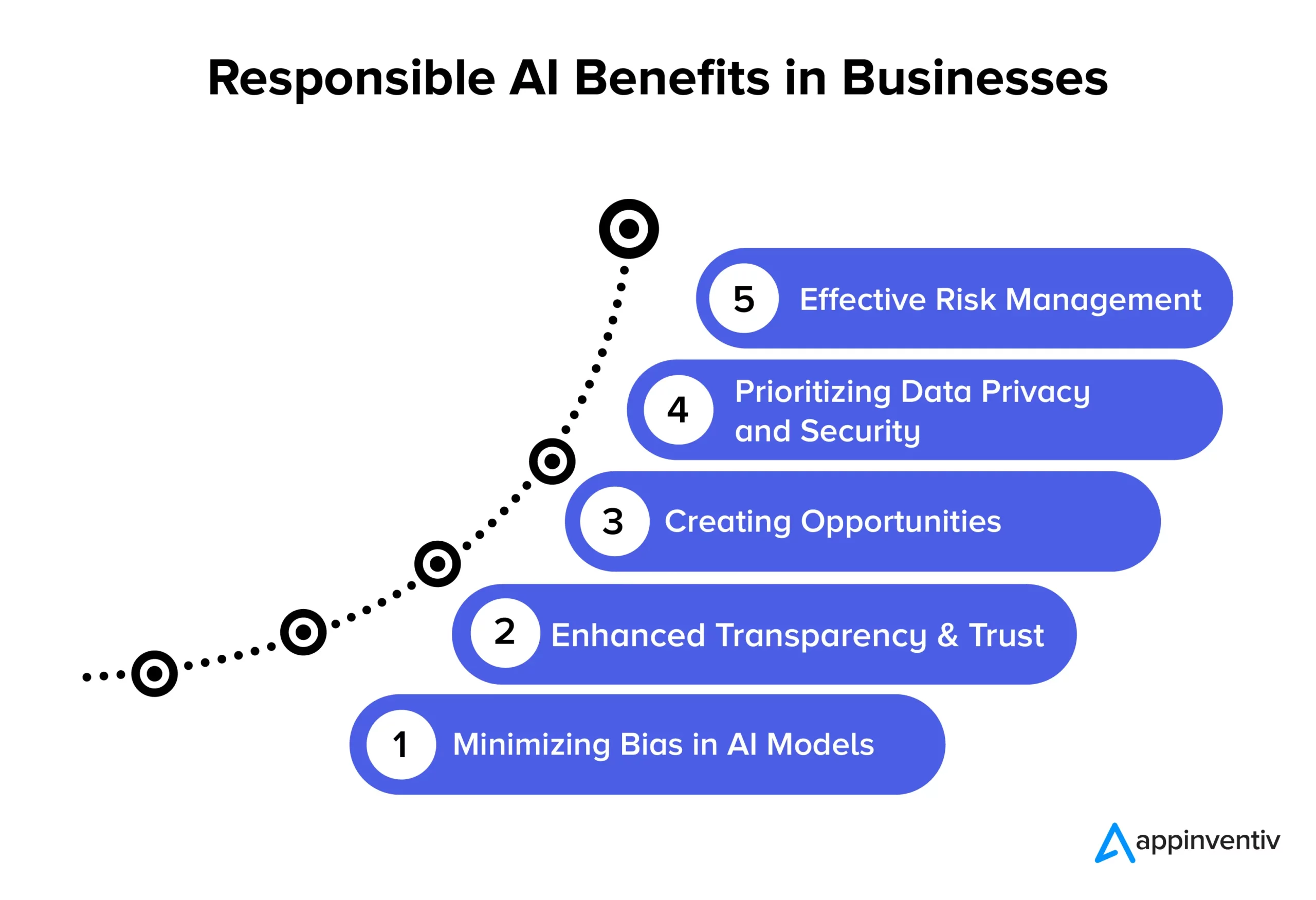

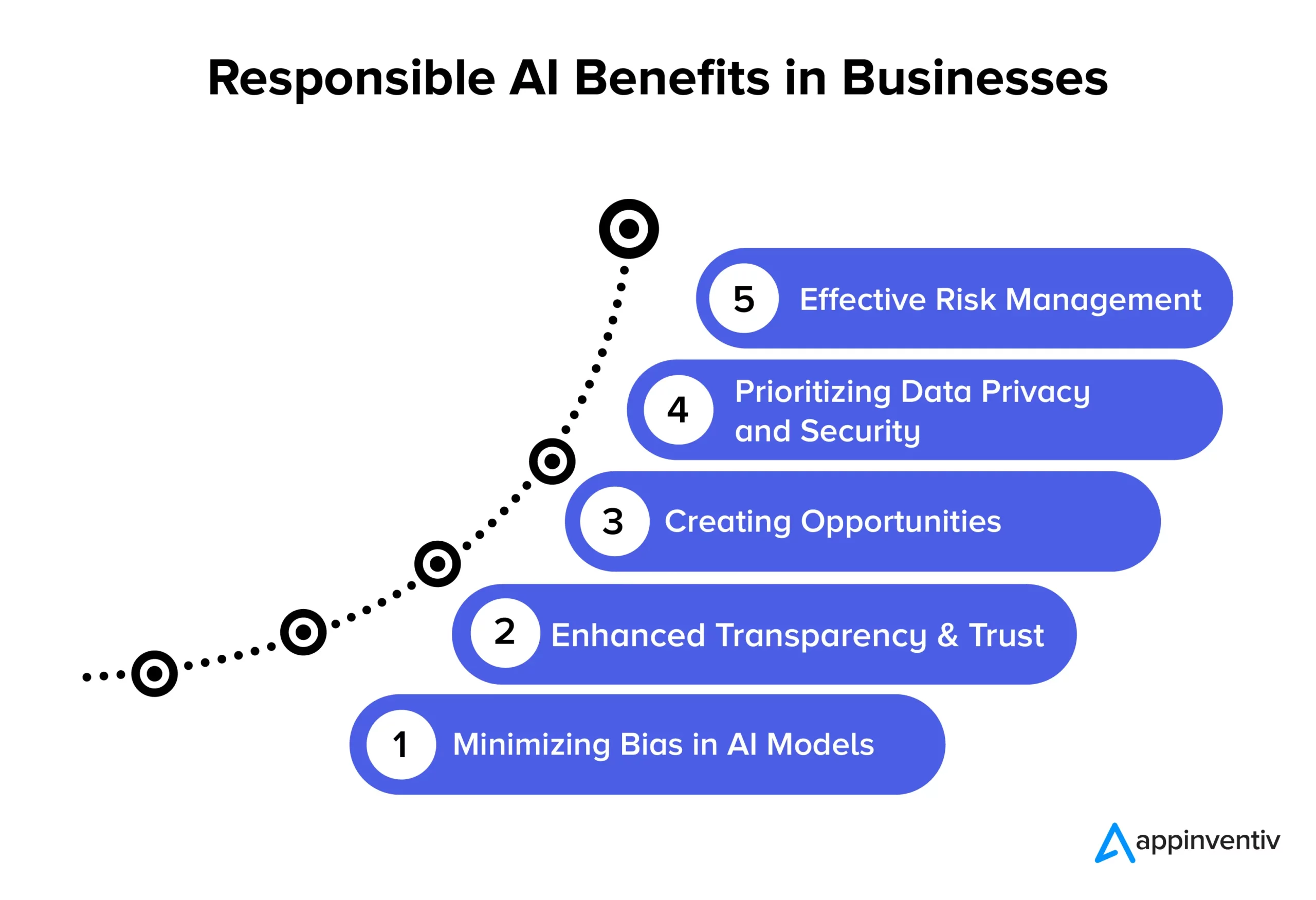

Addressing the Challenges of Responsible AI Learning

The responsible use of AI learning requires addressing the interconnected challenges of data bias, ethical concerns, security risks, and the need for transparency. Building trust in AI necessitates proactive measures to mitigate these risks. Individuals and organizations working with AI must adopt responsible AI learning strategies that prioritize fairness, accountability, and transparency. This includes investing in robust data governance practices, implementing ethical guidelines, and utilizing XAI techniques to enhance transparency and understanding.

By embracing responsible AI development and actively engaging in discussions about the ethical and societal implications of AI learning, we can harness the transformative potential of this technology while mitigating its risks and ensuring a future where AI benefits all of humanity.