The Link Between Algorithmic Radicalization And Mass Shootings: Should Tech Companies Be Blamed?

The Mechanisms of Algorithmic Radicalization

Social media algorithms, designed to maximize user engagement, can inadvertently create echo chambers and filter bubbles. These mechanisms contribute to algorithmic radicalization by exposing users to increasingly extreme content. Filter bubbles, echo chambers, recommendation algorithms, and reinforcement learning are central to understanding this process.

- Recommendation Systems and the Rabbit Hole: Recommendation systems personalize content feeds, suggesting material similar to what users have previously engaged with. This can lead users down a "rabbit hole" of increasingly extreme viewpoints, reinforcing existing biases and exposing them to radical ideologies they might not otherwise encounter.

- Reinforcement Learning and Radical Beliefs: Ranking systems trained to maximize engagement, in some cases with reinforcement learning, are rewarded for showing content that elicits strong reactions, even if that content is hateful or violent. This creates a feedback loop that can strengthen radical beliefs and promote the spread of extremist ideologies.

- Misinformation and Propaganda: Algorithms can be exploited to spread misinformation and propaganda related to violence, creating fertile ground for extremist narratives and potentially inciting real-world violence. Examples include conspiracy theories and manipulated videos that fuel hatred and incite action.
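The feedback loop described in the points above can be illustrated with a deliberately simplified simulation. This is a toy sketch, not a model of any real platform: the 0-to-1 "extremity" scale, the `simulate_feed` function, and the `pull` and `novelty_bias` parameters are all hypothetical, and the key assumption (that a user engages most with content slightly more extreme than their current position) is built in by hand rather than measured.

```python
def simulate_feed(steps=50, start=0.1, pull=0.2, novelty_bias=0.05):
    """Toy model of an engagement-driven feed (all parameters hypothetical).

    Returns the user's position on a 0..1 'extremity' scale after each step.
    """
    # Hypothetical catalog: 101 items scored 0.00, 0.01, ..., 1.00.
    catalog = [i / 100 for i in range(101)]
    position = start
    history = [position]
    for _ in range(steps):
        # ASSUMPTION: predicted engagement peaks for content slightly more
        # extreme than where the user currently sits.
        target = position + novelty_bias
        # The recommender shows the item with the highest predicted engagement,
        # which here is simply the item closest to the target.
        recommended = min(catalog, key=lambda item: abs(item - target))
        # The user moves a fraction of the way toward what they were shown.
        position += pull * (recommended - position)
        history.append(position)
    return history


if __name__ == "__main__":
    drifting = simulate_feed()
    neutral = simulate_feed(novelty_bias=0.0)
    print(f"with engagement bias:    {drifting[0]:.2f} -> {drifting[-1]:.2f}")
    print(f"without engagement bias: {neutral[0]:.2f} -> {neutral[-1]:.2f}")
```

In this sketch the drift comes entirely from the assumed engagement bias: with `novelty_bias=0.0` the recommender keeps serving content at the user's starting position and nothing moves. Whether real users and real ranking systems behave this way is an empirical question the toy model cannot answer.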

The Role of Social Media Platforms in Amplifying Hate Speech

Despite efforts at content moderation, social media platforms struggle to combat the proliferation of hate speech and extremist ideologies. Online harassment, the presence of extremist groups, the limits of content moderation, and the debate over de-platforming all illustrate the challenges platforms face.

- Challenges of Content Moderation at Scale: The sheer volume of content uploaded to social media platforms makes effective content moderation incredibly difficult and resource-intensive. Human moderators can't possibly review every piece of content, leaving a significant amount unmoderated and potentially harmful.

- Ineffective Content Moderation Policies: Existing content moderation policies often lack clarity and consistency, leading to inconsistent enforcement and allowing extremist content to slip through the cracks. The criteria for removing content often vary across platforms and are subject to interpretation.

- The De-platforming Debate: De-platforming, or removing individuals or groups from online platforms, is a controversial strategy. While it can prevent the spread of hate speech, it also raises concerns about freedom of speech and the potential for driving extremism underground.

The Link Between Online Radicalization and Real-World Violence

A growing body of evidence links online radicalization to real-world acts of violence, including mass shootings. Understanding this link requires examining incel communities, online-to-offline violence, radicalization pathways, violent extremism, and mass shooter profiles.

- Case Studies: Numerous case studies demonstrate a clear pathway from online radicalization to offline violence. Individuals exposed to extremist content online have been shown to become increasingly isolated, radicalized, and prone to violence.

- Psychological Factors: The transition from online radicalization to offline action is complex and involves a range of psychological factors, including feelings of isolation, resentment, and a sense of belonging within the online community.

- Online Communities and Support for Violence: Online communities provide a breeding ground for radicalization, offering support, validation, and encouragement for violent acts. These communities can foster a sense of shared identity and purpose, leading to a heightened sense of entitlement and a diminished regard for human life.

The Debate on Tech Company Accountability

The legal and ethical responsibilities of tech companies in preventing the spread of extremist content and mitigating the consequences of algorithmic radicalization are fiercely debated. This discussion spans legal responsibility, ethical considerations, regulatory frameworks, Section 230, and government regulation.

- Section 230 and its Implications: Section 230 of the Communications Decency Act (in the US) shields online platforms from liability for content posted by their users. This provision is frequently cited in debates about tech company responsibility, with critics arguing that it allows platforms to evade accountability for harmful content. Similar legal protections exist in other countries.

- Legal Avenues for Accountability: Several legal avenues are being explored to hold tech companies accountable, including lawsuits from victims of online violence and increased regulatory scrutiny.

- Ethical Arguments for Regulation: Ethical arguments for greater regulation center on the need to protect users from harm and to prevent the spread of dangerous ideologies. The argument is that tech companies have a moral obligation to mitigate the risks associated with their platforms.

Conclusion

The link between algorithmic radicalization and mass shootings is complex, but it cannot be dismissed. While social media algorithms themselves are not directly responsible for acts of violence, their role in amplifying hate speech and creating echo chambers cannot be ignored. The question of whether tech companies are complicit is nuanced, but their responsibility to mitigate the risks associated with their platforms is clear. Section 230 and similar legal protections need careful reevaluation in light of the societal harm caused by online radicalization.

The fight against algorithmic radicalization and its contribution to mass shootings requires a multifaceted approach. We must demand accountability from tech companies, advocate for stronger regulations, and support organizations dedicated to combating online extremism. Participate in the ongoing conversation to ensure a safer online environment and prevent future tragedies. Let's work together to dismantle the mechanisms of algorithmic radicalization and build a more responsible digital world.

Featured Posts

-

March Rainfall Insufficient To Alleviate Water Deficit

May 30, 2025

March Rainfall Insufficient To Alleviate Water Deficit

May 30, 2025 -

Investigating The Role Of Algorithms In Radicalizing Mass Shooters Corporate Responsibility

May 30, 2025

Investigating The Role Of Algorithms In Radicalizing Mass Shooters Corporate Responsibility

May 30, 2025 -

Manchester United Elogia Bruno Fernandes O Magnifico Portugues

May 30, 2025

Manchester United Elogia Bruno Fernandes O Magnifico Portugues

May 30, 2025 -

Atp Madrid Open Jack Draper Storms Into Final

May 30, 2025

Atp Madrid Open Jack Draper Storms Into Final

May 30, 2025 -

Update Susquehanna River Assault Case Proceeds To Court

May 30, 2025

Update Susquehanna River Assault Case Proceeds To Court

May 30, 2025

Latest Posts

-

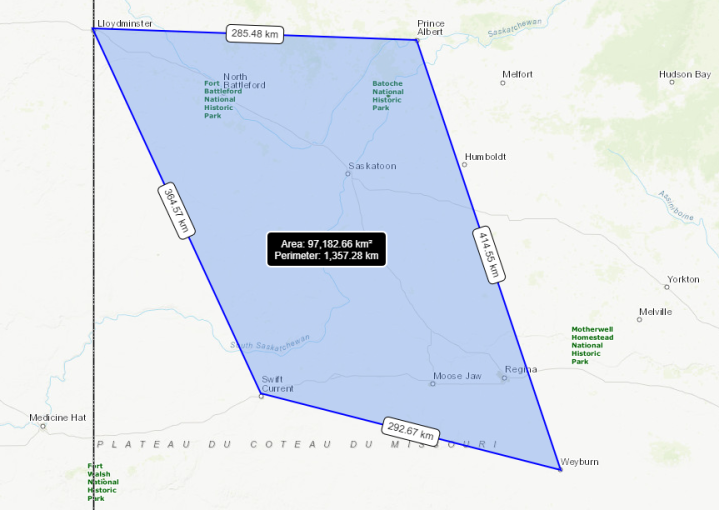

Hotter Temperatures Increase Saskatchewans Wildfire Threat

May 31, 2025

Hotter Temperatures Increase Saskatchewans Wildfire Threat

May 31, 2025 -

Saskatchewan Wildfires Preparing For A More Intense Season

May 31, 2025

Saskatchewan Wildfires Preparing For A More Intense Season

May 31, 2025 -

Saskatchewan Wildfire Season Hotter Summer Fuels Concerns

May 31, 2025

Saskatchewan Wildfire Season Hotter Summer Fuels Concerns

May 31, 2025 -

Urgent Action Needed Early Wildfire Season In Canada And Minnesota

May 31, 2025

Urgent Action Needed Early Wildfire Season In Canada And Minnesota

May 31, 2025 -

Deadly Wildfires Rage In Eastern Manitoba Emergency Crews Respond

May 31, 2025

Deadly Wildfires Rage In Eastern Manitoba Emergency Crews Respond

May 31, 2025