Investigating The Role Of Algorithms In Radicalizing Mass Shooters: Corporate Responsibility

The Amplification Effect of Algorithms

Algorithms, the invisible engines driving our online experiences, are not neutral arbiters of information. They actively shape what we see, read, and interact with online, often with unintended – and devastating – consequences.

Echo Chambers and Filter Bubbles

Algorithm-driven social media platforms create echo chambers and filter bubbles, reinforcing pre-existing beliefs and isolating users from diverse perspectives. This effect is particularly dangerous when it comes to extremist ideologies.

- Examples of algorithms promoting extremist content: Ranking systems optimized for engagement tend to favor sensational and inflammatory content, including extremist viewpoints, which increases the visibility and reach of that content.

- Lack of content moderation: Inadequate content moderation policies and enforcement allow extremist content to proliferate unchecked, further exacerbating the problem.

- Impact on susceptible individuals: Individuals prone to radicalization are particularly vulnerable within these echo chambers, as their views are constantly reinforced and amplified, leading to further isolation and potentially violent actions. This algorithmic bias pushes susceptible individuals further down the rabbit hole of extremism.
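The reinforcement loop described above can be illustrated with a toy simulation. This is a minimal sketch under stated assumptions, not a model of any real platform: the function `simulate_feed`, the two topic labels, and the engagement rates are all hypothetical values chosen for illustration. It assumes a feed that reallocates exposure in proportion to how much engagement each topic received in the previous round.

```python
def simulate_feed(shares, engagement_rate, rounds):
    """Return the feed composition after each round of engagement-driven reweighting.

    shares: starting fraction of the feed given to each topic (sums to 1).
    engagement_rate: hypothetical probability that a shown item gets engagement.
    """
    history = [dict(shares)]
    for _ in range(rounds):
        # Engagement earned by each topic is its exposure times its engagement rate.
        weighted = {topic: shares[topic] * engagement_rate[topic] for topic in shares}
        total = sum(weighted.values())
        # The next feed allocates exposure in proportion to earned engagement.
        shares = {topic: weight / total for topic, weight in weighted.items()}
        history.append(shares)
    return history


# Illustrative numbers only (assumptions, not measurements).
START = {"mainstream": 0.9, "extreme": 0.1}
RATES = {"mainstream": 0.05, "extreme": 0.15}

history = simulate_feed(START, RATES, rounds=10)
for round_number in (0, 2, 10):
    print(round_number, round(history[round_number]["extreme"], 3))
```

With these made-up rates, the share of the more engaging topic grows from a tenth of the feed to half of it after two rounds and dominates after ten. The point of the sketch is the shape of the feedback loop, not the specific numbers.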

Personalized Recommendations and Targeted Advertising

Personalized recommendations and targeted advertising, features designed to enhance user experience, can inadvertently expose individuals to radicalizing content they might never have encountered otherwise.

- Examples of targeted ads promoting extremist ideologies: Sophisticated targeting allows extremist groups to reach specific demographics with tailored messages, furthering their recruitment efforts.

- The role of data collection in personalized recommendations: The vast amount of data collected by social media platforms allows for highly targeted recommendations, potentially exposing individuals to extremist content based on their online activity.

- Lack of transparency in algorithmic processes: The lack of transparency surrounding how these algorithms function makes it difficult to assess their potential for harm and to implement effective countermeasures. This opacity hinders efforts to understand and regulate the spread of online extremism.

The Spread of Misinformation and Conspiracy Theories

Algorithms play a significant role in the rapid dissemination of misinformation and conspiracy theories, which often serve as a breeding ground for extremist ideologies.

The Role of Algorithms in Disseminating False Information

Algorithms, designed to maximize engagement, frequently prioritize sensational and emotionally charged content, regardless of its accuracy. This prioritization of engagement over accuracy has devastating consequences.

- Examples of algorithms prioritizing engagement over accuracy: Ranking systems often surface sensationalized or misleading narratives regardless of their factual basis, because that is the kind of content that draws engagement.

- The spread of fake news related to mass shootings: False narratives about the causes and motivations behind mass shootings can spread rapidly, fueling existing grievances, extremist beliefs, and conspiracy theories, and potentially inciting further violence.

- The difficulty in fact-checking online content: The speed at which misinformation spreads online far outpaces the ability of fact-checkers to debunk it, creating a constant stream of false narratives that can radicalize individuals.
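The trade-off between engagement and accuracy described above can be sketched in a few lines. This is a hedged illustration only: the `Post` fields, the scores, and the `accuracy_weight` parameter are hypothetical, and real ranking systems are far more complex and not publicly documented. The sketch compares a ranking that uses predicted engagement alone with one that blends in an accuracy signal.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    title: str
    predicted_engagement: float  # hypothetical score in [0, 1]
    accuracy: float              # hypothetical fact-check score in [0, 1]


def rank_by_engagement(posts):
    """Order posts by predicted engagement only, ignoring accuracy."""
    return sorted(posts, key=lambda p: p.predicted_engagement, reverse=True)


def rank_with_accuracy(posts, accuracy_weight=0.5):
    """Order posts by a weighted blend of engagement and accuracy."""
    def score(post):
        return ((1 - accuracy_weight) * post.predicted_engagement
                + accuracy_weight * post.accuracy)
    return sorted(posts, key=score, reverse=True)


# Illustrative data only (assumptions, not measurements).
POSTS = [
    Post("Sensational false claim", 0.9, 0.1),
    Post("Verified report", 0.5, 0.95),
    Post("Misleading rumor", 0.7, 0.2),
]

print([p.title for p in rank_by_engagement(POSTS)])
print([p.title for p in rank_with_accuracy(POSTS)])
```

Under these assumed scores, the engagement-only ranking puts the false claim first, while the blended ranking moves the verified report to the top. How to obtain a trustworthy accuracy signal at scale is the hard part, which this sketch does not address.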

The Impact of Viral Content and Online Communities

Viral videos, memes, and online communities, all facilitated and amplified by algorithms, create breeding grounds for extremist ideologies to spread rapidly.

- Examples of viral content promoting violence: Graphic content glorifying violence or promoting extremist ideologies can go viral quickly, reaching millions of users and influencing vulnerable individuals.

- The role of online forums in radicalization: Online forums can function as echo chambers, giving extremists spaces to connect, share their beliefs, and radicalize each other.

- The challenges of monitoring and regulating online communities: The sheer volume of content and the decentralized nature of many online communities make it extremely difficult to effectively monitor and regulate the spread of extremist ideologies.

Corporate Responsibility and Accountability

The power of algorithms to influence individuals and shape online narratives necessitates a critical examination of corporate responsibility and accountability.

The Ethical Obligations of Tech Companies

Tech companies have an ethical obligation to mitigate the risks associated with their algorithms' contribution to radicalization.

- Examples of corporate negligence: Failure to adequately address the spread of extremist content, lack of transparency in algorithmic processes, and insufficient investment in content moderation are examples of corporate negligence.

- Calls for increased transparency and accountability: Increased algorithmic transparency and independent audits are crucial steps towards fostering accountability.

- The need for improved content moderation strategies: Improved content moderation strategies, including more effective detection of extremist content and quicker response times, are necessary.

Legal and Regulatory Frameworks

Existing legal and regulatory frameworks need significant strengthening to hold tech companies accountable for their role in the spread of online extremism.

- Existing laws related to online hate speech and violence: Current laws often struggle to keep pace with the rapid evolution of online platforms and the sophistication of extremist tactics.

- Limitations of current regulations: Regulations often lack clarity, enforcement mechanisms, and the resources to effectively address the complexities of online radicalization.

- Suggestions for improved legal frameworks: Stronger regulations are needed, ensuring better content moderation, increased transparency, and robust penalties for non-compliance. Data protection and cybersecurity must also be prioritized.

Conclusion

The patterns described above suggest that algorithms can play a significant role in radicalizing mass shooters. From creating echo chambers and amplifying extremist content to facilitating the spread of misinformation, the impact of these technologies is hard to ignore. Tech companies bear substantial responsibility for addressing this issue. We must demand greater transparency and accountability regarding their algorithms and support policies that promote responsible technology development and use. Failure to do so will only further empower those who seek to exploit algorithms for harmful purposes. Let's work together to mitigate the role of algorithms in radicalizing mass shooters and build a safer, more responsible online environment.

Featured Posts

-

Regreso De Bts Cuanto Tiempo Despues Del Servicio Militar

May 30, 2025

Regreso De Bts Cuanto Tiempo Despues Del Servicio Militar

May 30, 2025 -

Andre Agassi Marturie Uimitoare Stresul Competitiei

May 30, 2025

Andre Agassi Marturie Uimitoare Stresul Competitiei

May 30, 2025 -

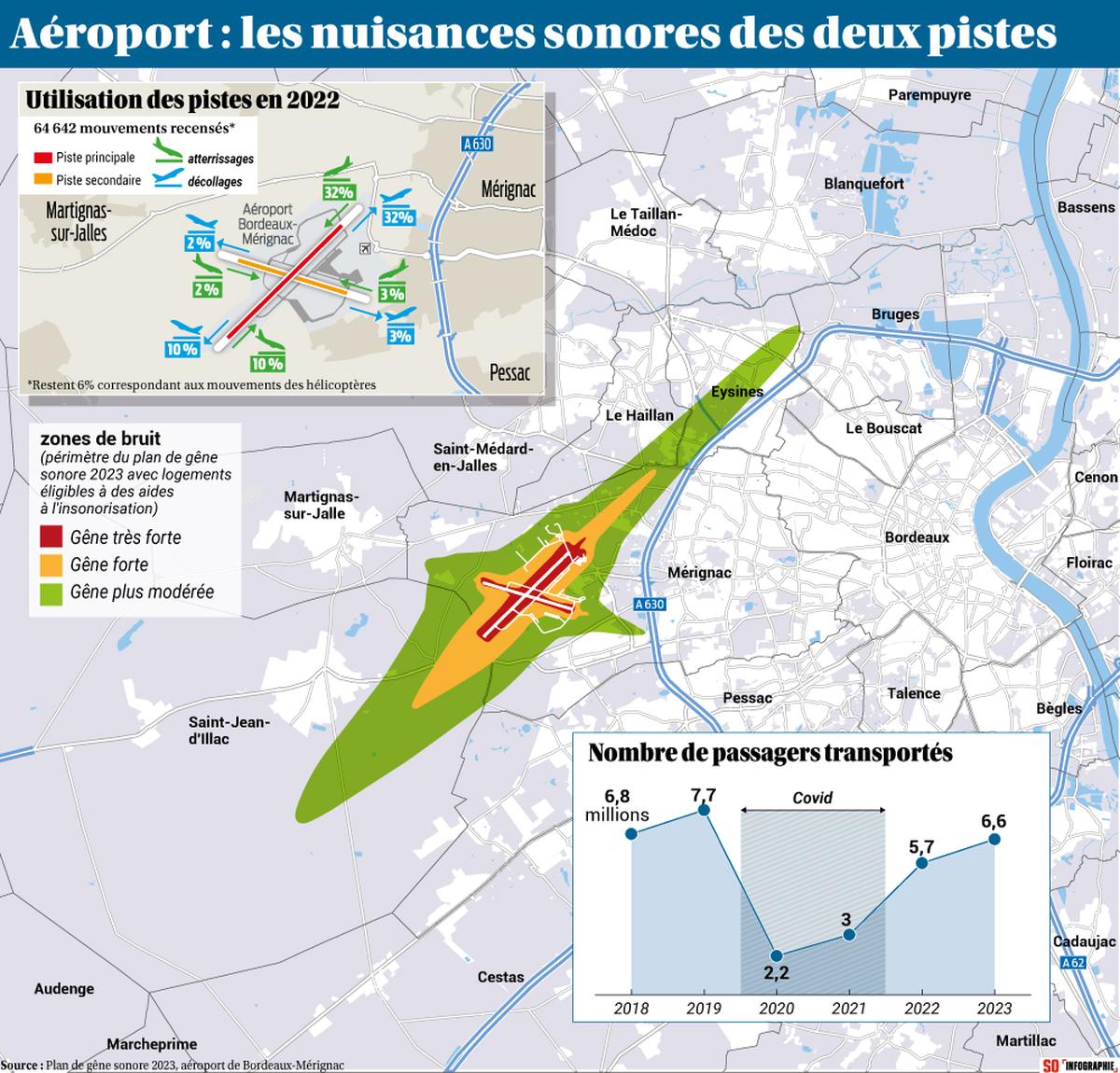

Aeroport De Bordeaux Manifestation Contre Le Maintien De La Piste Secondaire

May 30, 2025

Aeroport De Bordeaux Manifestation Contre Le Maintien De La Piste Secondaire

May 30, 2025 -

Inflation And Unemployment Driving Forces Behind Increased Uncertainty

May 30, 2025

Inflation And Unemployment Driving Forces Behind Increased Uncertainty

May 30, 2025 -

The Closure Of Anchor Brewing Company Impact And Aftermath

May 30, 2025

The Closure Of Anchor Brewing Company Impact And Aftermath

May 30, 2025

Latest Posts

-

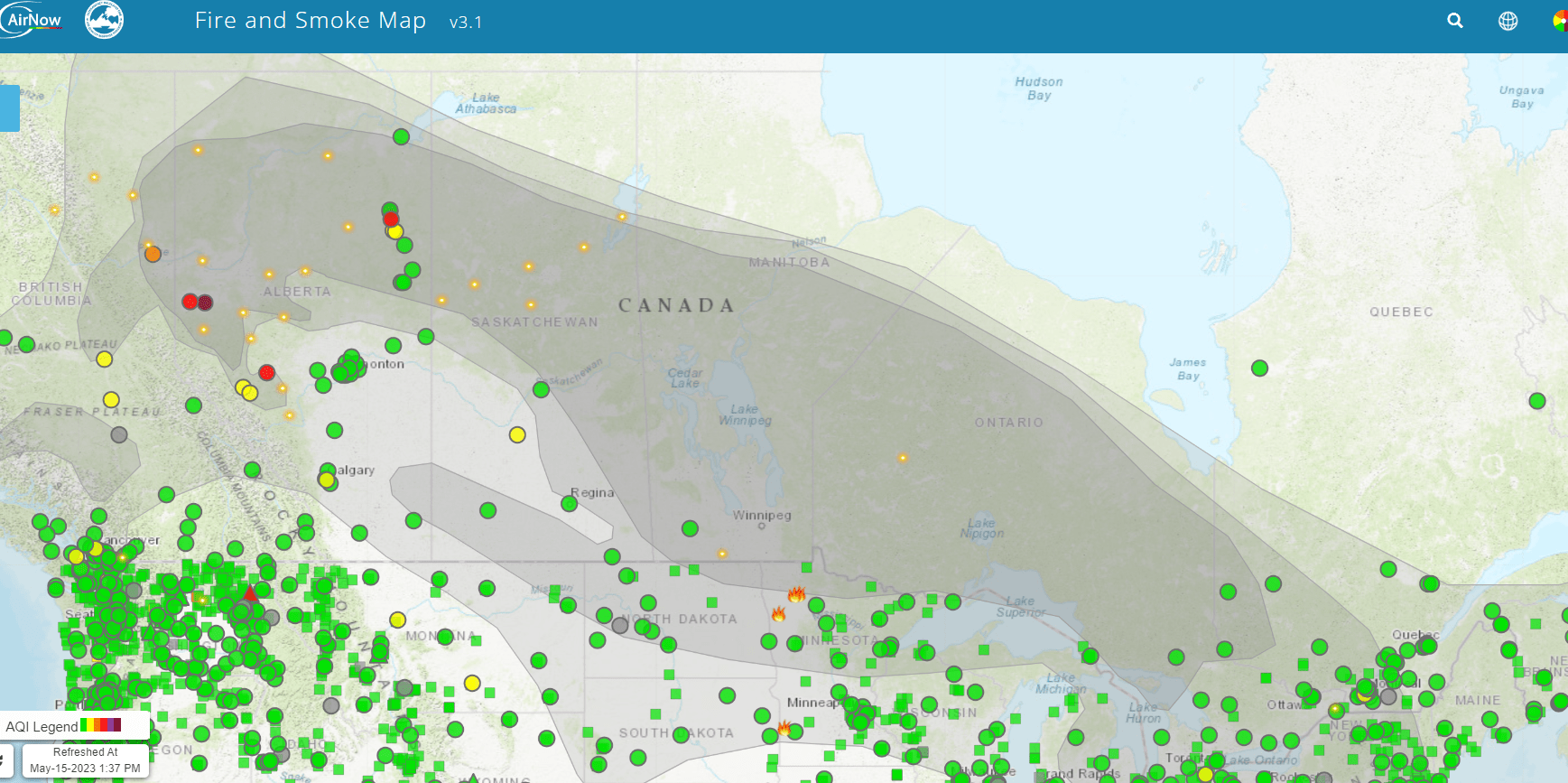

Saskatchewan Wildfire Season Hotter Summer Fuels Concerns

May 31, 2025

Saskatchewan Wildfire Season Hotter Summer Fuels Concerns

May 31, 2025 -

Urgent Action Needed Early Wildfire Season In Canada And Minnesota

May 31, 2025

Urgent Action Needed Early Wildfire Season In Canada And Minnesota

May 31, 2025 -

Deadly Wildfires Rage In Eastern Manitoba Emergency Crews Respond

May 31, 2025

Deadly Wildfires Rage In Eastern Manitoba Emergency Crews Respond

May 31, 2025 -

Eastern Manitoba Wildfires Ongoing Battle Against Deadly Flames

May 31, 2025

Eastern Manitoba Wildfires Ongoing Battle Against Deadly Flames

May 31, 2025 -

Wildfires Ignite Early Canada And Minnesota Battle Blazes

May 31, 2025

Wildfires Ignite Early Canada And Minnesota Battle Blazes

May 31, 2025