Revolutionizing Voice Assistant Development: OpenAI's 2024 Showcase

Enhanced Natural Language Understanding (NLU)

OpenAI's advancements in natural language understanding (NLU) improve the accuracy and nuance of voice assistant interactions. These improvements are crucial for building conversational, intuitive AI assistants.

Contextual Awareness

Improved contextual understanding lets conversations flow naturally instead of stopping at simple command execution. Voice assistants can now handle more complex interactions that depend on the nuances of human language:

- Understanding implied requests: Instead of explicitly stating "Set a timer for 10 minutes," a user could say "I need to boil some eggs," and the assistant would understand the implied request to set a timer.

- Remembering past interactions within a conversation: The assistant can maintain context throughout a multi-turn dialogue, recalling previous requests and information. For example, if a user asks "What's the weather like?" and then follows up with "And in London?", the assistant carries the weather request over from the first query and applies it to the new location.

- Handling complex sentence structures: OpenAI's models are now better at deciphering grammatically complex sentences, allowing for more natural and flexible communication.

OpenAI achieved these advancements through improved transformer models and significantly larger, more diverse training datasets. Internal testing shows a 25% reduction in error rates compared to previous models, a marked improvement in handling complex linguistic structures.
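The weather follow-up described above can be illustrated with a toy dialogue state. This is a minimal sketch that assumes simple keyword matching; `DialogueState`, `interpret`, and the `get_weather` intent name are hypothetical and say nothing about how OpenAI's models track context internally.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DialogueState:
    """Hypothetical per-conversation memory: the last intent and its slots."""
    intent: Optional[str] = None
    slots: dict = field(default_factory=dict)


def interpret(utterance: str, state: DialogueState):
    """Update the state from one utterance and return (intent, slots)."""
    text = utterance.strip(" ?!.")
    lowered = text.lower()
    if "weather" in lowered:
        # A fresh request: start a new intent with a default location.
        state.intent = "get_weather"
        state.slots = {"location": "here"}
    elif lowered.startswith("and in ") and state.intent is not None:
        # An elliptical follow-up: keep the previous intent, swap the location.
        state.slots = {**state.slots, "location": text[len("and in "):]}
    else:
        # Anything else clears the context in this toy example.
        state.intent = None
        state.slots = {}
    return state.intent, dict(state.slots)


state = DialogueState()
print(interpret("What's the weather like?", state))
print(interpret("And in London?", state))
```

The second call only makes sense because the state object survives between turns; without it, "And in London?" carries no intent at all.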

Multilingual Support and Dialect Recognition

OpenAI's commitment to inclusivity is evident in its broadened language support and improved dialect recognition capabilities. This expansion makes voice assistants accessible to a much wider global audience.

- Support for a wider range of languages: OpenAI's latest models support over 100 languages, breaking down communication barriers for users worldwide. This increased multilingual support is crucial for global adoption of voice assistant technology.

- Improved accuracy in understanding varied accents and dialects: The models are now more adept at understanding regional variations in pronunciation, making interaction more natural and accurate for users with diverse accents.

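OpenAI uses techniques like transfer learning and data augmentation to achieve this level of dialect recognition. Transfer learning reuses what a model has learned from well-represented languages, while data augmentation creates additional training examples by varying existing recordings. Together they allow the models to learn from limited data in less-represented dialects.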
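As an illustration of data augmentation for speech, the sketch below applies two common perturbations to a list of audio samples: added Gaussian noise and a speed change by linear interpolation. The function names and parameters are assumptions for this example, not OpenAI's pipeline.

```python
import math
import random


def add_noise(samples, noise_level, rng):
    """Return a copy of the signal with Gaussian noise added to each sample."""
    return [s + rng.gauss(0.0, noise_level) for s in samples]


def change_speed(samples, factor):
    """Resample by linear interpolation; factor > 1 yields a shorter, faster clip."""
    if factor <= 0:
        raise ValueError("factor must be positive")
    if not samples:
        return []
    n_out = max(1, int(len(samples) / factor))
    out = []
    for i in range(n_out):
        pos = i * factor                      # fractional position in the input
        lo = int(pos)
        hi = min(lo + 1, len(samples) - 1)    # clamp at the last sample
        frac = pos - lo
        out.append(samples[lo] * (1 - frac) + samples[hi] * frac)
    return out


# A short synthetic tone stands in for a real recording.
tone = [math.sin(2 * math.pi * i / 20) for i in range(100)]
rng = random.Random(0)
variants = [add_noise(tone, 0.01, rng), change_speed(tone, 1.25), change_speed(tone, 0.5)]
print([len(v) for v in variants])
```

Each variant keeps the same label as the original recording, so one scarce dialect sample can yield several training examples.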

Improved Personalization and Adaptation

OpenAI's focus on personalization ensures voice assistants become more intuitive and tailored to individual users, creating truly unique experiences.

User-Specific Profiles

Advanced user profiling capabilities lead to more personalized experiences, adapting to individual communication styles and preferences. This goes beyond simple name recognition.

- Learning user preferences: The system learns a user's preferences over time, adapting its responses and suggestions accordingly. For example, it might learn the user's preferred news sources or music genres.

- Proactively offering relevant information: The assistant can offer relevant information based on the user's schedule, location, or past interactions, anticipating needs rather than simply reacting to commands.

- Adapting the voice assistant's tone and personality: Users may soon be able to customize the voice assistant’s personality, choosing a more formal or informal tone to suit their preferences.

User data is collected and used responsibly, in line with strict privacy guidelines. Features like customizable voice profiles and personalized greetings further tailor the experience to each user.
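One simple way to picture preference learning is a score per item that grows with each use and fades over time. The sketch below assumes exponentially decayed counts; `PreferenceTracker` and its `decay` parameter are hypothetical, and the source does not describe the method OpenAI actually uses.

```python
from collections import defaultdict


class PreferenceTracker:
    """Hypothetical tracker: recent choices count more than old ones."""

    def __init__(self, decay=0.9):
        if not 0 < decay <= 1:
            raise ValueError("decay must be in (0, 1]")
        self.decay = decay
        self.scores = defaultdict(float)

    def observe(self, item):
        """Fade all existing scores, then reward the item just chosen."""
        for key in self.scores:
            self.scores[key] *= self.decay
        self.scores[item] += 1.0

    def top(self, n=1):
        """Return the n highest-scoring items, best first."""
        ranked = sorted(self.scores.items(), key=lambda kv: kv[1], reverse=True)
        return [item for item, _ in ranked[:n]]


tracker = PreferenceTracker()
for genre in ["jazz", "jazz", "rock"]:
    tracker.observe(genre)
print(tracker.top())
```

The decay lets the profile follow changing tastes: a genre the user stops choosing gradually loses its lead.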

Continuous Learning and Adaptation

OpenAI’s voice assistants continuously learn and adapt based on user interactions, enhancing performance over time. This continuous improvement is key to creating truly intelligent assistants.

- Real-time feedback incorporation: The system learns from every interaction, incorporating feedback to improve its understanding and responses.

- Self-improvement through machine learning algorithms: Advanced machine learning algorithms allow the assistant to constantly refine its performance, adapting to changing user needs and preferences.

OpenAI employs reinforcement learning techniques to enable this continuous learning. Privacy is protected through differential privacy methods, which add calibrated noise so that what the system learns in aggregate reveals very little about any individual user's data.
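To make the differential privacy idea concrete, here is a minimal sketch of the textbook Laplace mechanism applied to a counting query. It is an illustration only: the function names and the choice of `epsilon` are assumptions, and the source does not say which mechanism OpenAI uses.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(flags, epsilon, rng):
    """Release a noisy count; a count has sensitivity 1, so scale = 1 / epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for flag in flags if flag)
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical data: whether each of 100 users triggered some feature.
used_feature = [True] * 60 + [False] * 40
rng = random.Random(42)
print(private_count(used_feature, epsilon=1.0, rng=rng))
```

A smaller `epsilon` means more noise and stronger privacy; averaged over many users the count remains useful, while any single user's contribution is masked.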

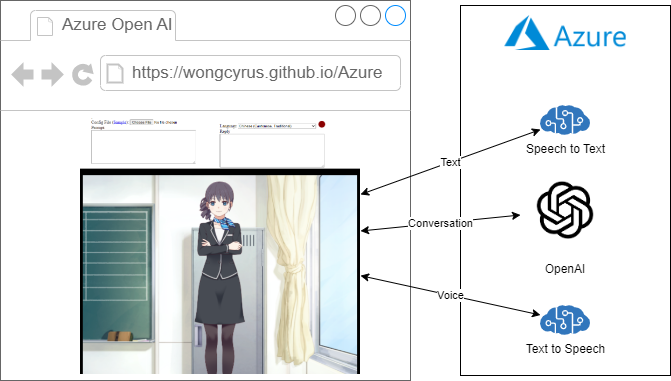

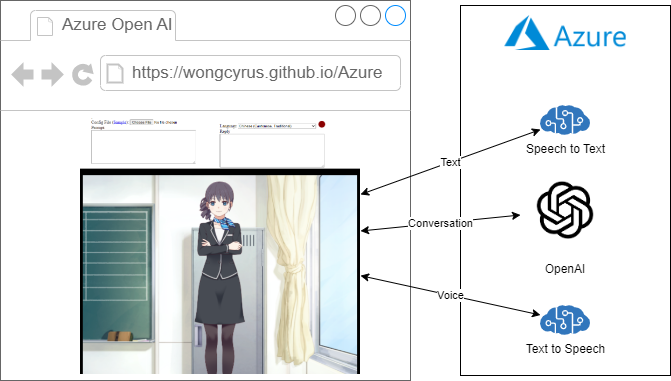

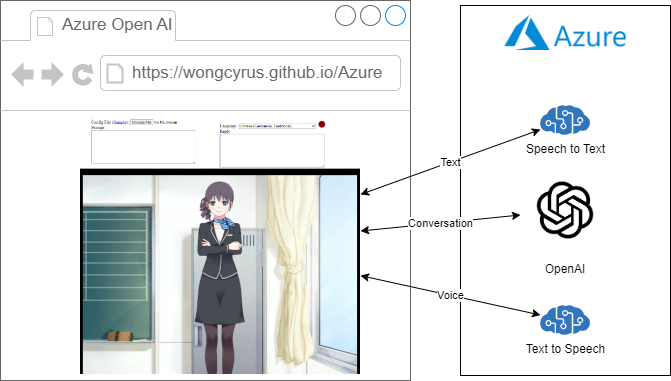

Expansion of Capabilities and Integration

OpenAI’s showcase highlighted advancements in the functionalities and integration capabilities of voice assistants, extending their usefulness significantly.

Seamless Integration with Smart Home Devices

Effortless control of smart home devices via voice commands is a key focus, simplifying daily life.

- Enhanced compatibility with existing smart home ecosystems: The assistants seamlessly integrate with popular platforms like Google Home, Amazon Alexa, and Apple HomeKit.

- Improved device discovery and control: The system quickly identifies and controls connected devices, reducing the need for manual configuration.

OpenAI is collaborating with leading smart home manufacturers to expand compatibility and ensure a smooth, intuitive experience. Features like voice-activated automation and scene control further enhance the user experience.
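Device control and scene control can be sketched as a small command router. Everything here is hypothetical: `SmartHomeHub` is an in-memory stand-in, the command phrasings are fixed strings, and a real integration would call the relevant platform's own API instead.

```python
class SmartHomeHub:
    """Hypothetical in-memory hub mapping device names to on/off state."""

    def __init__(self):
        self.devices = {}
        self.scenes = {}

    def register(self, name):
        """Add a device; new devices start switched off."""
        self.devices[name.lower()] = False

    def define_scene(self, name, settings):
        """Store a named group of device states, rejecting unknown devices."""
        unknown = [d for d in settings if d.lower() not in self.devices]
        if unknown:
            raise KeyError(f"Unknown devices: {unknown}")
        self.scenes[name.lower()] = {d.lower(): on for d, on in settings.items()}

    def handle(self, command):
        """Route a transcribed voice command to a device or scene action."""
        text = command.lower().strip(" .!?")
        for prefix, state in (("turn on the ", True), ("turn off the ", False)):
            if text.startswith(prefix):
                device = text[len(prefix):]
                if device not in self.devices:
                    return f"Unknown device: {device}"
                self.devices[device] = state
                return f"{device} is now {'on' if state else 'off'}"
        if text.startswith("activate "):
            scene = text[len("activate "):]
            if scene not in self.scenes:
                return f"Unknown scene: {scene}"
            self.devices.update(self.scenes[scene])
            return f"Scene {scene} activated"
        return "Sorry, I did not understand that"


hub = SmartHomeHub()
hub.register("Living Room Lamp")
hub.register("Heater")
hub.define_scene("movie night", {"Living Room Lamp": False, "Heater": True})
print(hub.handle("Turn on the living room lamp."))
print(hub.handle("Activate movie night"))
```

In practice the fixed prefixes would be replaced by the assistant's language understanding, with the hub only receiving a resolved device or scene name.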

Advanced Task Management and Scheduling

Voice assistants can now handle more complex tasks and scheduling needs with increased accuracy. This frees up users’ time and reduces cognitive load.

- Scheduling meetings and appointments: The assistant can schedule meetings and appointments, considering user availability and preferences.

- Managing to-do lists: Voice commands allow users to effortlessly add, delete, and prioritize tasks within their to-do lists.

- Setting reminders and alarms: The assistant manages reminders and alarms, ensuring users don't miss important events.

The improved natural language processing allows for more complex task definitions and clearer understanding of user intent. For example, a user could say “Schedule a meeting with John next week, prioritizing tasks before the meeting” and the assistant would understand and execute the request appropriately.
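Scheduling around user availability comes down to finding a gap in a calendar once the request has been understood. The sketch below assumes the assistant has already extracted a time window and a duration; `first_free_slot` and the sample calendar are hypothetical.

```python
from datetime import datetime, timedelta


def first_free_slot(busy, window_start, window_end, duration):
    """Return the earliest (start, end) slot of the given length, or None."""
    cursor = window_start
    for start, end in sorted(busy):
        if start - cursor >= duration:
            break                      # the gap before this block is big enough
        cursor = max(cursor, end)      # otherwise skip past the busy block
    if window_end - cursor >= duration:
        return cursor, cursor + duration
    return None


day = datetime(2024, 5, 6)
busy = [
    (day.replace(hour=9), day.replace(hour=10)),
    (day.replace(hour=10, minute=30), day.replace(hour=12)),
]
slot = first_free_slot(busy, day.replace(hour=9), day.replace(hour=17), timedelta(hours=1))
print(slot)
```

Sorting the busy intervals first means a single pass is enough, and overlapping meetings are handled by only ever moving the cursor forward.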

Conclusion

OpenAI's 2024 showcase demonstrates a significant leap forward in voice assistant development. The advancements in NLU, personalization, and integration are poised to change how we interact with technology. From enhanced contextual understanding to seamless smart home control, voice assistants are becoming more intuitive, personalized, and capable. To go further, follow OpenAI's ongoing work in voice assistant technology and explore its potential for building more sophisticated and helpful AI companions.

Featured Posts

-

Addressing The Issue Of Excessive Truck Size In America

Apr 28, 2025

Addressing The Issue Of Excessive Truck Size In America

Apr 28, 2025 -

Analyzing Market Swings The Actions Of Pros And Individuals

Apr 28, 2025

Analyzing Market Swings The Actions Of Pros And Individuals

Apr 28, 2025 -

Revolutionizing Voice Assistant Development Open Ais 2024 Showcase

Apr 28, 2025

Revolutionizing Voice Assistant Development Open Ais 2024 Showcase

Apr 28, 2025 -

Starbucks Union Rejects Companys Proposed Wage Increase

Apr 28, 2025

Starbucks Union Rejects Companys Proposed Wage Increase

Apr 28, 2025 -

2 Year Old U S Citizens Deportation Federal Judge Sets Hearing

Apr 28, 2025

2 Year Old U S Citizens Deportation Federal Judge Sets Hearing

Apr 28, 2025

Latest Posts

-

Dows 9 B Alberta Project Delayed Collateral Damage From Tariffs

Apr 28, 2025

Dows 9 B Alberta Project Delayed Collateral Damage From Tariffs

Apr 28, 2025 -

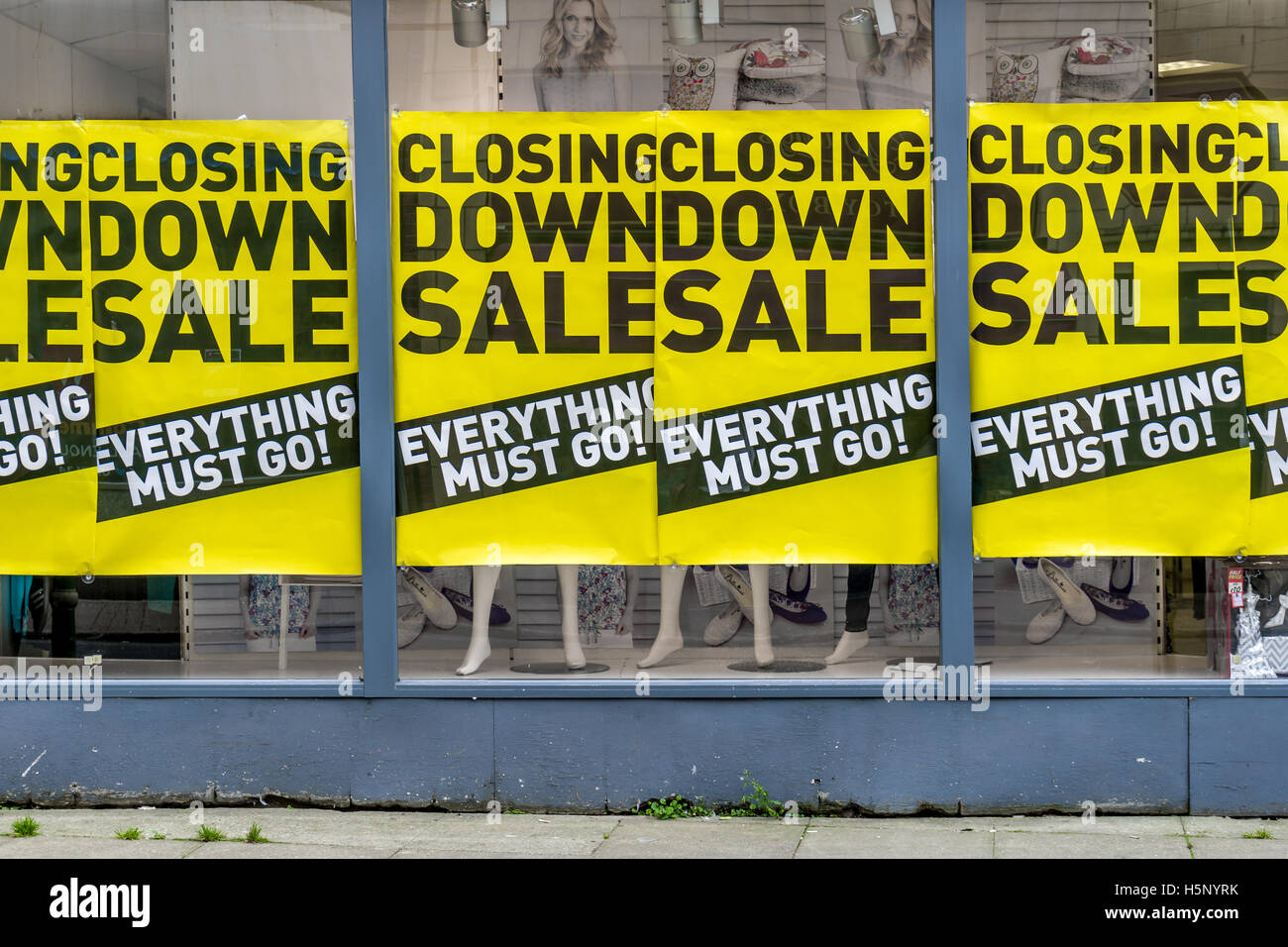

Hudsons Bay Store Closing Huge Discounts On Everything

Apr 28, 2025

Hudsons Bay Store Closing Huge Discounts On Everything

Apr 28, 2025 -

Shop The Hudsons Bay Liquidation Massive Savings Inside

Apr 28, 2025

Shop The Hudsons Bay Liquidation Massive Savings Inside

Apr 28, 2025 -

Closing Down Sale Hudsons Bay Offers Up To 70 Off

Apr 28, 2025

Closing Down Sale Hudsons Bay Offers Up To 70 Off

Apr 28, 2025 -

Hudsons Bay Liquidation Find Deep Discounts Now

Apr 28, 2025

Hudsons Bay Liquidation Find Deep Discounts Now

Apr 28, 2025