OpenAI's 2024 Developer Conference: Streamlined Voice Assistant Creation

New APIs and Tools for Simplified Voice Assistant Development

OpenAI's 2024 conference highlighted a suite of new APIs and tools designed to drastically reduce the complexity and time involved in voice assistant development. These advancements focus on three key areas: enhanced speech-to-text capabilities, advanced natural language understanding (NLU), and simplified integration with existing platforms.

Enhanced Speech-to-Text Capabilities

OpenAI unveiled significant improvements in its speech recognition technology. The new models are described as more accurate, even in challenging acoustic environments. This translates to:

- Increased accuracy: Fewer transcription errors reduce the need for extensive data cleaning and post-processing, which saves development time and makes the voice assistant more reliable.

- Multilingual support: Developers can target a broader, global audience without building a separate model for each language, which expands the potential reach of voice assistant applications.

- Improved noise reduction: The system filters out background noise more effectively, so transcriptions stay accurate in noisy, real-world settings. This is crucial for voice assistants that must work reliably in diverse environments.
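Accuracy claims like these are usually checked with word error rate (WER): the number of word-level edits needed to turn the transcript into a reference, divided by the number of reference words. The following is a minimal sketch for measuring it on your own test recordings. The function name and the simple lowercase, whitespace-based tokenization are assumptions made for illustration and are not part of any OpenAI tooling.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] is the edit distance between the reference words seen so far
    # (excluding the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # reference word missing from hypothesis
                curr[j - 1] + 1,     # extra word in hypothesis
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)
```

Running the same set of recordings through the old and new transcription pipeline and comparing the two WER values gives a concrete number to put behind "increased accuracy".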

Advanced Natural Language Understanding (NLU)

The conference showcased advances in OpenAI's NLU models, which are presented as handling complex queries, nuanced language, and contextual information more precisely than earlier versions. This means:

- Improved intent recognition: The voice assistant can better understand what the user needs and respond with more relevant information or actions.

- Enhanced entity extraction: The system pulls key information out of user queries more effectively, making it easier to perform specific tasks or give targeted responses.

- Contextual understanding: The voice assistant can maintain context throughout a conversation, which makes the interaction smoother and more natural.
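On the application side, maintaining context typically means keeping a rolling window of recent turns and sending it along with each new request. The sketch below shows one simple way to do that in plain Python. The class name, the `max_turns` limit, and the "User:/Assistant:" text layout are all hypothetical choices for illustration, not an OpenAI interface.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class ConversationContext:
    """Keeps the most recent turns so follow-up questions stay grounded."""
    max_turns: int = 5
    turns: deque = field(default_factory=deque)

    def add_turn(self, user_text: str, assistant_text: str) -> None:
        self.turns.append((user_text, assistant_text))
        # Drop the oldest turns once the window is full.
        while len(self.turns) > self.max_turns:
            self.turns.popleft()

    def build_prompt(self, new_user_text: str) -> str:
        """Render the stored turns plus the new utterance as one text block."""
        lines = []
        for user_text, assistant_text in self.turns:
            lines.append(f"User: {user_text}")
            lines.append(f"Assistant: {assistant_text}")
        lines.append(f"User: {new_user_text}")
        return "\n".join(lines)
```

With a window like this, a follow-up such as "and tomorrow?" reaches the language model together with the earlier weather question it refers to. The window size trades context quality against request size.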

Simplified Integration with Existing Platforms

OpenAI emphasized simplified integration with popular platforms and frameworks, a key factor in accelerating the development lifecycle. This includes:

- Seamless integration with cloud platforms: Support for AWS, Azure, and GCP makes deployment and scaling easier for developers.

- Pre-built components and templates: These reduce the amount of custom code required, so developers can focus on unique features rather than basic integrations.

- Improved documentation and support resources: Comprehensive documentation and readily available support make the process more accessible for developers of all skill levels.

Focus on Ethical Considerations and Responsible AI in Voice Assistant Design

OpenAI's commitment to responsible AI development was a prominent theme throughout the conference. The company highlighted its dedication to building ethical and inclusive voice assistants.

Bias Mitigation Techniques

OpenAI discussed strategies for mitigating biases in training data and models, aiming to create fairer and more inclusive voice assistants. This includes:

- Techniques for identifying and addressing bias in speech data: Ensuring the data used to train the models is representative and avoids perpetuating existing societal biases.

- Tools for evaluating and improving fairness in NLU models: Helping developers assess and address potential biases in their models' responses.

- Best practices for responsible data collection and usage: Guiding developers in ethically collecting, using, and protecting user data.
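One common way to evaluate fairness, independent of any particular vendor tool, is to compare a model's accuracy across speaker groups and flag large gaps. The sketch below assumes you already have labeled test records of the form (group, expected intent, predicted intent). The function names and record layout are hypothetical and do not represent the tools discussed at the conference.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, expected_label, predicted_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, expected, predicted in records:
        total[group] += 1
        if expected == predicted:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def largest_gap(scores):
    """Difference between the best- and worst-served group."""
    return max(scores.values()) - min(scores.values())
```

A large gap between groups suggests that the training or test data under-represents some speakers and that more data from those groups is worth collecting.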

Privacy and Security Enhancements

Protecting user privacy and data security is paramount. OpenAI outlined several enhancements focused on these critical areas:

- Enhanced encryption and secure data handling protocols: Ensuring user data is protected throughout its lifecycle.

- Methods for anonymizing user data: Protecting user privacy while still allowing for the analysis and improvement of the voice assistant's performance.

- Best practices for complying with data privacy regulations: Helping developers navigate the complex landscape of data privacy regulations.
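As an illustration of the data-handling ideas above, the sketch below replaces user IDs with keyed hashes and masks e-mail addresses and phone numbers in transcripts before they are stored for analysis. Strictly speaking this is pseudonymization and pattern-based redaction, not full anonymization. The regular expressions are deliberately simple assumptions that will miss some formats, and the function names are hypothetical.

```python
import hashlib
import hmac
import re

# Simplified patterns, assumed for illustration; real data needs broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def pseudonymize_user_id(user_id: str, secret_key: bytes) -> str:
    """Replace a user ID with a keyed SHA-256 digest (HMAC).

    The same ID and key always give the same token, so per-user analysis
    still works, but the ID cannot be recovered without the key.
    """
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def redact_transcript(text: str) -> str:
    """Mask e-mail addresses and phone numbers in a transcript."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)
```

Keeping the secret key separate from the stored transcripts matters here: anyone holding both could re-link tokens to users by hashing known IDs.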

Showcase of Innovative Voice Assistant Applications

The conference showcased real-world examples of streamlined voice assistant development using OpenAI's tools. This included successful implementations across diverse industries, highlighting the practical applications of these advancements.

- Examples in healthcare, finance, education, and entertainment: Demonstrating the versatility and broad applicability of OpenAI's technologies.

- Case studies highlighting cost savings and efficiency improvements: Showing the tangible benefits of using OpenAI's streamlined development tools.

- Success stories demonstrating the improved user experience: Highlighting the positive impact on user satisfaction and engagement.

Conclusion

OpenAI's 2024 Developer Conference has clearly charted a course towards streamlined voice assistant creation. The new APIs, tools, and focus on ethical considerations empower developers to build more accurate, efficient, and responsible voice interfaces. By leveraging these advancements, developers can unlock new possibilities and create innovative voice-powered applications that enhance user experiences across various sectors. Don't miss the opportunity to explore OpenAI's resources and begin your journey in streamlined voice assistant creation today!

Featured Posts

-

Ukrainskie Bezhentsy I S Sh A Ugroza Novogo Krizisa Dlya Germanii

May 10, 2025

Ukrainskie Bezhentsy I S Sh A Ugroza Novogo Krizisa Dlya Germanii

May 10, 2025 -

Transgender Experiences Under Trump Executive Orders

May 10, 2025

Transgender Experiences Under Trump Executive Orders

May 10, 2025 -

Wynne Evans Dropped From Go Compare Advert After Strictly Controversy

May 10, 2025

Wynne Evans Dropped From Go Compare Advert After Strictly Controversy

May 10, 2025 -

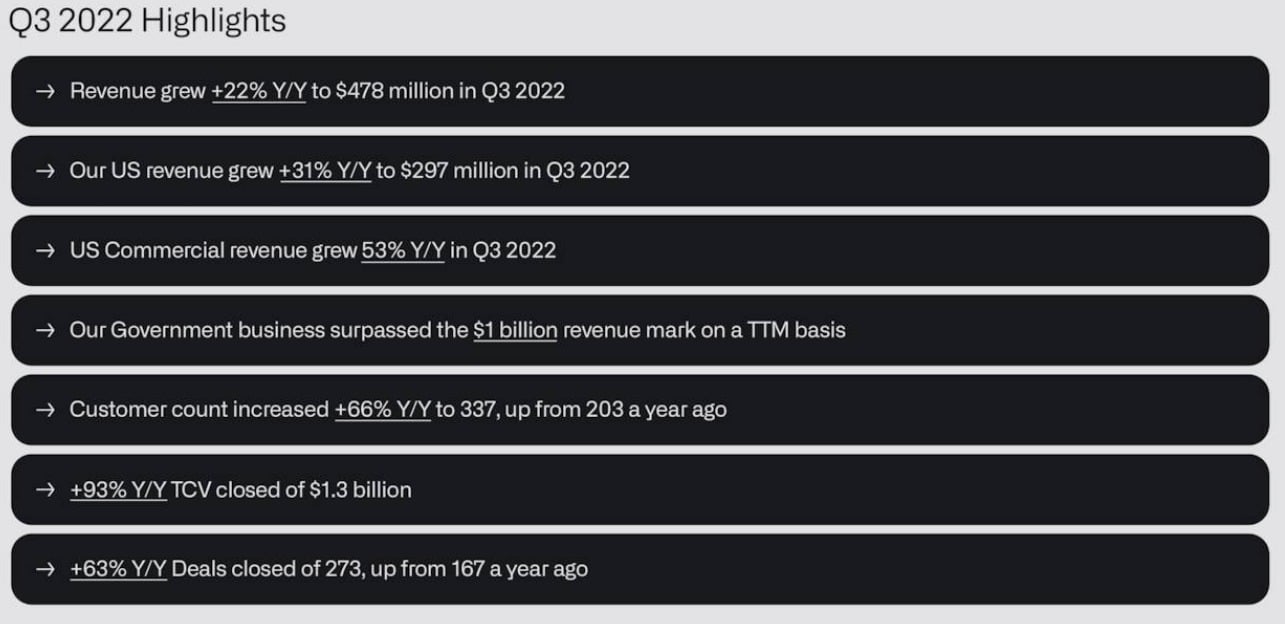

Palantir Stock Forecast Revised A Deep Dive Into The Market Shift

May 10, 2025

Palantir Stock Forecast Revised A Deep Dive Into The Market Shift

May 10, 2025 -

Why High Stock Valuations Shouldnt Deter Investors A Bof A Analysis

May 10, 2025

Why High Stock Valuations Shouldnt Deter Investors A Bof A Analysis

May 10, 2025