Building Voice Assistants: OpenAI's New Tools Unveiled

OpenAI's Whisper API: Revolutionizing Speech-to-Text Conversion for Voice Assistants

OpenAI's Whisper API provides the speech-to-text layer of a voice assistant: it converts recorded speech into text that the rest of the application can act on. More accurate and efficient transcription at this stage means fewer misunderstood commands and a better user experience.
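As a rough illustration of the speech-to-text step, the sketch below wraps a single transcription request. It assumes the official `openai` Python package (v1-style client) and the `whisper-1` model name. The helper name `transcribe_file` and the file name in the usage comment are hypothetical.

```python
from typing import Any, Optional


def transcribe_file(client: Any, path: str, language: Optional[str] = None) -> str:
    """Send one audio file to the transcription endpoint and return the text.

    `client` is assumed to be an `openai.OpenAI()` instance (v1-style SDK).
    `language` is an optional ISO-639-1 hint such as "en" or "es".
    """
    kwargs = {"model": "whisper-1"}  # assumed model name
    if language:
        kwargs["language"] = language
    with open(path, "rb") as audio:
        result = client.audio.transcriptions.create(file=audio, **kwargs)
    return result.text.strip()


# Usage (requires `pip install openai` and OPENAI_API_KEY in the environment):
#   from openai import OpenAI
#   print(transcribe_file(OpenAI(), "command.wav", language="en"))
```

Passing the client in as an argument keeps the helper easy to test with a stand-in object, so the rest of the assistant can be developed without spending API credits.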

Enhanced Accuracy and Multilingual Support

Whisper is presented as considerably more accurate than earlier speech recognition models, which means fewer transcription errors and a more reliable foundation for your voice assistant. Multilingual support is its other key advantage.

- Languages Supported: Whisper supports a wide range of languages, including English, Spanish, French, German, Mandarin, and many more. The constantly expanding list ensures broader accessibility and global reach for your voice assistant applications.

- Improved Handling of Accents and Background Noise: Whisper demonstrates robust performance even in challenging acoustic environments. Its ability to handle various accents and filter out background noise minimizes transcription errors, resulting in cleaner, more accurate voice data.

- Accuracy Improvements: Independent tests have reportedly shown accuracy improvements exceeding 15% over leading competitors in certain scenarios. Fewer transcription errors reduce the need for manual correction during development and give end users a more dependable assistant, which supports higher satisfaction and engagement.
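Accuracy claims like the ones above are usually expressed as word error rate (WER): the number of word-level substitutions, deletions, and insertions needed to turn the transcript into the reference, divided by the number of reference words. The following is a minimal sketch for checking a transcript against a hand-made reference. The function name and sample sentences are illustrative, and real evaluations typically normalize punctuation and numbers first.

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word error rate: word-level edit distance divided by reference length."""
    ref: List[str] = reference.lower().split()
    hyp: List[str] = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # prev[j] is the edit distance between the ref words seen so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    reference = "turn on the kitchen lights"
    hypothesis = "turn on the chicken light"
    # Two substituted words out of five reference words.
    print(f"WER: {word_error_rate(reference, hypothesis):.2f}")
```

Running the same recordings through two speech-to-text services and comparing their WER on your own audio is a more reliable guide than headline percentages.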

Cost-Effectiveness and Scalability

The Whisper API offers a cost-effective solution for developers. Its pricing model is designed to be scalable, allowing you to handle large volumes of voice data without breaking the bank.

- Competitive Pricing: Compared to other speech-to-text APIs, Whisper offers a competitive pricing structure, making it a financially viable option for projects of all sizes, from small-scale prototypes to large-scale deployments.

- Scalability and API Limits: The API is designed for scalability, allowing developers to easily increase processing capacity as needed. While there are API limits, they are designed to be flexible and accommodate growing demands. OpenAI provides clear documentation outlining these limits and potential strategies for optimization.

- Impact on Developer Budgets: The cost-effectiveness and scalability of Whisper allow developers to allocate their budgets more efficiently, spending more on development and innovation and less on infrastructure. This makes it accessible to a wider range of developers and projects.
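Two small helpers can make the budgeting and rate-limit points concrete. This is a sketch under stated assumptions: it assumes billing by the minute of audio, and the rate passed to `estimate_cost` is a placeholder that should come from OpenAI's current pricing page. `call_with_backoff` is a generic retry wrapper, not part of any SDK.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def estimate_cost(audio_minutes: float, price_per_minute: float) -> float:
    """Projected spend under per-minute billing (rate supplied by the caller)."""
    return round(audio_minutes * price_per_minute, 2)


def call_with_backoff(
    request: Callable[[], T],
    retry_on: Tuple[Type[BaseException], ...],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry `request` on the given exceptions, doubling the wait each time."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return request()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            delay = base_delay * (2 ** attempt)
            # Random jitter keeps many clients from retrying in lockstep.
            sleep(delay + random.uniform(0, delay / 2))
    raise RuntimeError("unreachable")
```

With the `openai` package, the tuple passed as `retry_on` would typically contain `openai.RateLimitError`, and `request` would be a zero-argument function that performs the API call.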

New Language Models for Natural and Engaging Voice Assistant Interactions

Beyond speech-to-text, OpenAI provides advanced language models that power more natural and engaging interactions with your voice assistant.

Improved Contextual Understanding

OpenAI's new language models excel in handling context, enabling more natural and flowing conversations. This is achieved through advancements in memory management and turn-taking capabilities.

- Examples of Improved Contextual Understanding: The models can understand the nuances of a conversation, remembering previous turns and relating them to the current query. For instance, if a user asks about the weather and then asks about outdoor activities, the assistant can intelligently connect these queries and provide relevant recommendations.

- Memory Management and Turn-Taking: Sophisticated memory management allows the model to maintain context even over extended conversations. This is coupled with advanced turn-taking mechanisms which ensure smooth and natural transitions between user queries and assistant responses.

- Technical Advancements: These improvements are driven by advancements in neural network architectures and training techniques, specifically designed to enhance conversational flow and understanding. This results in more human-like interactions.
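In practice, an application usually supplies conversational context by resending earlier turns with each request. The sketch below keeps a rolling window of messages in the role/content format used by OpenAI's chat completions endpoint. The `Conversation` class and `ask` helper are hypothetical names, `client` is assumed to be an `openai.OpenAI()` instance, and the model name is left to the caller.

```python
from typing import Any, Dict, List

Message = Dict[str, str]


class Conversation:
    """Keeps a rolling window of chat turns to resend with every request."""

    def __init__(self, system_prompt: str, max_turns: int = 10) -> None:
        if max_turns < 1:
            raise ValueError("max_turns must be at least 1")
        self.system: Message = {"role": "system", "content": system_prompt}
        self.turns: List[Message] = []
        self.max_turns = max_turns  # one turn = a user message plus the reply

    def messages(self) -> List[Message]:
        """System prompt followed by the retained turns, oldest first."""
        return [self.system] + self.turns

    def add(self, role: str, content: str) -> None:
        self.turns.append({"role": role, "content": content})
        # Drop the oldest messages once the window is full.
        self.turns = self.turns[-2 * self.max_turns:]


def ask(client: Any, model: str, convo: Conversation, user_text: str) -> str:
    """Send the user's text plus prior context; record and return the reply."""
    convo.add("user", user_text)
    response = client.chat.completions.create(model=model, messages=convo.messages())
    reply = response.choices[0].message.content
    convo.add("assistant", reply)
    return reply
```

Because the weather question is still in the message list when the user asks about outdoor activities, the model can connect the two. The window size trades context length against request cost.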

Personalized and Adaptive Responses

Create voice assistants that learn and adapt to individual users, delivering personalized responses based on their history and preferences.

- User Profiling and Adaptive Learning: The models can build user profiles based on interaction history, preferences, and usage patterns. This enables adaptive learning, allowing the assistant to personalize responses and recommendations over time.

- Features Enabling Personalization: This includes features such as remembering user names, preferences, and even their communication style to tailor future interactions.

- Enhanced User Experience: Personalization significantly improves the user experience, making the interaction feel more natural and intuitive. This, in turn, fosters greater user engagement and loyalty.
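One simple way to approximate this kind of personalization is for the application to store a small profile per user and fold it into the system prompt. The sketch below assumes that approach. `UserProfile`, its fields, and `build_system_prompt` are hypothetical names, not an OpenAI feature.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class UserProfile:
    """Hypothetical per-user record that the application stores between sessions."""
    name: Optional[str] = None
    style: str = "neutral"  # e.g. "brief", "casual", "formal"
    preferences: Dict[str, str] = field(default_factory=dict)

    def remember(self, key: str, value: str) -> None:
        self.preferences[key] = value


def build_system_prompt(profile: UserProfile) -> str:
    """Turn the stored profile into instructions for the language model."""
    lines = ["You are a helpful voice assistant. Keep answers short enough to speak aloud."]
    if profile.name:
        lines.append(f"Address the user as {profile.name}.")
    lines.append(f"Use a {profile.style} tone.")
    for key, value in sorted(profile.preferences.items()):
        lines.append(f"User preference: {key} = {value}.")
    return "\n".join(lines)


if __name__ == "__main__":
    profile = UserProfile(name="Sam", style="casual")
    profile.remember("temperature_unit", "celsius")
    print(build_system_prompt(profile))
```

Keeping the profile in your own storage also makes it straightforward to show users what has been remembered and to delete it on request.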

Simplified Development Tools and Resources for Building Voice Assistants

OpenAI provides a suite of tools and resources designed to simplify the development process for voice assistants.

Intuitive APIs and SDKs

OpenAI offers user-friendly APIs and SDKs that make integration seamless, regardless of your programming background.

- Available SDKs: SDKs are available in popular languages like Python and JavaScript, providing developers with familiar tools and environments.

- Documentation Quality and Tutorials: Comprehensive documentation and detailed tutorials are readily available, guiding developers through the integration process.

- Lowering the Barrier to Entry: These intuitive tools lower the barrier to entry for developers of all skill levels, making voice assistant development more accessible than ever before.
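To show how the pieces might fit together in application code, here is a minimal sketch of one assistant turn: audio in, text recognized, reply generated, speech out. The `VoiceAssistant` class is a hypothetical structure, and each stage is passed in as a plain callable, so any SDK call (or a stand-in during testing) can be plugged in.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoiceAssistant:
    """Wires three stages together; each stage is any callable with the given shape."""
    transcribe: Callable[[bytes], str]  # audio in -> recognized text
    respond: Callable[[str], str]       # recognized text -> reply text
    speak: Callable[[str], bytes]       # reply text -> synthesized audio

    def handle(self, audio: bytes) -> bytes:
        text = self.transcribe(audio).strip()
        if not text:
            # Nothing was recognized, so ask again instead of calling the model.
            return self.speak("Sorry, I didn't catch that.")
        return self.speak(self.respond(text))


if __name__ == "__main__":
    # Stand-in stages so the wiring can be exercised without network access.
    assistant = VoiceAssistant(
        transcribe=lambda audio: audio.decode("utf-8"),
        respond=lambda text: f"You said: {text}",
        speak=lambda text: text.encode("utf-8"),
    )
    print(assistant.handle(b"what time is it"))
```

Separating the stages this way lets you swap the speech-to-text or language model provider without touching the rest of the assistant.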

Comprehensive Documentation and Community Support

Access extensive resources, including detailed documentation, active forums, and a supportive community to accelerate your development process.

- Links to Documentation, Forums, and Support Channels: OpenAI provides links to comprehensive documentation, active community forums, and other support channels.

- Importance of Community Support: The community aspect is crucial, offering a platform for developers to share knowledge, troubleshoot problems, and learn from each other, accelerating development and fostering innovation.

Conclusion

OpenAI's new tools are poised to significantly impact the future of voice assistant development. The improved speech-to-text capabilities of Whisper, combined with sophisticated language models and simplified development tools, give developers the means to create more accurate, engaging, and personalized voice assistants. By leveraging these advancements, developers can build voice-enabled applications and services that change how we interact with technology. To start building your next-generation voice assistant, explore OpenAI's documentation and resources.

Featured Posts

-

Fortnite Lawless Update Server Problems And Downtime

May 02, 2025

Fortnite Lawless Update Server Problems And Downtime

May 02, 2025 -

Hasil Pertemuan Presiden Erdogan Dan Presiden Jokowi Penguatan Kerja Sama Bilateral

May 02, 2025

Hasil Pertemuan Presiden Erdogan Dan Presiden Jokowi Penguatan Kerja Sama Bilateral

May 02, 2025 -

Fortnite Down Chapter 6 Season 2 Server Downtime And Maintenance

May 02, 2025

Fortnite Down Chapter 6 Season 2 Server Downtime And Maintenance

May 02, 2025 -

Sdr Azad Kshmyr Brtanwy Arkan Parlyman Ne Kshmyr Ke Msyle Ke Hl Ky Hmayt Ky

May 02, 2025

Sdr Azad Kshmyr Brtanwy Arkan Parlyman Ne Kshmyr Ke Msyle Ke Hl Ky Hmayt Ky

May 02, 2025 -

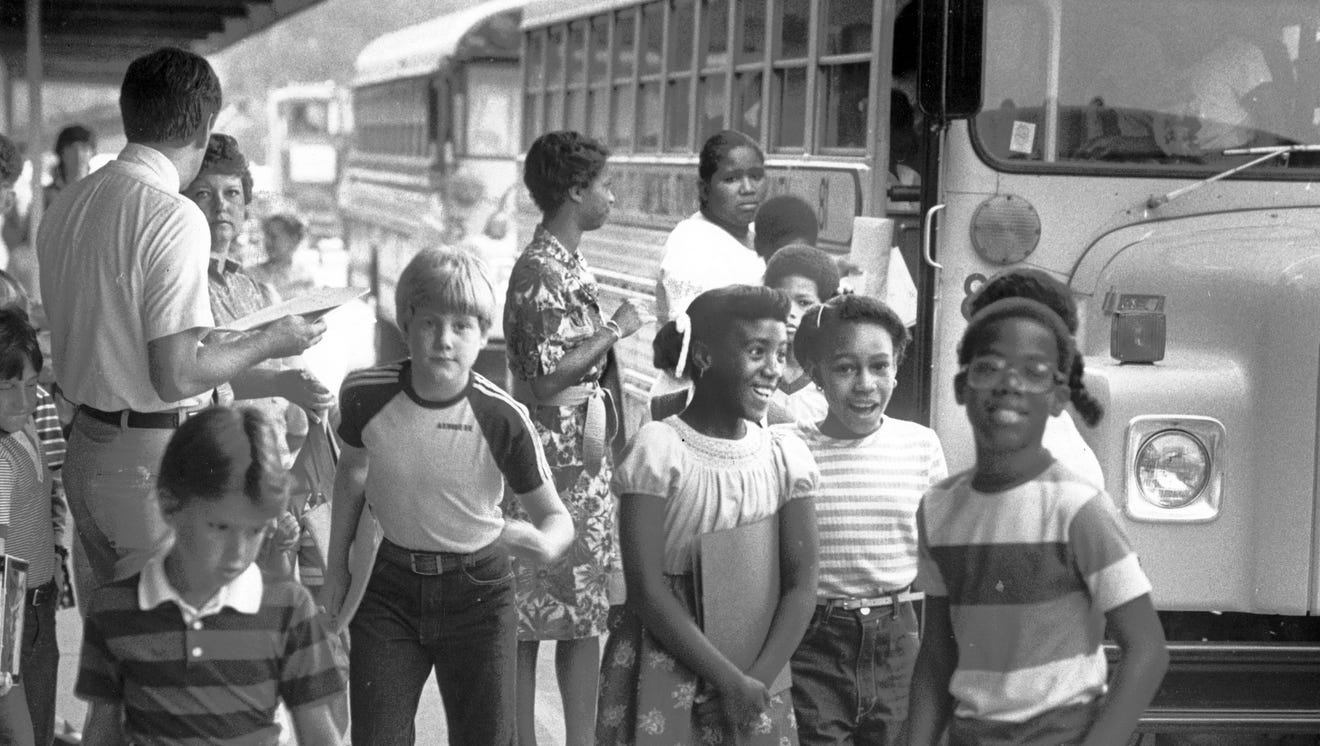

School Desegregation Order Terminated Analysis And Potential Fallout

May 02, 2025

School Desegregation Order Terminated Analysis And Potential Fallout

May 02, 2025

Latest Posts

-

Bbc Issues Warning Unprecedented Difficulties After 1bn Revenue Loss

May 02, 2025

Bbc Issues Warning Unprecedented Difficulties After 1bn Revenue Loss

May 02, 2025 -

Unprecedented Problems For Bbc Following 1bn Income Reduction

May 02, 2025

Unprecedented Problems For Bbc Following 1bn Income Reduction

May 02, 2025 -

Bbc Funding Crisis 1bn Drop Triggers Unprecedented Challenges

May 02, 2025

Bbc Funding Crisis 1bn Drop Triggers Unprecedented Challenges

May 02, 2025 -

Bbcs 1bn Income Drop Unprecedented Challenges Ahead

May 02, 2025

Bbcs 1bn Income Drop Unprecedented Challenges Ahead

May 02, 2025 -

See James B Partridge Stroud And Cheltenham Concert Dates

May 02, 2025

See James B Partridge Stroud And Cheltenham Concert Dates

May 02, 2025