Building Voice Assistants Made Easy: Key Announcements From OpenAI's 2024 Developer Conference

Simplified Voice Assistant Development with OpenAI's New APIs

OpenAI unveiled a suite of new APIs designed to streamline the process of building voice assistants. By reducing development time and complexity, these APIs make it easier to create powerful, engaging voice experiences.

Streamlined Natural Language Processing (NLP)

The new APIs offer substantial improvements in both speech-to-text and text-to-speech capabilities.

- Improved Speech-to-Text: The enhanced Whisper API offers higher accuracy, particularly in noisy environments and with diverse accents. It also supports a wider range of languages, expanding the potential reach of your voice assistant.

- Advanced Text-to-Speech: The new text-to-speech (TTS) API produces more natural-sounding speech with improved intonation and emotional expression, making interactions feel more engaging and human-like.

Integrating these APIs is straightforward. OpenAI provides documentation and code samples in popular programming languages such as Python and JavaScript. Developers can use pre-trained models for rapid prototyping or customize models for specialized applications, tailoring their solutions to specific needs while maintaining efficiency.
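Conceptually, each voice interaction chains three steps: transcribe the user's speech, generate a reply, and speak that reply aloud. The sketch below shows that loop as a small pipeline under stated assumptions. It is not OpenAI's sample code: `VoiceTurnPipeline`, `TurnResult`, and the `fake_*` functions are hypothetical names. In a real application, `transcribe` might wrap the official `openai` Python package's `client.audio.transcriptions.create(...)` and `synthesize` its `client.audio.speech.create(...)`. Passing the stages in as plain functions keeps the example runnable offline.

```python
from dataclasses import dataclass
from typing import Callable

# Signatures for the three stages of one voice turn (hypothetical names).
Transcriber = Callable[[bytes], str]   # audio in -> transcript
Responder = Callable[[str], str]       # transcript -> reply text
Synthesizer = Callable[[str], bytes]   # reply text -> audio


@dataclass
class TurnResult:
    transcript: str
    reply_text: str
    reply_audio: bytes


@dataclass
class VoiceTurnPipeline:
    """Runs one speech-to-text -> reply -> text-to-speech turn.

    The stages are injected as plain functions so the pipeline can be
    exercised offline with fakes; in production each would wrap a real call.
    """
    transcribe: Transcriber
    respond: Responder
    synthesize: Synthesizer

    def run(self, audio: bytes) -> TurnResult:
        transcript = self.transcribe(audio).strip()
        if transcript:
            reply = self.respond(transcript)
        else:
            # Nothing intelligible was transcribed: ask the user to repeat.
            reply = "Sorry, I didn't catch that. Could you say it again?"
        return TurnResult(transcript, reply, self.synthesize(reply))


# Offline fakes standing in for the speech and language model calls.
def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_respond(text: str) -> str:
    return f"You said: {text}"


def fake_synthesize(text: str) -> bytes:
    return text.encode("utf-8")


if __name__ == "__main__":
    pipeline = VoiceTurnPipeline(fake_transcribe, fake_respond, fake_synthesize)
    print(pipeline.run(b"what's the weather").reply_text)
```

Replacing the fakes with functions that call real services leaves the rest of the pipeline unchanged. That independence is the main benefit of injecting the stages.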

Enhanced Contextual Understanding and Dialogue Management

OpenAI has made significant strides in improving the contextual understanding and dialogue management capabilities of its voice assistant tools.

- Improved Context Handling: The new APIs effectively manage the context of conversations, remembering previous turns and allowing for more natural, flowing interactions. This significantly reduces the need for repetitive prompts and allows for more complex and nuanced conversations.

- Advanced Intent Recognition: The improved intent recognition system accurately identifies user requests, even with ambiguous or incomplete phrasing. This enables voice assistants to respond appropriately to a wider range of user inputs.

- Memory Management: Advanced memory management features allow the voice assistant to retain information across multiple turns, facilitating more complex tasks and personalized experiences.

These advancements enable developers to build voice assistants that handle complex requests and maintain context throughout extended conversations, making interactions more intuitive and satisfying. For example, a user could ask for directions and then ask about nearby restaurants, all within a single, natural conversation.
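Whichever layer is responsible for it, conversational context ultimately depends on the model seeing the earlier turns. One common application-side pattern is to resend a bounded window of recent turns with every request. The minimal sketch below assumes a chat-style API that accepts a list of `{"role": ..., "content": ...}` messages, which is the shape used by OpenAI's Chat Completions endpoint. `ConversationMemory` and its fixed `max_turns` window are illustrative choices. They do not describe how OpenAI's new APIs manage context internally.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple

Message = Dict[str, str]


class ConversationMemory:
    """Keeps a system prompt plus the most recent user/assistant exchanges.

    Messages use the {"role": ..., "content": ...} shape that chat-style model
    APIs accept. Once max_turns is exceeded the oldest exchange is dropped,
    a simple way to keep the prompt bounded.
    """

    def __init__(self, system_prompt: str, max_turns: int = 10) -> None:
        self.system: Message = {"role": "system", "content": system_prompt}
        # deque(maxlen=...) discards the oldest entry automatically when full.
        self.turns: Deque[Tuple[str, str]] = deque(maxlen=max_turns)

    def add_turn(self, user_text: str, assistant_text: str) -> None:
        self.turns.append((user_text, assistant_text))

    def build_messages(self, new_user_text: str) -> List[Message]:
        """Returns the message list to send along with the next user request."""
        messages: List[Message] = [self.system]
        for user_text, assistant_text in self.turns:
            messages.append({"role": "user", "content": user_text})
            messages.append({"role": "assistant", "content": assistant_text})
        messages.append({"role": "user", "content": new_user_text})
        return messages


if __name__ == "__main__":
    memory = ConversationMemory("You are a helpful travel assistant.")
    memory.add_turn("How do I get to the museum?", "Walk two blocks north, then turn left.")
    # The follow-up only makes sense because the earlier turn is still in context.
    for message in memory.build_messages("Any good restaurants near there?"):
        print(message["role"], "->", message["content"])
```

Dropping whole exchanges keeps every user message paired with its answer. A production assistant might instead summarize older turns rather than discard them.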

Pre-built Modules for Common Voice Assistant Features

To further accelerate development, OpenAI is offering a range of pre-built modules for common voice assistant features. These modules can be easily integrated into your application, saving significant development time and reducing code complexity.

Ready-to-use Components for Faster Development

These pre-built modules provide ready-to-use functionality for a wide array of common tasks:

- Calendar Integration: Seamlessly integrate with calendar applications to schedule appointments, set reminders, and manage events.

- Music Playback Control: Control music playback services like Spotify or Apple Music via voice commands.

- Weather Updates: Provide real-time weather information based on location.

- News Updates: Deliver current news headlines from trusted sources.

Relying on these modules for foundational features speeds up prototyping and can make applications more reliable. Developers can then focus on the unique features that differentiate their voice assistants instead of rebuilding common components.
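A common way to combine such modules is to place a small router in front of them, so that each recognized request is dispatched to the matching feature. The sketch below illustrates the idea. `ModuleRouter`, `build_demo_router`, and the keyword lists are hypothetical, and the handlers only return placeholder strings. They do not call real calendar, music, weather, or news services. Keyword matching stands in for genuine intent recognition here.

```python
from typing import Callable, Dict, List, Optional, Tuple

# A module handler takes the user's utterance and returns a reply (hypothetical).
Handler = Callable[[str], str]


class ModuleRouter:
    """Dispatches an utterance to the first registered module whose keywords match.

    Keyword matching is a stand-in for real intent recognition; a production
    assistant would more likely let the model choose the intent (for example via
    tool/function calling) and then invoke the matching module.
    """

    def __init__(self, fallback: Handler) -> None:
        self._modules: Dict[str, Tuple[List[str], Handler]] = {}
        self._fallback = fallback

    def register(self, name: str, keywords: List[str], handler: Handler) -> None:
        self._modules[name] = ([k.lower() for k in keywords], handler)

    def match(self, utterance: str) -> Optional[str]:
        text = utterance.lower()
        # Dicts preserve insertion order, so earlier registrations win ties.
        for name, (keywords, _) in self._modules.items():
            if any(keyword in text for keyword in keywords):
                return name
        return None

    def handle(self, utterance: str) -> str:
        name = self.match(utterance)
        if name is None:
            return self._fallback(utterance)
        _, handler = self._modules[name]
        return handler(utterance)


def build_demo_router() -> ModuleRouter:
    """Wires up placeholder handlers for the four modules described above."""
    router = ModuleRouter(fallback=lambda text: "Sorry, I can't help with that yet.")
    router.register("calendar", ["schedule", "remind", "meeting"], lambda t: "Opening your calendar.")
    router.register("music", ["play", "pause", "skip"], lambda t: "Updating playback.")
    router.register("weather", ["weather", "forecast", "rain"], lambda t: "Checking the forecast.")
    router.register("news", ["news", "headlines"], lambda t: "Here are today's headlines.")
    return router


if __name__ == "__main__":
    router = build_demo_router()
    print(router.handle("Will it rain tomorrow?"))
```

The substring matching here is deliberately naive. For example, "play" would also match "display." The structure still carries over: the router handles dispatch and each module stays independent, so modules can be added or replaced without touching the others.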

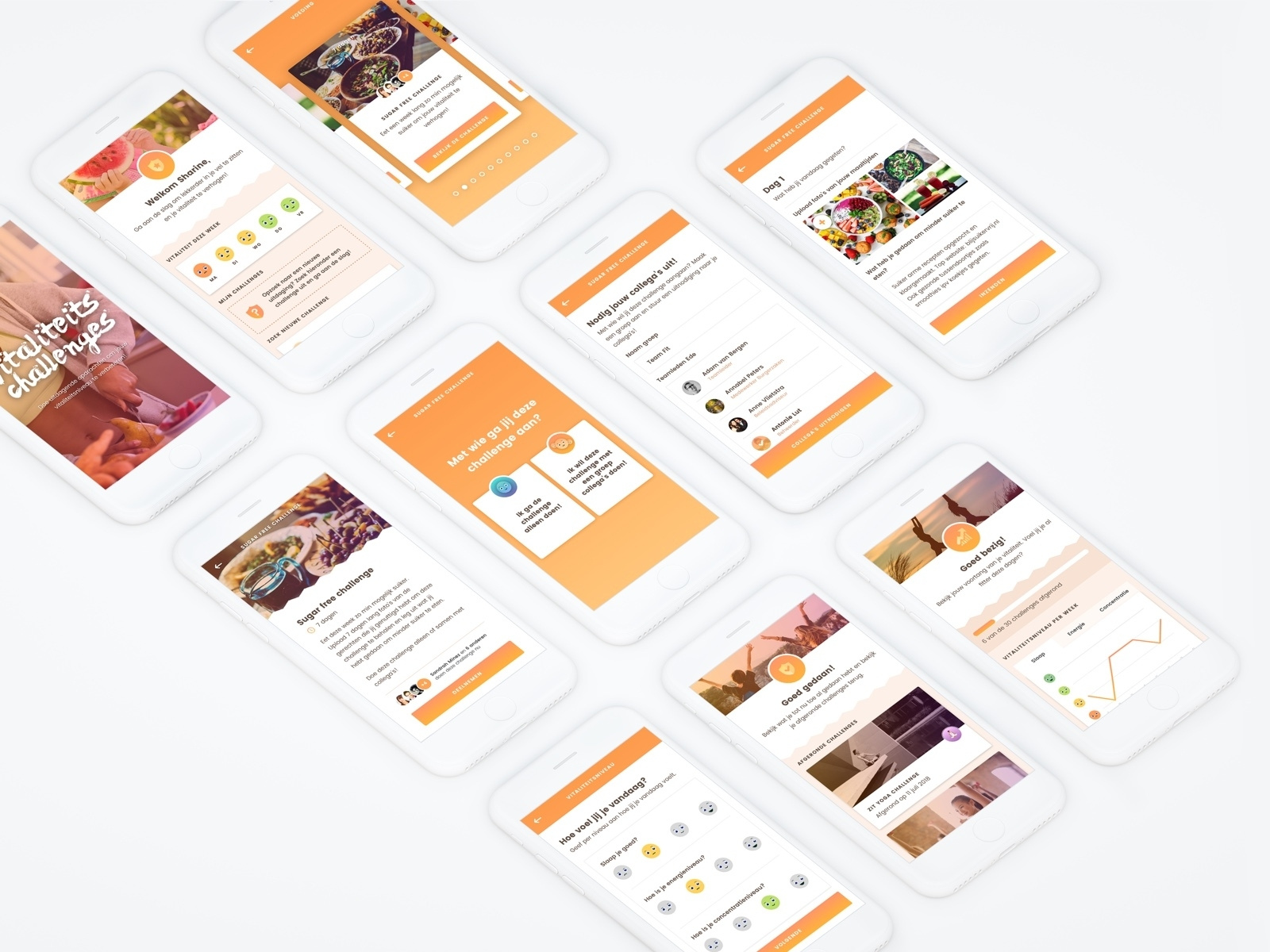

Customization Options for Unique Voice Assistant Personalities

OpenAI recognizes the importance of brand identity and user experience. Developers can now extensively customize the voice, tone, and responses of their voice assistants.

- Voice Customization: Select from a range of voices or even create custom voice profiles tailored to your application.

- Tone Adjustment: Fine-tune the tone of the voice assistant to reflect the brand's personality – playful, professional, or anything in between.

- Response Customization: Design custom responses for specific scenarios and user requests.

These options let developers give their voice assistants distinct personalities that resonate with their target audiences, and a well-defined personality can increase user engagement and overall satisfaction. For example, a children's game might call for a playful assistant, while a business application calls for a professional, efficient one.
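A persona can be expressed as a small bundle of settings: a voice, a tone that steers the model's wording, and hand-written replies for specific scenarios. The sketch below shows that arrangement under stated assumptions. The `Persona` class, its fields, and the prompt wording are hypothetical. Steering tone through a system prompt is one common approach, not necessarily how OpenAI's customization features work. `alloy` and `nova` are preset voice names in OpenAI's TTS API, but you should check the current voice list before relying on them.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Persona:
    """Hypothetical bundle of the settings that shape an assistant's personality."""
    name: str
    tone: str                 # e.g. "playful" or "professional"
    voice: str = "alloy"      # preset TTS voice name; check the current voice list
    scripted: Dict[str, str] = field(default_factory=dict)  # scenario -> fixed reply

    def system_prompt(self) -> str:
        """Turns the persona into instructions for the language model."""
        return (
            f"You are {self.name}, a voice assistant. "
            f"Respond in a {self.tone} tone and keep answers short enough to speak aloud."
        )

    def scripted_reply(self, scenario: str) -> Optional[str]:
        """Returns a hand-written response for a known scenario, if one exists."""
        return self.scripted.get(scenario)


KIDS_GAME = Persona(
    name="Pip",
    tone="playful, encouraging",
    voice="nova",
    scripted={"greeting": "Hiya! Ready for another adventure?"},
)

BUSINESS = Persona(
    name="Atlas",
    tone="professional, concise",
    scripted={"greeting": "Good morning. How can I help?"},
)


if __name__ == "__main__":
    for persona in (KIDS_GAME, BUSINESS):
        print(persona.voice, "|", persona.scripted_reply("greeting"))
        print(persona.system_prompt())
```

Scripted replies are a good fit for moments where the wording must be exact, such as greetings or legal notices. Everything else can be generated in the persona's tone.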

Improved Accessibility and Multilingual Support

OpenAI is committed to creating inclusive technologies. The new tools offer expanded language support and accessibility features to reach a wider audience.

Expanding Reach with Broader Language Support

OpenAI's voice assistant tools now support a significantly broader range of languages, including (list specific languages added). This expansion dramatically increases global accessibility and opens up new market opportunities for developers.

The underlying technical improvements include advanced language models trained on massive multilingual datasets and innovative techniques for handling low-resource languages.
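Even when the underlying models cover many languages, an application usually enables only the languages it has tested. It then needs a rule for choosing the reply language. The minimal sketch below is one such rule. It assumes the app already has a BCP 47-style tag (such as `pt-BR`) from some detection step. The function name and the fallback order (exact tag, then base language, then a default) are illustrative assumptions.

```python
from typing import Iterable, Optional


def choose_reply_language(
    detected: Optional[str], enabled: Iterable[str], default: str = "en"
) -> str:
    """Picks the language the assistant should answer in.

    detected: a language tag such as "pt-BR" or "es" from whatever detection
    step the app uses (hypothetical input; normalize it to tags first).
    enabled: tags the app has actually tested and turned on.
    """
    enabled_tags = {tag.lower() for tag in enabled}
    if detected:
        tag = detected.lower().replace("_", "-")
        if tag in enabled_tags:
            return tag               # exact match, e.g. "pt-br"
        base = tag.split("-")[0]
        if base in enabled_tags:
            return base              # regional variant falls back to its base language
    return default                   # unknown or unsupported: use the default


if __name__ == "__main__":
    print(choose_reply_language("pt-BR", ["en", "pt"]))
```

Returned tags are lowercased. Normalize them again if a downstream service expects a specific casing.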

Accessibility Features for Diverse User Needs

The new tools also include features designed to improve accessibility for users with disabilities:

- Text-Based Interactions: Allow users to interact with the voice assistant via text input as an alternative to voice commands.

- Assistive Technology Support: Ensure compatibility with various assistive technologies, providing a more accessible experience for users with visual or auditory impairments.

Building accessible voice assistants is crucial for inclusivity, and OpenAI's focus on accessibility helps make its tools usable by a wider range of people.
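Supporting text-based interaction largely means giving typed and spoken input one shared path through the assistant. The minimal sketch below takes that approach. It assumes `transcribe`, `respond`, and `synthesize` functions that an app would wire to its speech and language services. `TextInput`, `AudioInput`, `Reply`, and `handle_input` are hypothetical names. The reply is always returned as text and audio is optional, which is one way to keep output usable by screen readers and captions.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Union


@dataclass
class TextInput:
    text: str            # typed by the user, e.g. via keyboard or switch access


@dataclass
class AudioInput:
    audio: bytes         # recorded speech


@dataclass
class Reply:
    text: str                 # always returned, so captions and screen readers can use it
    audio: Optional[bytes]    # only synthesized when spoken output is wanted


def handle_input(
    user_input: Union[TextInput, AudioInput],
    transcribe: Callable[[bytes], str],
    respond: Callable[[str], str],
    synthesize: Callable[[str], bytes],
    speak: bool = True,
) -> Reply:
    """Routes typed and spoken input through the same conversation logic."""
    if isinstance(user_input, TextInput):
        query = user_input.text.strip()
    elif isinstance(user_input, AudioInput):
        query = transcribe(user_input.audio).strip()
    else:
        raise TypeError(f"unsupported input: {type(user_input).__name__}")
    reply_text = respond(query)
    return Reply(reply_text, synthesize(reply_text) if speak else None)


if __name__ == "__main__":
    reply = handle_input(
        TextInput("What's on my calendar today?"),
        transcribe=lambda audio: audio.decode("utf-8"),
        respond=lambda text: f"Looking up: {text}",
        synthesize=lambda text: text.encode("utf-8"),
        speak=False,  # e.g. a user who prefers on-screen text only
    )
    print(reply.text, reply.audio)
```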

Conclusion: Revolutionizing Voice Assistant Development with OpenAI

OpenAI's announcements at its 2024 Developer Conference have fundamentally changed the landscape of voice assistant development. The new APIs, pre-built modules, and expanded accessibility features make it easier, faster, and more efficient to create powerful and engaging voice experiences. Key takeaways include streamlined NLP capabilities, significantly improved dialogue management, customizable personalities, and enhanced multilingual support. This translates to reduced development time, reduced costs, and the ability to reach a wider audience.

Start building your own voice assistant today with OpenAI's powerful new tools and resources! Learn more at [link to relevant OpenAI resources].

Featured Posts

-

Follow The F1 Monaco Grand Prix Live Real Time Timing Data

May 26, 2025

Follow The F1 Monaco Grand Prix Live Real Time Timing Data

May 26, 2025 -

Mzahrat Hashdt Btl Abyb Llmtalbt Bitlaq Srah Alasra

May 26, 2025

Mzahrat Hashdt Btl Abyb Llmtalbt Bitlaq Srah Alasra

May 26, 2025 -

Spectator Confesses To Throwing Bottle At Mathieu Van Der Poel In Paris Roubaix

May 26, 2025

Spectator Confesses To Throwing Bottle At Mathieu Van Der Poel In Paris Roubaix

May 26, 2025 -

Bottle Thrown At Mathieu Van Der Poel During Paris Roubaix Legal Action Underway

May 26, 2025

Bottle Thrown At Mathieu Van Der Poel During Paris Roubaix Legal Action Underway

May 26, 2025 -

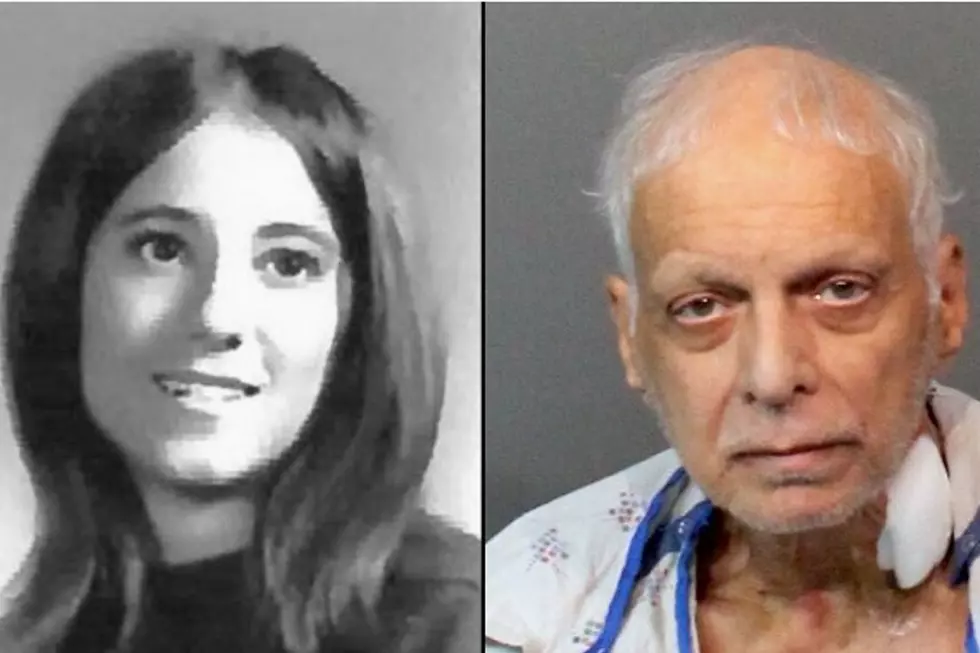

19 Year Old Cold Case Georgia Man Arrested For Wifes Murder Nanny Missing

May 26, 2025

19 Year Old Cold Case Georgia Man Arrested For Wifes Murder Nanny Missing

May 26, 2025

Latest Posts

-

Manila Bays Vitality Challenges And Opportunities For Long Term Health

May 30, 2025

Manila Bays Vitality Challenges And Opportunities For Long Term Health

May 30, 2025 -

The Future Of Manila Bay A Look At Its Vibrancy And Sustainability

May 30, 2025

The Future Of Manila Bay A Look At Its Vibrancy And Sustainability

May 30, 2025 -

Manila Bay A Vibrant Ecosystem How Long Will It Last

May 30, 2025

Manila Bay A Vibrant Ecosystem How Long Will It Last

May 30, 2025 -

Recent Toxic Algae Bloom Assessing The Damage To Californias Marine Wildlife

May 30, 2025

Recent Toxic Algae Bloom Assessing The Damage To Californias Marine Wildlife

May 30, 2025 -

Combating The Killer Seaweed Protecting Australias Marine Biodiversity

May 30, 2025

Combating The Killer Seaweed Protecting Australias Marine Biodiversity

May 30, 2025