Why AI Isn't Truly Learning: A Guide To Responsible AI Implementation

AI learning, in its simplest form, refers to a machine's ability to improve its performance on a specific task through experience. In practice this means machine learning (ML), in which algorithms identify patterns in data, and its subfield deep learning (DL), which uses multi-layer artificial neural networks to model complex data. However, this "learning" differs fundamentally from human learning, which involves understanding, reasoning, and adaptation across diverse contexts. That distinction is at the heart of why responsible AI implementation is so crucial.

The Limitations of Current AI Learning Models

Current AI learning models, despite their impressive capabilities, suffer from significant limitations that hinder their ability to truly "learn" like humans.

Data Dependency and Bias

AI models rely heavily on the data they are trained on. This dependency creates a significant vulnerability: if the data is biased, the model tends to reproduce that bias in its outputs, and can even amplify it. This is a central issue in AI ethics.

- Examples of biased AI: Facial recognition systems exhibiting higher error rates for people with darker skin tones; loan application algorithms discriminating against certain demographic groups.

- Mitigating bias: Achieving responsible AI development requires careful attention to data diversity and pre-processing techniques. This includes actively seeking diverse datasets and employing algorithms designed to identify and mitigate biases. Addressing algorithmic bias is essential for fair and equitable AI systems.
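One simple way to make the bias described above measurable is to compare a model's error rate across demographic groups. The following is a minimal sketch, not a full fairness audit: the group names, the records, and the helper functions `error_rate_by_group` and `max_error_gap` are all hypothetical and exist only for illustration.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Return {group: error rate} for (group, true_label, predicted_label) records."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, y_true, y_pred in records:
        totals[group] += 1
        if y_true != y_pred:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def max_error_gap(rates):
    """Largest difference in error rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical audit records: (group, true label, predicted label).
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 0), ("group_b", 0, 0),
]

rates = error_rate_by_group(records)
gap = max_error_gap(rates)
print(rates)  # {'group_a': 0.0, 'group_b': 0.5}
print(gap)    # 0.5
```

A large gap does not by itself explain where the bias comes from, but it flags that the model's mistakes are unevenly distributed and that the training data deserves a closer look.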

Lack of Generalization and Transfer Learning

Most AI models struggle to generalize their knowledge to new, unseen situations or domains. Transfer learning aims to address this by reusing knowledge learned in one domain in another, but it tends to work well only when the two domains are closely related.

- An AI trained to identify cats in images might fail to recognize a cat in a video due to differences in visual context.

- Human learning, in contrast, allows for greater adaptability and generalization. We can readily apply knowledge learned in one context to entirely new and unforeseen situations. This adaptability is currently absent in most AI systems. The limitations of transfer learning highlight this fundamental difference.
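The generalization problem can be illustrated with a toy model whose inputs change between training and deployment. The sketch below assumes a deliberately simple one-feature nearest-centroid classifier and made-up numbers; the "shift" of +2.0 stands in for a change of context such as moving from still images to video frames. None of this comes from a real system.

```python
def fit_centroids(samples):
    """samples: list of (feature, label). Returns {label: mean feature value}."""
    sums, counts = {}, {}
    for x, y in samples:
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}


def predict(centroids, x):
    """Assign x to the label whose centroid is closest."""
    return min(centroids, key=lambda y: abs(x - centroids[y]))


def accuracy(centroids, samples):
    correct = sum(1 for x, y in samples if predict(centroids, x) == y)
    return correct / len(samples)


# Hypothetical training data: one numeric feature per example.
train = [(0.8, "cat"), (1.0, "cat"), (1.2, "cat"),
         (2.8, "other"), (3.0, "other"), (3.2, "other")]
centroids = fit_centroids(train)

# Test data drawn from the same conditions as training.
in_distribution = [(0.9, "cat"), (1.1, "cat"), (2.9, "other"), (3.1, "other")]
# The same examples after the measurement conditions change.
shifted = [(x + 2.0, y) for x, y in in_distribution]

print(accuracy(centroids, in_distribution))  # 1.0
print(accuracy(centroids, shifted))          # 0.5
```

The model has not "forgotten" anything; it simply never learned what a cat is, only where cat-like numbers fell in the training data.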

Absence of True Understanding and Reasoning

Current AI models lack genuine understanding of the information they process. They operate based on statistical correlations, not comprehension. This is a major distinction between AI and human intelligence.

- Correlation vs. Causation: An AI might identify a correlation between ice cream sales and crime rates, but it won't understand that neither causes the other: both tend to rise during warmer months.

- Explainable AI (XAI) aims to make the reasoning behind AI decisions visible to humans, so that spurious correlations like this one can be spotted. Symbolic AI approaches attempt to move beyond statistical correlations toward more explicit representations of knowledge. Improving AI reasoning capabilities is a crucial area for future research.
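The ice cream example can be reproduced with synthetic data. In the sketch below, temperature drives both ice cream sales and crime, and the two have no direct link. All coefficients and noise levels are invented for illustration. The raw correlation comes out strongly positive, while the correlation that remains after removing the effect of temperature (a partial correlation) should be close to zero.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def residuals(ys, xs):
    """What is left of ys after subtracting a least-squares line fit on xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return [y - (my + slope * (x - mx)) for x, y in zip(xs, ys)]


rng = random.Random(0)  # fixed seed so the run is repeatable

# Synthetic data: temperature is the hidden common cause (assumed values).
temperature = [rng.uniform(0, 30) for _ in range(2000)]
ice_cream = [2.0 * t + rng.gauss(0, 5) for t in temperature]
crime = [1.0 * t + rng.gauss(0, 5) for t in temperature]

raw = pearson(ice_cream, crime)
partial = pearson(residuals(ice_cream, temperature),
                  residuals(crime, temperature))

print(f"raw correlation:                     {raw:.2f}")
print(f"after controlling for temperature:   {partial:.2f}")
```

A purely correlational model sees only the first number. Knowing to control for temperature requires outside knowledge about how the world works, which is exactly what such a model lacks.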

The Ethical Implications of Misrepresenting AI Capabilities

Overstating the capabilities of current AI systems has significant ethical implications.

Overreliance and Automation Bias

Overreliance on AI systems can lead to human error and reduced critical thinking. Automation bias, the tendency to favor automated system suggestions even when they are incorrect, is a serious concern.

- Examples of automation bias: Pilots ignoring their own judgment and relying on flawed autopilot systems; medical professionals relying solely on AI diagnostic tools without considering other factors.

- Maintaining human oversight and critical thinking is essential for mitigating the risks associated with automation bias and ensuring AI safety.
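One common pattern for keeping a human in the loop is to act automatically only on high-confidence predictions and to route everything else to a reviewer. The sketch below assumes the model exposes a confidence score between 0 and 1; the function name `route_prediction` and the 0.9 threshold are hypothetical choices, not a recommended setting.

```python
def route_prediction(label, confidence, threshold=0.9):
    """Accept confident predictions automatically; send the rest to a human.

    Returns a (decision, label) tuple, where decision is "auto" or
    "human_review". The threshold is an assumed, tunable value.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= threshold:
        return ("auto", label)
    return ("human_review", label)


print(route_prediction("benign", 0.97))     # ('auto', 'benign')
print(route_prediction("malignant", 0.62))  # ('human_review', 'malignant')
```

A threshold alone does not remove automation bias, because a model can be confidently wrong. It works best alongside periodic human audits of the automatically accepted cases.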

Transparency and Explainability

Transparency and explainability are crucial for building trust and accountability in AI systems. However, making complex AI models understandable is a significant challenge.

- The "black box" nature of some AI algorithms makes it difficult to understand their decision-making processes.

- Explainable AI (XAI) techniques aim to address this by providing insights into how AI systems arrive at their conclusions. This transparency is essential for responsible AI deployment.
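Permutation importance is one widely used, model-agnostic XAI technique: shuffle one input feature at a time and measure how much the model's accuracy drops. The sketch below is a minimal pure-Python version under stated assumptions. The `black_box` function is a stand-in for an opaque model, and the data is randomly generated. It is not the API of any particular library.

```python
import random


def black_box(row):
    """Stand-in for an opaque model: only the first feature matters."""
    return 1 if row[0] > 0.5 else 0


def accuracy(model, rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, n_features, rng):
    """Accuracy drop when each feature column is shuffled in turn."""
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)  # break the link between feature j and the label
        permuted = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(baseline - accuracy(model, permuted, labels))
    return importances


rng = random.Random(0)  # fixed seed so the run is repeatable
rows = [[rng.random(), rng.random()] for _ in range(500)]
labels = [black_box(r) for r in rows]  # labels the model predicts perfectly

importances = permutation_importance(black_box, rows, labels, 2, rng)
print(importances)  # a large drop for feature 0, exactly 0.0 for feature 1
```

Even without opening the model, the result shows which inputs its decisions depend on. This is a partial view of how the model behaves, not a full account of its reasoning.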

Job Displacement and Societal Impact

The widespread adoption of AI has the potential to displace workers in various industries. Understanding and mitigating the societal impact of AI is crucial.

- Industries at risk include manufacturing, transportation, and customer service.

- Addressing this challenge requires proactive measures such as retraining and education initiatives to help workers adapt to the changing job market. Responsible AI deployment takes these broader societal implications into account.

Conclusion: Towards a Responsible Future with AI

Current AI systems, while powerful, do not truly "learn" in the human sense. They are limited by data dependency, a lack of generalization, and an absence of true understanding. Misrepresenting these limitations has significant ethical implications, including automation bias, opacity, and job displacement. Understanding the limitations of "AI learning" is crucial for building a responsible and beneficial future with AI. Learn more about responsible AI implementation and advocate for ethical AI development to ensure this powerful technology is used for the good of humanity. Addressing the challenges of bias, generalization, and reasoning will be key to realizing the true potential of artificial intelligence.

Featured Posts

-

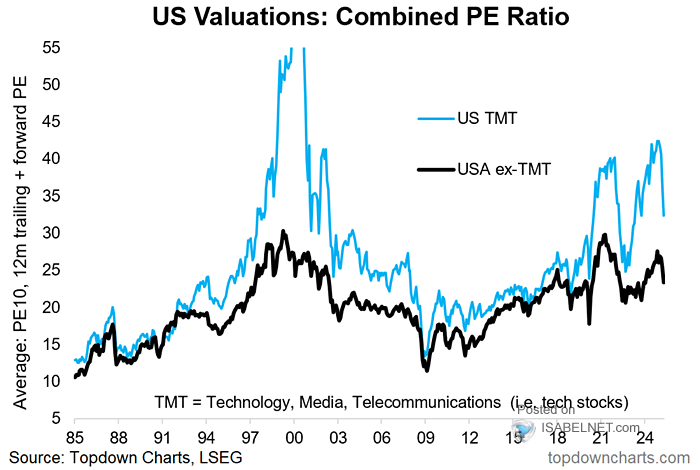

Stock Market Valuations Bof As Argument For Why Investors Shouldnt Worry

May 31, 2025

Stock Market Valuations Bof As Argument For Why Investors Shouldnt Worry

May 31, 2025 -

Massive Wildfire Smoke Engulfs Us From Canadas Largest Evacuation

May 31, 2025

Massive Wildfire Smoke Engulfs Us From Canadas Largest Evacuation

May 31, 2025 -

Us China Trade Flows Steady Amidst Tariff Truce

May 31, 2025

Us China Trade Flows Steady Amidst Tariff Truce

May 31, 2025 -

Elon Musks Departure From Trump Administration The End Of An Era

May 31, 2025

Elon Musks Departure From Trump Administration The End Of An Era

May 31, 2025 -

Gratis Wohnen In Deutschland Diese Stadt Sucht Neue Einwohner

May 31, 2025

Gratis Wohnen In Deutschland Diese Stadt Sucht Neue Einwohner

May 31, 2025