Unlocking Insights: How AI Creates Podcasts From Repetitive Scatological Data

The Challenge of Repetitive Scatological Data

The sheer volume and unstructured nature of repetitive scatological data present significant challenges.

Data Sources and the Limits of Manual Analysis:

- Sources: This data originates from diverse sources, including online forums, social media platforms (Twitter, Reddit, etc.), patient medical records, and even literature reviews. The data is often unstructured, messy, and contains slang, misspellings, and informal language.

- Challenges in manual analysis: Manually analyzing this data is incredibly time-consuming, expensive, and prone to subjective interpretation. The sheer volume makes it practically impossible to extract meaningful insights through traditional methods.

The Potential for Hidden Insights:

Despite the challenges, this data holds immense potential:

- Revealing trends and patterns: Analyzing this data can reveal trends and patterns in human behavior, shedding light on social norms, cultural influences, and even public health concerns. For instance, tracking mentions of specific symptoms could provide early warning signs of outbreaks.

- Understanding public sentiment and behavior: Analyzing the emotional tone and contextual information associated with scatological references can offer unique insights into public sentiment, attitudes towards taboo subjects, and overall societal shifts.
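To make the outbreak example concrete, here is a minimal sketch of keyword-based trend tracking. It counts the posts per day that mention a symptom term, then flags days that sit well above the recent baseline. The symptom list, the seven-day trailing window, and the threshold of three standard deviations are illustrative assumptions, not tuned values. A real surveillance system would need much more careful baselining.

```python
import re
from collections import Counter
from statistics import mean, pstdev

# Hypothetical symptom vocabulary; a real system would need a curated, multilingual list.
SYMPTOM_TERMS = {"diarrhea", "diarrhoea", "vomiting", "cramps"}


def daily_mention_counts(posts):
    """Count, per day, the posts that mention at least one symptom term.

    `posts` is an iterable of (day, text) pairs, where `day` is any hashable
    date-like value.
    """
    counts = Counter()
    for day, text in posts:
        words = set(re.findall(r"[a-z]+", text.lower()))
        if words & SYMPTOM_TERMS:
            counts[day] += 1
    return counts


def flag_spikes(series, window=7, threshold=3.0):
    """Return indices whose value exceeds the trailing-window mean by more
    than `threshold` standard deviations."""
    flagged = []
    for i in range(window, len(series)):
        baseline = series[i - window:i]
        mu, sigma = mean(baseline), pstdev(baseline)
        # Floor sigma at 1 so a flat baseline does not flag trivial increases.
        if series[i] - mu > threshold * max(sigma, 1.0):
            flagged.append(i)
    return flagged


# Example: a week of steady counts followed by a sudden jump.
print(flag_spikes([2, 3, 2, 4, 3, 2, 3, 15]))  # → [7]
```

The window is trailing, so a day can only be flagged once `window` days of history exist. Days with no mentions must be filled in as zeros before the daily counts are turned into `series`.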

AI's Role in Data Processing and Analysis

AI techniques make it practical to analyze repetitive scatological data at a scale that manual review cannot match.

Natural Language Processing (NLP) for text analysis:

- Pattern and theme identification: NLP techniques, such as sentiment analysis and topic modeling, enable AI to sift through vast amounts of text data, identifying recurring themes, prevalent sentiments, and significant patterns. This helps to categorize and organize the otherwise chaotic data.

- Specific NLP algorithms: Common choices include Latent Dirichlet Allocation (LDA) for topic modeling and sentiment analysis libraries such as VADER and TextBlob.
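Lexicon-based sentiment analyzers such as VADER work by looking up word-level polarity scores and adjusting them for context such as negation. The sketch below is a deliberately tiny, self-contained illustration of that idea, not VADER itself. The lexicon and its weights, the negator list, and the three-token negation window are all made-up assumptions. In practice you would use an established library and validate it on slang-heavy text.

```python
import math
import re

# Toy polarity lexicon (hypothetical weights, for illustration only).
LEXICON = {
    "gross": -2.0, "disgusting": -3.0, "awful": -2.5, "painful": -2.0,
    "relief": 2.0, "better": 1.5, "funny": 1.5, "hilarious": 2.5,
}
NEGATORS = {"not", "no", "never", "isn't", "wasn't", "don't"}


def tokenize(text):
    # Keep apostrophes so contractions like "isn't" survive as one token.
    return re.findall(r"[a-z']+", text.lower())


def sentiment(text, window=3):
    """Return a score in (-1, 1): negative is unfavorable, positive favorable."""
    tokens = tokenize(text)
    total = 0.0
    for i, tok in enumerate(tokens):
        weight = LEXICON.get(tok)
        if weight is None:
            continue
        # Flip polarity if a negator appears among the preceding `window` tokens.
        if any(t in NEGATORS for t in tokens[max(0, i - window):i]):
            weight = -weight
        total += weight
    # Squash the unbounded sum smoothly into (-1, 1).
    return total / math.sqrt(total * total + 15)
```

A fixed negation window is crude. For example, in "not funny, just gross" the "not" also flips "gross". Mistakes like this are why off-the-shelf tools need checking against hand-labeled samples of the actual data.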

Machine Learning for Pattern Recognition:

- Identifying recurring themes and anomalies: Machine learning algorithms, particularly unsupervised learning techniques like clustering, can identify recurring themes and anomalies in the data that might be missed by human analysts.

- Supervised vs. unsupervised learning: Supervised learning classifies data into predefined categories and requires labeled training examples, while unsupervised learning discovers hidden structures and patterns without labels.
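As a concrete instance of the unsupervised case, the sketch below clusters short posts with k-means over bag-of-words vectors, using only the Python standard library. It illustrates how clustering might be applied here. It does not describe any specific production system. Real pipelines would typically use TF-IDF weights or embeddings and a library implementation such as scikit-learn. Farthest-first initialization is used here only to keep the example deterministic.

```python
import math
import re
from collections import Counter


def vectorize(texts):
    """Turn texts into L2-normalized bag-of-words vectors over a shared vocabulary."""
    docs = [Counter(re.findall(r"[a-z]+", t.lower())) for t in texts]
    vocab = sorted(set().union(*docs))
    vectors = []
    for doc in docs:
        v = [float(doc[w]) for w in vocab]
        norm = math.sqrt(sum(x * x for x in v)) or 1.0  # avoid dividing by zero
        vectors.append([x / norm for x in v])
    return vectors


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(vectors, k, iters=20):
    """Lloyd's k-means with farthest-first initialization; returns a label per vector."""
    # Deterministic init: start from the first point, then repeatedly add the
    # point farthest from all centroids chosen so far.
    centroids = [list(vectors[0])]
    while len(centroids) < k:
        far = max(vectors, key=lambda v: min(sq_dist(v, c) for c in centroids))
        centroids.append(list(far))
    labels = [0] * len(vectors)
    for _ in range(iters):
        # Assignment step: nearest centroid by squared Euclidean distance.
        labels = [min(range(k), key=lambda c: sq_dist(v, centroids[c])) for v in vectors]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [v for v, lab in zip(vectors, labels) if lab == c]
            if members:  # keep the previous centroid if a cluster empties out
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


posts = ["toilet humor joke", "toilet joke humor", "stomach bug symptoms", "symptoms of stomach bug"]
print(kmeans(vectorize(posts), k=2))  # → [0, 0, 1, 1]
```

Here the jokes and the symptom reports land in separate clusters without any labels being supplied. A supervised classifier, by contrast, would need examples already tagged as "humor" or "health".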

Data Cleaning and Preprocessing:

- Importance of data cleaning: Before analysis, the data must be cleaned. This includes removing irrelevant information, handling missing data, and correcting inconsistencies.

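- Data preprocessing techniques: Techniques like stemming (heuristically stripping suffixes to reach a stem, which may not be a real word) and lemmatization (mapping each word to its dictionary form, or lemma) standardize the text and can improve the accuracy of NLP algorithms. Normalizing slang and variant spellings serves the same purpose for informal text.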
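The cleaning and preprocessing steps above can be sketched as a small pipeline:

1. Drop empty records.
2. Strip URLs and user handles.
3. Remove exact duplicates.
4. Map a few slang or variant spellings to standard forms.
5. Stem or lemmatize the remaining words.

The variant and lemma tables are hypothetical stand-ins. The suffix-stripping stemmer is a crude illustration of the idea, not the Porter or Snowball algorithm. Production code would use an NLP library for both stemming and lemmatization.

```python
import re

# Hypothetical normalization tables; real ones would be much larger and curated.
VARIANTS = {"tummy": "stomach", "diarrhoea": "diarrhea", "pls": "please"}
LEMMAS = {"studies": "study", "was": "be", "were": "be", "ran": "run"}
SUFFIXES = ("ing", "ed", "es", "s")

URL_RE = re.compile(r"https?://\S+")
HANDLE_RE = re.compile(r"(?<!\w)@\w+|\bu/\w+")


def clean(records):
    """Drop missing or empty texts, strip URLs and handles, and remove exact duplicates."""
    seen, out = set(), []
    for text in records:
        if not text or not text.strip():
            continue  # missing data: simply dropped in this sketch
        text = HANDLE_RE.sub(" ", URL_RE.sub(" ", text))
        text = " ".join(text.lower().split())  # normalize case and whitespace
        if text and text not in seen:
            seen.add(text)
            out.append(text)
    return out


def tokenize(text):
    """Split into lowercase words and map known slang/variant spellings."""
    return [VARIANTS.get(w, w) for w in re.findall(r"[a-z]+", text.lower())]


def crude_stem(word):
    """Strip the first matching suffix, keeping at least three characters."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def lemmatize(word):
    """Dictionary lookup; fall back to the word itself when no lemma is known."""
    return LEMMAS.get(word, word)


print(crude_stem("studies"), lemmatize("studies"))  # → studi study
```

The last line shows the difference between the two techniques: the stem "studi" is not a real word, while the lemma "study" is.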

Transforming Data into Engaging Podcast Content

Once the data is processed and analyzed, AI can transform it into compelling podcast content.

Structuring the Narrative:

- Logical flow and structure: AI algorithms can create a logical flow and structure for the podcast episodes, ensuring a coherent and engaging narrative. This might involve identifying key storylines and arranging them chronologically or thematically.

- Engaging storytelling: AI can help weave the data into a captivating story, using techniques like cliffhangers, unexpected twists, and compelling character development (even if the "characters" are represented by aggregated data points).
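One simple way to turn analysis output into a running order:

- Group findings by theme.
- Order the themes by when they first appeared.
- Keep findings in chronological order within each theme.

The `Finding` record and its fields are hypothetical. They stand in for whatever the upstream topic-modeling step produces. This sketch handles only the structure of an episode, not the storytelling itself.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Finding:
    theme: str      # e.g. a topic label from the topic-modeling step
    observed: date  # when the pattern was observed
    summary: str    # one-sentence description for the script writer


def episode_outline(findings):
    """Return [(theme, [summaries...]), ...] ordered by each theme's earliest finding."""
    by_theme = {}
    for f in sorted(findings, key=lambda f: f.observed):
        by_theme.setdefault(f.theme, []).append(f.summary)
    # Dicts preserve insertion order, so themes appear in order of first observation.
    return list(by_theme.items())
```

Sorting once by date and then grouping gives both orderings at the same time. To switch to a purely thematic order, sort the themes by some other key, such as the number of findings, before writing the script.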

Generating Voiceovers and Sound Effects:

- Text-to-speech technology: Modern text-to-speech (TTS) systems can generate natural-sounding voiceovers, turning a script written from the analysis into audio.

- Sound design for listener experience: Well-chosen sound effects and music make the podcast more immersive and engaging.
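Many TTS services accept input in SSML (Speech Synthesis Markup Language, a W3C standard). SSML lets a script specify pauses and embed pre-recorded audio such as sound effects. The sketch below builds an SSML document from a list of script segments using Python's standard `xml.etree.ElementTree`. The segment format and the sound-effect URL are hypothetical. Individual TTS vendors support different subsets of SSML, so check your provider's documentation.

```python
import xml.etree.ElementTree as ET

SSML_NS = "http://www.w3.org/2001/10/synthesis"


def build_ssml(segments, lang="en-US"):
    """Build SSML from ("say", text), ("pause", ms) and ("sfx", url) segments."""
    speak = ET.Element("speak", {"version": "1.1", "xmlns": SSML_NS, "xml:lang": lang})
    for kind, value in segments:
        if kind == "say":
            ET.SubElement(speak, "s").text = value  # one spoken sentence
        elif kind == "pause":
            ET.SubElement(speak, "break", {"time": f"{int(value)}ms"})
        elif kind == "sfx":
            # <audio> plays a pre-recorded clip; an empty body means no spoken fallback.
            ET.SubElement(speak, "audio", {"src": value})
        else:
            raise ValueError(f"unknown segment kind: {kind!r}")
    return ET.tostring(speak, encoding="unicode")
```

For example, `build_ssml([("say", "Welcome back."), ("pause", 500), ("sfx", "https://example.com/flush.mp3")])` produces a sentence, a half-second pause and a sound effect, in that order. Keeping segments as plain tuples makes the script easy to generate from the narrative outline.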

Podcast Production and Distribution:

- Post-production: Once the audio is produced, it needs to be edited, mastered, and prepared for publication.

- Podcast distribution platforms: Finally, the podcast is published to directories such as Spotify and Apple Podcasts to reach a wider audience. These directories typically ingest an RSS feed that lists each episode and links to its audio file.
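Podcast directories generally ingest episodes from an RSS 2.0 feed. In that feed, each episode is an `<item>` with an `<enclosure>` pointing at the audio file. The sketch below generates a minimal feed with the standard library. All titles and URLs are placeholders, and the `episodes` dictionary shape is a hypothetical choice. Real directories require additional channel-level tags; Apple Podcasts, for example, expects tags in its `itunes:` namespace for artwork and category. Treat this as a starting point and validate the feed against each platform's requirements.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone
from email.utils import format_datetime


def build_feed(show_title, show_link, description, episodes):
    """Return an RSS 2.0 feed string; `episodes` is a list of dicts (hypothetical shape)."""
    rss = ET.Element("rss", {"version": "2.0"})
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = show_title
    ET.SubElement(channel, "link").text = show_link
    ET.SubElement(channel, "description").text = description
    for ep in episodes:
        item = ET.SubElement(channel, "item")
        ET.SubElement(item, "title").text = ep["title"]
        ET.SubElement(item, "guid", {"isPermaLink": "false"}).text = ep["guid"]
        # RSS expects RFC 822-style dates, which email.utils.format_datetime produces.
        ET.SubElement(item, "pubDate").text = format_datetime(ep["published"])
        ET.SubElement(item, "enclosure", {
            "url": ep["audio_url"],
            "length": str(ep["size_bytes"]),  # file size in bytes
            "type": "audio/mpeg",
        })
    return ET.tostring(rss, encoding="unicode")


if __name__ == "__main__":
    print(build_feed("Example Show", "https://example.com", "Placeholder description", [{
        "title": "Episode 1", "guid": "ep-1",
        "published": datetime(2025, 5, 1, 9, 0, tzinfo=timezone.utc),
        "audio_url": "https://example.com/ep1.mp3", "size_bytes": 12345678,
    }]))
```

Pass timezone-aware datetimes to `build_feed`. A naive datetime is formatted with a `-0000` offset, which marks the time zone as unknown.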

Ethical Considerations and Privacy

The use of AI with sensitive data like scatological information requires careful consideration of ethical implications and privacy concerns.

Anonymization and Data Privacy:

- Protecting user privacy: Robust anonymization techniques are crucial to protect user privacy and ensure compliance with data protection regulations like GDPR and HIPAA. This might involve removing identifying information, aggregating data, or using differential privacy methods.

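- Compliance with data protection regulations: Which rules apply depends on the source. GDPR governs the personal data of people in the EU, while HIPAA covers health information held by US healthcare providers, health plans, and their business associates.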
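The anonymization techniques listed above can be combined in a small sketch with three parts:

- Redaction: remove obvious identifiers such as e-mail addresses and @-style or u/ handles.
- Pseudonymization: replace author IDs with keyed hashes.
- Noisy aggregation: release only aggregate counts with Laplace noise added. This is the standard mechanism for epsilon-differential privacy on counting queries.

The regex patterns, the key handling, and any epsilon value you pass in are illustrative assumptions. Regex redaction will miss many identifiers. Pseudonymized data is still generally treated as personal data under GDPR. This is therefore not a compliance recipe.

```python
import hashlib
import hmac
import random
import re

EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")
HANDLE_RE = re.compile(r"(?<!\w)@\w+|\bu/\w+")


def redact(text):
    """Replace e-mail addresses and user handles with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)  # e-mails first, so their '@' is not read as a handle
    return HANDLE_RE.sub("[USER]", text)


def pseudonymize(user_id, key):
    """Keyed hash (HMAC-SHA256): stable per user; linking back requires the secret key."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def noisy_count(true_count, epsilon, rng=random):
    """Laplace mechanism for a counting query (sensitivity 1): noise scale b = 1/epsilon."""
    b = 1.0 / epsilon
    # The difference of two i.i.d. exponential draws with mean b is Laplace(0, b).
    noise = rng.expovariate(1 / b) - rng.expovariate(1 / b)
    return true_count + noise
```

Smaller values of `epsilon` add more noise and give stronger privacy. Each released count consumes part of the overall privacy budget, so the number of published statistics should be limited.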

Responsible Data Usage:

- Ethical implications of using sensitive data: It is essential to consider the potential ethical implications of using this type of sensitive data and to ensure that the research and resulting podcast content are used responsibly.

- Best practices for responsible data handling: Following best practices for responsible data handling, including transparency about data collection and usage, is crucial to maintain ethical standards.

Unlocking the Power of Repetitive Scatological Data through AI-Powered Podcasts

In conclusion, AI offers a powerful approach to unlock the hidden insights within repetitive scatological data. By combining advanced data processing techniques, sophisticated NLP and machine learning algorithms, and creative podcast production methods, we can transform seemingly insignificant data into valuable knowledge. This approach offers the potential to gain deeper understandings of human behavior, improve public health initiatives, and inform public policy. We encourage further exploration into this field. Contact us to learn more about AI-driven podcast creation from this type of data and how you can leverage its power to transform your understanding of complex social phenomena.

Featured Posts

-

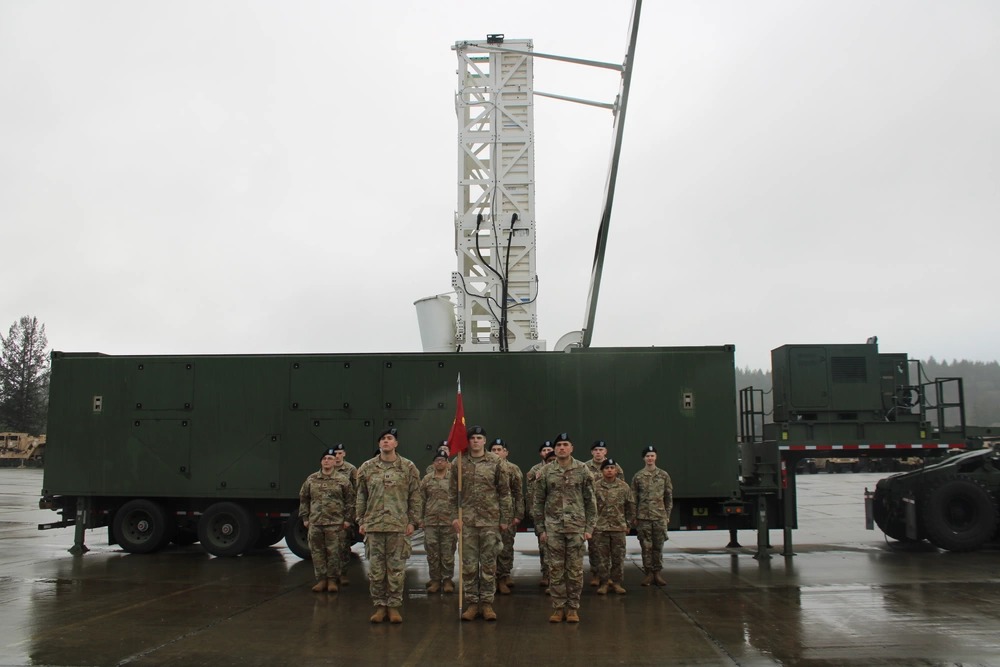

The Strategic Implications Of The Us Typhon Missile System In The Philippines A Case Study Of Chinas Growing Influence

May 20, 2025

The Strategic Implications Of The Us Typhon Missile System In The Philippines A Case Study Of Chinas Growing Influence

May 20, 2025 -

Towards Zero Episode 1 Analyzing The Absence Of Murder

May 20, 2025

Towards Zero Episode 1 Analyzing The Absence Of Murder

May 20, 2025 -

The Impact Of Shrinking Enrollment On College Town Economies

May 20, 2025

The Impact Of Shrinking Enrollment On College Town Economies

May 20, 2025 -

Incendio Na Tijuca Escola Em Luto Pais E Ex Alunos Emocionados

May 20, 2025

Incendio Na Tijuca Escola Em Luto Pais E Ex Alunos Emocionados

May 20, 2025 -

Istoriya Uspekha Mirry Andreevoy Biografiya I Analiz Igr

May 20, 2025

Istoriya Uspekha Mirry Andreevoy Biografiya I Analiz Igr

May 20, 2025

Latest Posts

-

The Wayne Gretzky Loyalty Debate Examining The Impact Of Trumps Policies On Canada Us Relations

May 20, 2025

The Wayne Gretzky Loyalty Debate Examining The Impact Of Trumps Policies On Canada Us Relations

May 20, 2025 -

Trumps Tariffs Gretzkys Loyalty A Canada Us Hockey Debate

May 20, 2025

Trumps Tariffs Gretzkys Loyalty A Canada Us Hockey Debate

May 20, 2025 -

Wayne Gretzky Fast Facts A Quick Look At The Great Ones Life And Career

May 20, 2025

Wayne Gretzky Fast Facts A Quick Look At The Great Ones Life And Career

May 20, 2025 -

Trumps Trade Policies And Gretzkys Allegiance A Look At The Stirred Debate

May 20, 2025

Trumps Trade Policies And Gretzkys Allegiance A Look At The Stirred Debate

May 20, 2025 -

Paulina Gretzkys Latest Look A Leopard Dress Inspired By The Sopranos

May 20, 2025

Paulina Gretzkys Latest Look A Leopard Dress Inspired By The Sopranos

May 20, 2025