Understanding And Implementing The Updated CNIL Guidelines For AI Models

Key Changes in the Updated CNIL Guidelines for AI Models

The CNIL's updated guidelines on AI represent a significant shift toward a more robust and responsible approach to AI development and deployment. The changes reflect growing global concern about the ethical and societal implications of AI technologies, and they aim to ensure fairness, transparency, and accountability in the use of AI systems.

- Increased focus on transparency and explainability: The CNIL emphasizes that people should be able to understand how AI systems make decisions that affect them. This means providing clear, accessible explanations of how a system reaches its outputs, especially in high-stakes situations. Transparency of this kind is crucial for building trust and ensuring accountability, and Explainable AI (XAI) techniques are becoming increasingly important for compliance.

- Stricter rules on data protection and user rights: The guidelines reinforce fundamental data protection rights and align closely with the GDPR. This includes having a valid legal basis for each processing operation (such as consent, where consent is the appropriate basis), applying data minimization, and giving individuals meaningful control over their data. The aim is to ensure that AI systems do not infringe on privacy rights.

- Emphasis on risk assessment and mitigation strategies: Before deploying AI systems, organizations must conduct thorough risk assessments to identify and mitigate potential biases, discriminatory outcomes, and other negative impacts. This requires a proactive approach to responsible AI development, and the CNIL expects these assessments to be documented in detail.

- New requirements for data governance and accountability: The updated guidelines stress clear lines of responsibility for AI systems, including defined roles, responsibilities, and accountability mechanisms throughout the AI lifecycle. Robust data governance structures are essential to support this.

- Clarification on the use of AI in specific sectors (e.g., healthcare, finance): The CNIL provides sector-specific guidance that addresses the particular challenges of AI applications in sensitive areas such as healthcare and finance. This tailored approach helps organizations achieve compliance across different domains.
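For a simple linear scoring model, one way to produce a per-decision explanation is to report each feature's contribution as weight × (value − baseline), ranked by impact. The sketch below is illustrative only: the `LinearModel` class, the feature names, weights, and baseline values are hypothetical and are not taken from CNIL guidance. Non-linear models generally need dedicated XAI methods such as SHAP or LIME.

```python
from dataclasses import dataclass


@dataclass
class LinearModel:
    """Hypothetical linear scoring model: score = bias + sum(weight * value)."""
    weights: dict[str, float]
    bias: float

    def score(self, features: dict[str, float]) -> float:
        return self.bias + sum(w * features[name] for name, w in self.weights.items())


def explain(model: LinearModel, features: dict[str, float],
            baseline: dict[str, float]) -> list[tuple[str, float]]:
    """Return each feature's contribution relative to a baseline input,
    sorted by absolute impact (largest first).

    For a linear model the contributions sum exactly to
    score(features) - score(baseline).
    """
    contributions = [
        (name, w * (features[name] - baseline[name]))
        for name, w in model.weights.items()
    ]
    return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)


if __name__ == "__main__":
    # Illustrative values only; not a real credit model.
    model = LinearModel(
        weights={"income": 0.5, "debt_ratio": -2.0, "late_payments": -1.5},
        bias=1.0,
    )
    applicant = {"income": 4.0, "debt_ratio": 0.6, "late_payments": 2.0}
    baseline = {"income": 3.0, "debt_ratio": 0.3, "late_payments": 0.0}
    for name, contribution in explain(model, applicant, baseline):
        print(f"{name}: {contribution:+.2f}")
```

Running it lists `late_payments: -3.00`, then `debt_ratio: -0.60`, then `income: +0.50`, which can be turned into a plain-language statement of the main factors behind a decision.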

Understanding the Principles of Responsible AI under CNIL Guidelines

The updated CNIL guidelines are built upon core principles of responsible AI. Adherence to these principles is not merely advisable; it’s a requirement for compliance.

- Fairness: AI systems must be designed and implemented to avoid bias and to treat all individuals fairly. This requires careful scrutiny of training data, algorithms, and the potential for discriminatory outcomes. Bias mitigation techniques, such as data augmentation and algorithmic fairness methods, are essential.

- Accountability: Clear lines of responsibility must be established for AI systems, along with mechanisms for auditing AI processes, identifying potential issues, and correcting errors. Algorithmic auditing is crucial for demonstrating compliance.

- Human oversight: Human oversight is paramount to ensuring that AI systems are used responsibly and ethically. In practice, a human must be able to intervene, especially in high-stakes decisions, which is why "human-in-the-loop" systems are becoming increasingly important for ethical AI development.

- Transparency: AI systems should be transparent in their operation. Their decision-making processes should be understandable, so that individuals can see how decisions affecting them are made. This builds trust and supports accountability.
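One common way to check the fairness principle in practice is demographic parity: compare the rate of positive outcomes (for example, approvals) across groups. The sketch below computes the largest gap between groups; it is one metric among many, the example data are made up, and the `REVIEW_THRESHOLD` value is a hypothetical internal policy choice, not a figure set by the CNIL. A flagged gap is a prompt for human review, not proof of discrimination.

```python
from collections import defaultdict


def selection_rates(outcomes: list[int], groups: list[str]) -> dict[str, float]:
    """Share of positive outcomes per group. Outcomes must be 0 or 1."""
    if len(outcomes) != len(groups):
        raise ValueError("outcomes and groups must have the same length")
    positives: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for outcome, group in zip(outcomes, groups):
        totals[group] += 1
        positives[group] += outcome
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(outcomes: list[int], groups: list[str]) -> float:
    """Largest gap in selection rate between any two groups (0.0 = parity)."""
    rates = selection_rates(outcomes, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical review threshold chosen for illustration; not set by the CNIL.
REVIEW_THRESHOLD = 0.1

if __name__ == "__main__":
    outcomes = [1, 0, 1, 1, 0, 1, 0, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    gap = demographic_parity_difference(outcomes, groups)
    print(f"gap = {gap:.2f}")  # group A: 3/4, group B: 1/4, so gap = 0.50
    if gap > REVIEW_THRESHOLD:
        # Route to a human reviewer rather than deciding automatically.
        print("Flag model for human review and bias mitigation")
```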

Practical Implementation Steps for Compliance with CNIL AI Guidelines

Compliance with the updated CNIL guidelines requires a proactive and comprehensive approach. Here are actionable steps businesses can take:

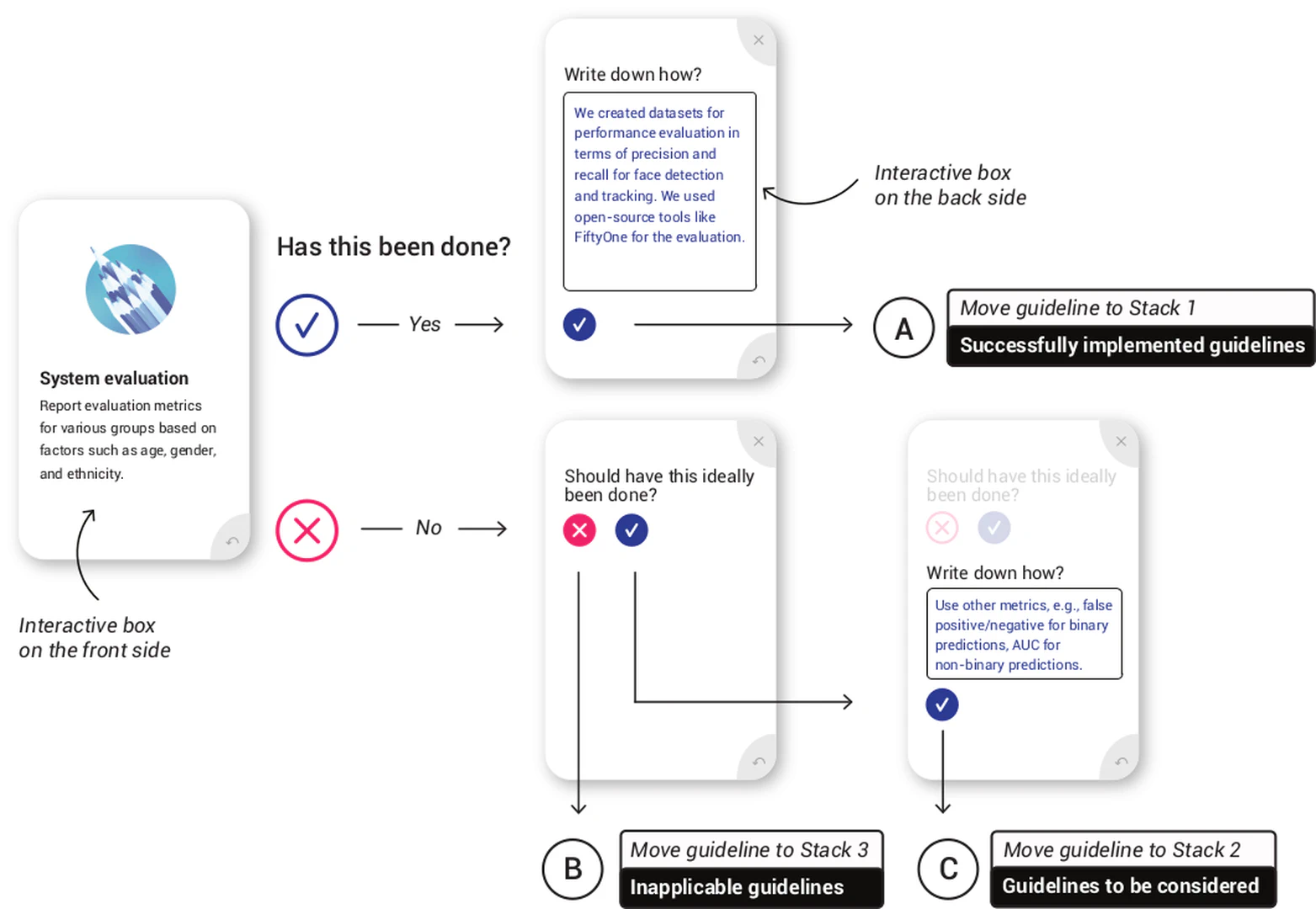

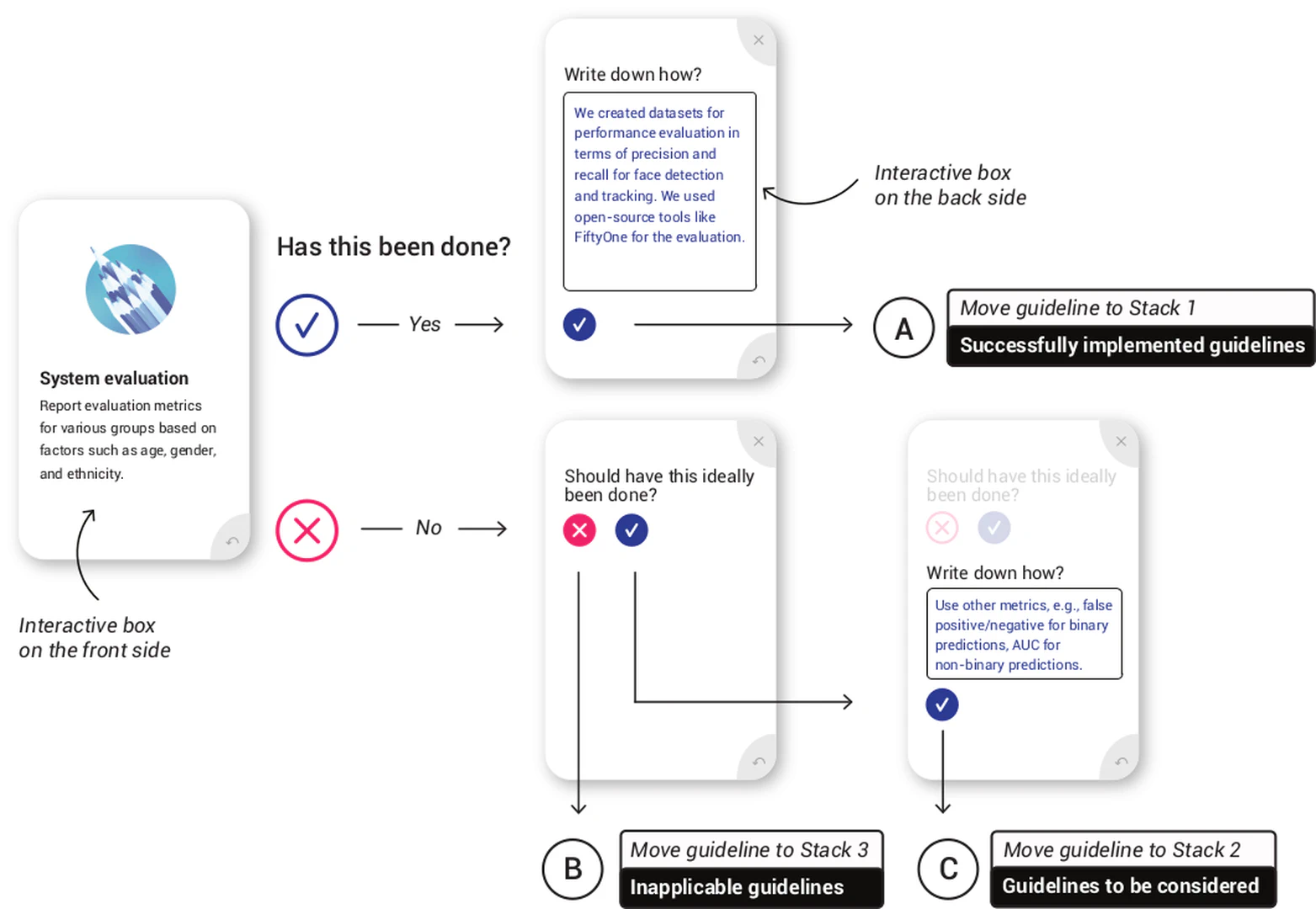

- Conduct a thorough risk assessment of your AI systems: Identify potential risks and vulnerabilities, focusing on bias, data protection, and other ethical considerations.

- Develop a comprehensive data governance framework: Establish clear policies and procedures for data collection, storage, processing, and use in AI systems.

- Implement robust data protection measures (e.g., encryption, access control): Securely protect user data throughout the AI lifecycle.

- Document your AI development and deployment processes: Maintain detailed records of all stages of AI development, deployment, and monitoring. This documentation is critical for demonstrating compliance.

- Establish mechanisms for user data access, correction, and deletion: Ensure individuals have control over their data and can exercise their rights under the GDPR.

- Train employees on the updated CNIL guidelines and responsible AI practices: Ensure your workforce understands the updated guidelines and applies them in practice.
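The access, correction, and deletion step corresponds to GDPR rights such as access (Art. 15), rectification (Art. 16), and erasure (Art. 17). The sketch below shows the shape of a request handler over a hypothetical in-memory store; the `PersonalDataStore` class and its methods are illustrative assumptions. A real system would also need identity verification, deadlines, and propagation to backups, logs, and training datasets.

```python
from copy import deepcopy
from enum import Enum
from typing import Optional


class RequestType(Enum):
    ACCESS = "access"    # GDPR Art. 15
    RECTIFY = "rectify"  # GDPR Art. 16
    ERASE = "erase"      # GDPR Art. 17


class PersonalDataStore:
    """Hypothetical in-memory store of personal data keyed by subject ID."""

    def __init__(self) -> None:
        self._records: dict[str, dict[str, str]] = {}
        # Assumption: the log keeps only the subject ID and the action,
        # as evidence that each request was handled.
        self.audit_log: list[tuple[str, str]] = []

    def add(self, subject_id: str, record: dict[str, str]) -> None:
        self._records[subject_id] = dict(record)

    def handle(self, subject_id: str, request: RequestType,
               updates: Optional[dict[str, str]] = None) -> Optional[dict[str, str]]:
        """Apply a data-subject request and record it in the audit log."""
        if subject_id not in self._records:
            raise KeyError(f"no data held for subject {subject_id!r}")
        self.audit_log.append((subject_id, request.value))
        if request is RequestType.ACCESS:
            # Return a copy so callers cannot mutate stored data.
            return deepcopy(self._records[subject_id])
        if request is RequestType.RECTIFY:
            self._records[subject_id].update(updates or {})
            return deepcopy(self._records[subject_id])
        # ERASE: delete the record entirely.
        del self._records[subject_id]
        return None


if __name__ == "__main__":
    store = PersonalDataStore()
    store.add("u42", {"email": "old@example.com", "city": "Lyon"})
    store.handle("u42", RequestType.RECTIFY, {"email": "new@example.com"})
    print(store.handle("u42", RequestType.ACCESS))
    store.handle("u42", RequestType.ERASE)
    print(store.audit_log)
```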

Avoiding Penalties and Ensuring Ongoing Compliance with CNIL AI Regulations

Non-compliance with the CNIL guidelines can lead to significant consequences:

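- Financial penalties: Where non-compliance amounts to a GDPR violation, the CNIL can impose fines of up to €20 million or 4% of total worldwide annual turnover, whichever is higher.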

- Reputational damage: Non-compliance can severely damage an organization's reputation and erode public trust.

- Legal challenges: Organizations may face lawsuits from individuals affected by non-compliant AI systems.
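Under GDPR Art. 83(5), fines for the most serious infringements can reach €20 million or, for an undertaking, 4% of total worldwide annual turnover of the preceding financial year, whichever is higher; Art. 83(4) sets €10 million or 2% for other infringements. The sketch below computes only that statutory ceiling; the tier labels are hypothetical names, and actual fines are decided case by case and are usually far below the maximum.

```python
from decimal import Decimal

# Ceilings for an undertaking under GDPR Art. 83(4) and 83(5):
# (fixed amount in EUR, share of worldwide annual turnover).
# The dictionary keys are illustrative labels, not official names.
TIERS = {
    "art83_4": (Decimal("10000000"), Decimal("0.02")),
    "art83_5": (Decimal("20000000"), Decimal("0.04")),
}


def max_fine_eur(annual_turnover_eur: Decimal, tier: str = "art83_5") -> Decimal:
    """Upper bound of an administrative fine: the higher of the fixed amount
    and the percentage of the preceding year's worldwide turnover.
    This is a ceiling only, not an estimate of an actual fine."""
    fixed, share = TIERS[tier]
    return max(fixed, annual_turnover_eur * share)


if __name__ == "__main__":
    print(max_fine_eur(Decimal("100000000")))   # 20000000 (fixed amount is higher)
    print(max_fine_eur(Decimal("1000000000")))  # 40000000.00 (4% is higher)
```

Decimal is used instead of float so that monetary amounts are computed exactly.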

To avoid penalties and ensure ongoing compliance:

- Implement a robust monitoring and evaluation system: Regularly review and update your AI systems and processes to ensure ongoing compliance with the CNIL guidelines.

- Stay updated on CNIL announcements and guidance: The regulatory landscape is constantly evolving. Stay informed about changes and updates through official CNIL channels. Consult with legal experts specializing in data protection and AI.
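A monitoring system can start with something as simple as an inventory of deployed AI systems, each with an accountable owner and the date of its last compliance review. The sketch below flags systems whose review is overdue; the `AISystem` fields and the 180-day default interval are hypothetical choices for illustration, not a review frequency prescribed by the CNIL.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class AISystem:
    """Hypothetical inventory entry for one deployed AI system."""
    name: str
    owner: str          # accountable team or person
    last_review: date   # date of the last compliance review


def overdue_reviews(systems: list[AISystem], today: date,
                    interval: timedelta = timedelta(days=180)) -> list[AISystem]:
    """Return systems whose last review is older than `interval`, oldest first."""
    overdue = [s for s in systems if today - s.last_review > interval]
    return sorted(overdue, key=lambda s: s.last_review)


if __name__ == "__main__":
    inventory = [
        AISystem("credit-scoring", "risk-team", date(2024, 9, 1)),
        AISystem("support-chatbot", "cx-team", date(2025, 3, 15)),
    ]
    for system in overdue_reviews(inventory, today=date(2025, 4, 30)):
        print(f"Review overdue: {system.name} (owner: {system.owner})")
```

Keeping an owner on each entry also supports the accountability requirement, since every overdue review has a named person or team to follow up.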

Conclusion

The updated CNIL Guidelines for AI Models demand a proactive approach to responsible AI development and deployment. Compliance is not just a legal obligation; it is essential for building trust, protecting your brand, and reducing legal risk. Organizations that use AI should act now to assess their systems against the guidelines and build a responsible AI strategy, using official CNIL resources to keep pace with new guidance. Failing to do so could lead to significant financial and reputational consequences.

Featured Posts

-

Bibee Settles In Despite Early Homer Guardians Defeat Yankees 3 2

Apr 30, 2025

Bibee Settles In Despite Early Homer Guardians Defeat Yankees 3 2

Apr 30, 2025 -

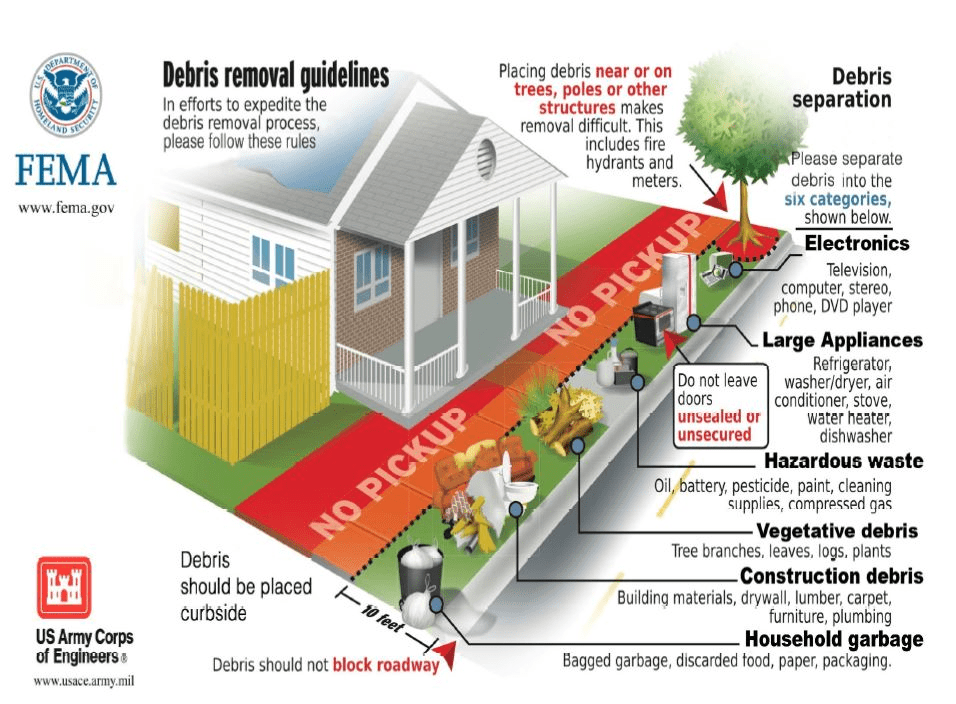

Louisville Launches Storm Debris Pickup Request System

Apr 30, 2025

Louisville Launches Storm Debris Pickup Request System

Apr 30, 2025 -

Emotional Coronation Street Departure Jordan And Fallons Joint Thank You Update

Apr 30, 2025

Emotional Coronation Street Departure Jordan And Fallons Joint Thank You Update

Apr 30, 2025 -

Giai Bong Da Thanh Nien Sinh Vien Tran Dau Mo Man Vong Chung Ket Hap Dan

Apr 30, 2025

Giai Bong Da Thanh Nien Sinh Vien Tran Dau Mo Man Vong Chung Ket Hap Dan

Apr 30, 2025 -

The Reality Of 9 Children Amanda Owens Honest Family Photos

Apr 30, 2025

The Reality Of 9 Children Amanda Owens Honest Family Photos

Apr 30, 2025