Understanding AI's Learning Process: Implications For Responsible Technology

Types of AI Learning

AI learning isn't a monolithic process; it encompasses several distinct approaches, each with its own strengths, weaknesses, and ethical considerations. Understanding these differences is key to responsible AI development.

Supervised Learning

In supervised learning, an algorithm learns from labeled data: each training input is paired with the output it should produce. By finding patterns that connect inputs to their labels, the algorithm learns a mapping that it can then apply to new, unlabeled inputs.

- Example: Image recognition – training an AI to identify cats in images by showing it thousands of labeled images of cats and other animals. The algorithm learns to associate specific visual features (e.g., pointy ears, whiskers) with the label "cat."

- Can achieve high accuracy when given enough high-quality labeled data.

- Requires significant labeled datasets, which can be time-consuming and expensive to create.

- Prone to bias when the training data is unrepresentative: if the dataset predominantly features one breed of cat, the AI may struggle to recognize other breeds.

- Well-suited for tasks like image classification, spam filtering, and medical diagnosis.
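To make the idea concrete, here is a minimal sketch of supervised learning in plain Python. It assumes each image has already been reduced to two hypothetical numeric features (ear pointiness and whisker length) and uses a nearest-centroid classifier. This is a deliberately simple stand-in for the neural networks used in real image recognition. Training averages the labeled examples for each class, and prediction picks the closest average. The data and the function names (`fit_centroids`, `predict`) are invented for illustration.

```python
from math import dist

# Hypothetical toy features: (ear_pointiness, whisker_length), both scaled 0-1.
# These numbers are made up for illustration, not real image features.
training_data = [
    ((0.9, 0.8), "cat"),
    ((0.8, 0.9), "cat"),
    ((0.85, 0.7), "cat"),
    ((0.2, 0.3), "dog"),
    ((0.3, 0.1), "dog"),
    ((0.1, 0.2), "dog"),
]

def fit_centroids(examples):
    """Learn one centroid (mean feature vector) per label from labeled examples."""
    sums = {}
    counts = {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in acc) for label, acc in sums.items()}

def predict(centroids, features):
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: dist(centroids[label], features))

centroids = fit_centroids(training_data)
print(predict(centroids, (0.75, 0.85)))  # → cat
print(predict(centroids, (0.15, 0.25)))  # → dog
```

The learned centroids are simply averages of the training examples. They therefore inherit whatever the training set over- or under-represents, which is the bias risk described above in miniature.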

Unsupervised Learning

Unlike supervised learning, unsupervised learning involves training AI on unlabeled data. The algorithm is not given explicit instructions on what to look for; instead, it identifies patterns, structures, and relationships within the data itself.

- Example: Customer segmentation – grouping customers based on their purchasing behavior without pre-defined categories. The algorithm might identify distinct customer segments based on spending habits, demographics, or product preferences.

- Discovers hidden patterns and structures in data that might not be apparent to humans.

- Generally less accurate than supervised learning for specific prediction tasks, and harder to evaluate, since there are no labels to check the results against.

- Can be computationally expensive, especially with large datasets.

- Useful for tasks like customer segmentation, anomaly detection (e.g., identifying fraudulent transactions), and data exploration.
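A minimal k-means sketch shows how clustering can find customer segments without any labels. The two features per customer (annual spend and purchase frequency) and all values are hypothetical. Seeding the centroids with the first k points is also a simplification: practical implementations usually use smarter initialization, such as k-means++, and several restarts.

```python
from math import dist

# Hypothetical customers: (annual_spend_in_thousands, purchases_per_month).
customers = [
    (1.0, 2.0), (1.5, 1.8), (1.2, 2.2),  # low spend, infrequent
    (8.0, 9.0), (8.5, 8.7), (7.8, 9.4),  # high spend, frequent
]

def kmeans(points, k, iterations=10):
    """Plain k-means (Lloyd's algorithm); the first k points seed the centroids."""
    centroids = [tuple(p) for p in points[:k]]
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: dist(p, centroids[c]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        new_centroids = []
        for c, members in enumerate(clusters):
            if members:
                new_centroids.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # leave an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # assignments have stabilized
        centroids = new_centroids
    labels = [min(range(k), key=lambda c: dist(p, centroids[c])) for p in points]
    return centroids, labels

centroids, labels = kmeans(customers, k=2)
print(labels)
```

The algorithm returns only cluster numbers. Deciding that one cluster means "occasional shoppers" is a human interpretation, which is one reason unsupervised results can be hard to evaluate.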

Reinforcement Learning

Reinforcement learning is a type of machine learning in which an AI agent learns how to act in an environment through trial and error. The agent receives rewards for desirable actions and penalties for undesirable ones, and it learns to maximize its cumulative reward over time.

- Example: Game playing – an AI learns to play chess or Go by playing against itself, receiving rewards for winning and penalties for losing. Over many games, it learns strong strategies.

- Effective for complex, sequential tasks where the best action at each step is not known in advance but outcomes can still be scored.

- Can be computationally intensive, requiring significant processing power and time.

- Requires careful design of the reward function to ensure the AI learns the desired behavior; poorly designed reward functions can lead to unexpected or undesirable outcomes.

- Applications include robotics, autonomous driving, and resource management.
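The trial-and-error loop can be sketched with tabular Q-learning, one classic reinforcement learning algorithm. The setting is a hypothetical five-state corridor where the only reward is 1 for reaching the right-hand end. The environment, the hyperparameters (`alpha`, `gamma`, `epsilon`), and the episode counts are illustrative assumptions, and the whole setup is far simpler than chess or Go.

```python
import random

N_STATES = 5        # hypothetical corridor: states 0..4, state 4 is the goal
ACTIONS = (-1, +1)  # move left or move right

def step(state, action):
    """Hypothetical environment: reward 1 for reaching the goal, 0 otherwise."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done

def q_learning(episodes=1000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}

    def greedy(state):
        best = max(q[(state, a)] for a in ACTIONS)
        return rng.choice([a for a in ACTIONS if q[(state, a)] == best])  # break ties randomly

    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            # Epsilon-greedy: usually exploit current estimates, sometimes explore at random.
            action = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(state)
            next_state, reward, done = step(state, action)
            # Move Q(s, a) toward reward + discounted value of the best next action.
            target = reward if done else reward + gamma * max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = next_state
            if done:
                break
    return q

q = q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}
print(policy)
```

After training, the greedy policy should move right (+1) in every non-goal state. The reward function is the agent's only signal, so it alone determines what the agent learns. For example, if the reward paid out for each step taken instead of for reaching the goal, the agent would learn to avoid the goal and keep wandering, since finishing would end the stream of rewards.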

Bias and Fairness in AI Learning

A significant challenge in AI learning is the potential for bias. AI systems can inherit biases present in their training data, leading to unfair or discriminatory outcomes that fall disproportionately on particular groups.

- Bias Amplification: AI systems can amplify existing biases in the data, leading to significantly skewed results.

- Mitigating Bias: Careful data curation, algorithmic design choices, and the use of diverse datasets are crucial for mitigating bias.

- The Importance of Diverse Datasets: Representing all relevant demographics and perspectives in training data is essential for fairness.

- Ethical Considerations: Developers must actively consider the ethical implications of their AI systems and strive to create fair and equitable outcomes.
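One common way to check for such disparities is to compare a model's behavior across groups. This sketch computes each group's selection rate (the share of positive predictions) and accuracy, plus the demographic parity gap, which is the largest difference in selection rates between groups. The loan-approval data and group names are hypothetical. Demographic parity is only one of several fairness metrics, these metrics can conflict with one another, and the appropriate choice depends on the application.

```python
from collections import defaultdict

def group_rates(predictions, labels, groups):
    """Per-group selection rate and accuracy for binary predictions (1 = positive)."""
    stats = defaultdict(lambda: {"n": 0, "positive": 0, "correct": 0})
    for pred, label, group in zip(predictions, labels, groups):
        s = stats[group]
        s["n"] += 1
        s["positive"] += pred
        s["correct"] += int(pred == label)
    return {
        g: {"selection_rate": s["positive"] / s["n"], "accuracy": s["correct"] / s["n"]}
        for g, s in stats.items()
    }

def demographic_parity_gap(rates):
    """Largest difference in selection rate between any two groups (0 means parity)."""
    selection = [r["selection_rate"] for r in rates.values()]
    return max(selection) - min(selection)

# Hypothetical audit data for a loan-approval model.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
labels      = [1, 1, 0, 0, 1, 1, 0, 0]
groups      = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = group_rates(predictions, labels, groups)
print(rates)
print(demographic_parity_gap(rates))  # → 0.5
```

In this toy data, both groups get 75% accuracy, yet group A is approved three times as often as group B. This shows why overall accuracy alone can hide unequal treatment.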

Transparency and Explainability in AI

Understanding how an AI arrives at its decisions is crucial for trust and accountability. "Black box" models, where the decision-making process is opaque, hinder this understanding and raise concerns about fairness and potential misuse.

- Challenges in Interpreting Complex Models: Many advanced AI models, such as deep neural networks, are notoriously difficult to interpret.

- Techniques for Increasing Transparency: Researchers are developing techniques to make AI models more transparent, such as feature importance analysis and visualization methods.

- The Importance of Explainability for Regulatory Compliance and Public Trust: When people can see why a system reached a decision, they are better placed to trust it, to challenge it, and to verify that it meets regulatory requirements.
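Permutation importance is one model-agnostic form of feature importance analysis. It shuffles one feature's values, re-measures accuracy, and treats the resulting drop as that feature's importance. The sketch below uses a hypothetical stand-in model that looks only at feature 0, so the expected result is easy to reason about. Libraries such as scikit-learn offer a fuller version (`sklearn.inspection.permutation_importance`).

```python
import random

def model(features):
    """Hypothetical trained model: approves when feature 0 exceeds 0.5 and ignores feature 1."""
    return int(features[0] > 0.5)

def accuracy(rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(rows, labels, n_repeats=10, seed=0):
    """Average accuracy drop when each feature column is shuffled on its own."""
    rng = random.Random(seed)
    baseline = accuracy(rows, labels)
    importances = []
    for col in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [r[col] for r in rows]
            rng.shuffle(column)
            # Rebuild each row with only this column replaced by the shuffled values.
            shuffled = [r[:col] + (v,) + r[col + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances

# Hypothetical data: 200 rows of two random features, labeled by the model itself.
data_rng = random.Random(42)
rows = [(data_rng.random(), data_rng.random()) for _ in range(200)]
labels = [model(r) for r in rows]
print(permutation_importance(rows, labels))
```

Feature 1 should score exactly 0 because the model never reads it. Shuffling feature 0, by contrast, breaks the model's predictions for roughly half the rows. On a real model, such scores describe what the model relies on, not why, so they complement rather than replace deeper interpretability work.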

The Future of Responsible AI Learning

The responsible development and deployment of AI require a multi-faceted approach, encompassing ethical guidelines, regulations, and ongoing monitoring.

- Collaboration: Collaboration between researchers, policymakers, and industry stakeholders is essential for establishing ethical guidelines and regulations.

- Public Engagement: Fostering public engagement in discussions about AI ethics and governance is crucial for ensuring that AI development aligns with societal values.

- Continuous Monitoring and Evaluation: AI systems need continuous monitoring and evaluation to detect and address biases, inaccuracies, and other potential problems.
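Continuous monitoring can start simply: track accuracy over a rolling window of recent predictions once ground-truth outcomes arrive. The class below is a hypothetical sketch, not a production monitoring system. The name `AccuracyMonitor`, its window size, threshold, and minimum sample count are all illustrative choices.

```python
from collections import deque

class AccuracyMonitor:
    """Hypothetical monitor: rolling accuracy over recent predictions with an alert threshold."""

    def __init__(self, window_size=100, threshold=0.9, min_samples=20):
        self.results = deque(maxlen=window_size)  # oldest results drop off automatically
        self.threshold = threshold
        self.min_samples = min_samples

    def record(self, prediction, actual):
        """Store whether a prediction matched the outcome observed later."""
        self.results.append(prediction == actual)

    def accuracy(self):
        return sum(self.results) / len(self.results) if self.results else None

    def needs_review(self):
        """Flag the model once enough results exist and rolling accuracy falls below threshold."""
        if len(self.results) < self.min_samples:
            return False
        return self.accuracy() < self.threshold

monitor = AccuracyMonitor(window_size=50, threshold=0.9)
for _ in range(50):
    monitor.record(1, 1)               # the model is right on every case
print(monitor.needs_review())          # → False
for _ in range(10):
    monitor.record(1, 0)               # errors start appearing, e.g. after the data shifts
print(monitor.accuracy(), monitor.needs_review())  # → 0.8 True
```

Running one such monitor per demographic group would also surface emerging disparities between groups, not just overall declines in accuracy.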

Conclusion

Understanding how AI learns is not just a technical matter; it is essential to building a future where AI benefits all of humanity. From supervised learning to reinforcement learning, each approach presents its own challenges and opportunities regarding bias, transparency, and ethics. By adopting responsible development practices, including careful data curation, explainable models, and continuous monitoring, we can harness the transformative power of AI while mitigating its risks. That requires ongoing learning and a commitment to ethical practice at every stage of the process, so that AI empowers us all.

Featured Posts

-

Upset In The Desert Griekspoor Defeats Top Seeded Zverev At Indian Wells

May 31, 2025

Upset In The Desert Griekspoor Defeats Top Seeded Zverev At Indian Wells

May 31, 2025 -

Creating The Good Life Practical Steps For A Fulfilling Life

May 31, 2025

Creating The Good Life Practical Steps For A Fulfilling Life

May 31, 2025 -

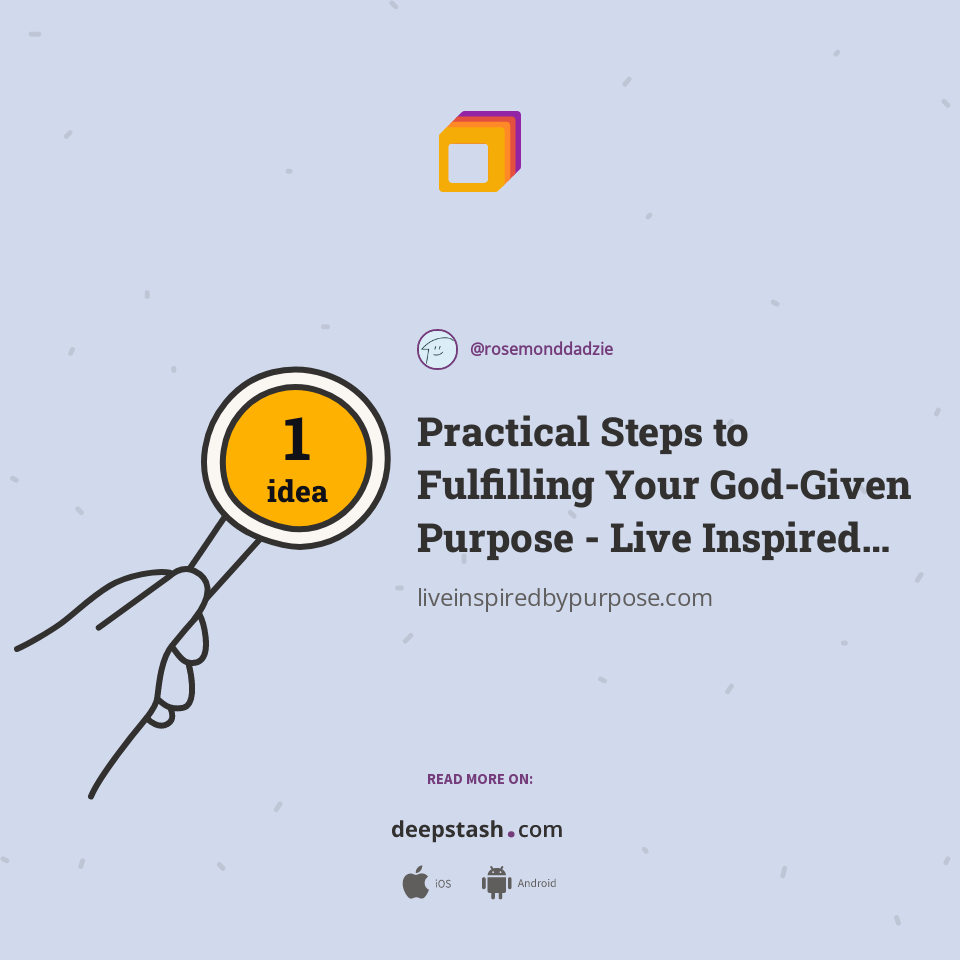

Seattle Weather Soggy Skies Continue Into The Weekend

May 31, 2025

Seattle Weather Soggy Skies Continue Into The Weekend

May 31, 2025 -

Global Cities Under Siege The Impact Of Dangerous Climate Whiplash

May 31, 2025

Global Cities Under Siege The Impact Of Dangerous Climate Whiplash

May 31, 2025 -

Detroit Tigers Vs Minnesota Twins Friday Night Road Trip Opener

May 31, 2025

Detroit Tigers Vs Minnesota Twins Friday Night Road Trip Opener

May 31, 2025