Understanding AI's Learning Limitations: Towards Ethical AI Development

Data Bias and its Impact on AI Learning

The adage "garbage in, garbage out" is particularly relevant in the context of AI. Data bias, the presence of systematic and repeatable errors in a dataset that can lead to unfair or discriminatory outcomes, is a significant challenge. The problem of biased datasets arises from various sources, reflecting existing societal inequalities and biases embedded within the data used to train AI models.

The Problem of Biased Datasets

AI systems learn from the data they are trained on. If that data reflects historical biases – for example, gender bias in hiring practices or racial bias in criminal justice data – the AI system will likely perpetuate and even amplify these biases. This can lead to discriminatory outcomes, such as loan applications being unfairly rejected or facial recognition systems misidentifying individuals based on their race or gender.

- Algorithmic bias stemming from historical inequalities is a critical concern. AI systems trained on biased data will learn to replicate and reinforce these inequalities.

- Identifying and mitigating bias in large datasets is extremely challenging, requiring sophisticated techniques and careful analysis.

- Creating truly diverse and representative datasets is essential for building fair and unbiased AI systems. This means actively seeking out and incorporating data from underrepresented groups.

- Techniques like data augmentation (adding synthetic data to balance representation) and re-weighting (adjusting the importance of different data points) can help correct imbalances in a dataset once they have been identified.
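As a rough illustration of re-weighting, the sketch below assigns each training example a weight inversely proportional to the size of its group, so that every group carries the same total weight. The function name `balanced_group_weights` and the group labels are hypothetical, chosen only for this example; real projects often need more careful schemes than simple inverse frequency.

```python
from collections import Counter


def balanced_group_weights(groups):
    """Return one weight per sample so that every group has equal total weight."""
    counts = Counter(groups)
    n_samples = len(groups)
    n_groups = len(counts)
    # Inverse-frequency weighting: samples from rare groups get larger weights.
    return [n_samples / (n_groups * counts[g]) for g in groups]


# Hypothetical group labels for eight training rows (illustrative only).
groups = ["a", "a", "a", "a", "a", "a", "b", "b"]
weights = balanced_group_weights(groups)

# Total weight per group after re-weighting.
total_a = sum(w for w, g in zip(weights, groups) if g == "a")
total_b = sum(w for w, g in zip(weights, groups) if g == "b")
print(total_a, total_b)
```

Weights like these can typically be passed to a training routine that accepts per-sample weights, so the underrepresented group is not drowned out by the majority.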

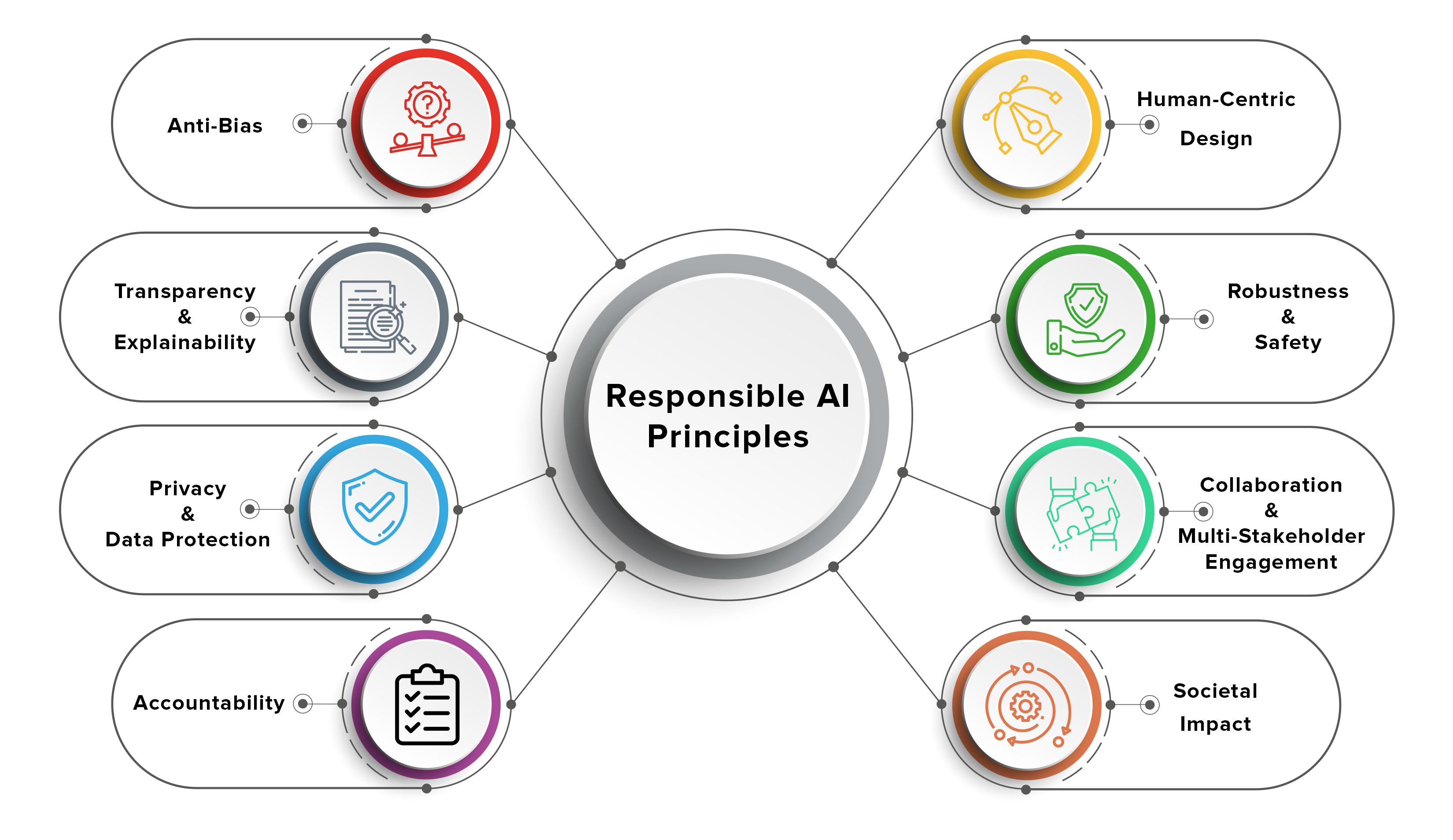

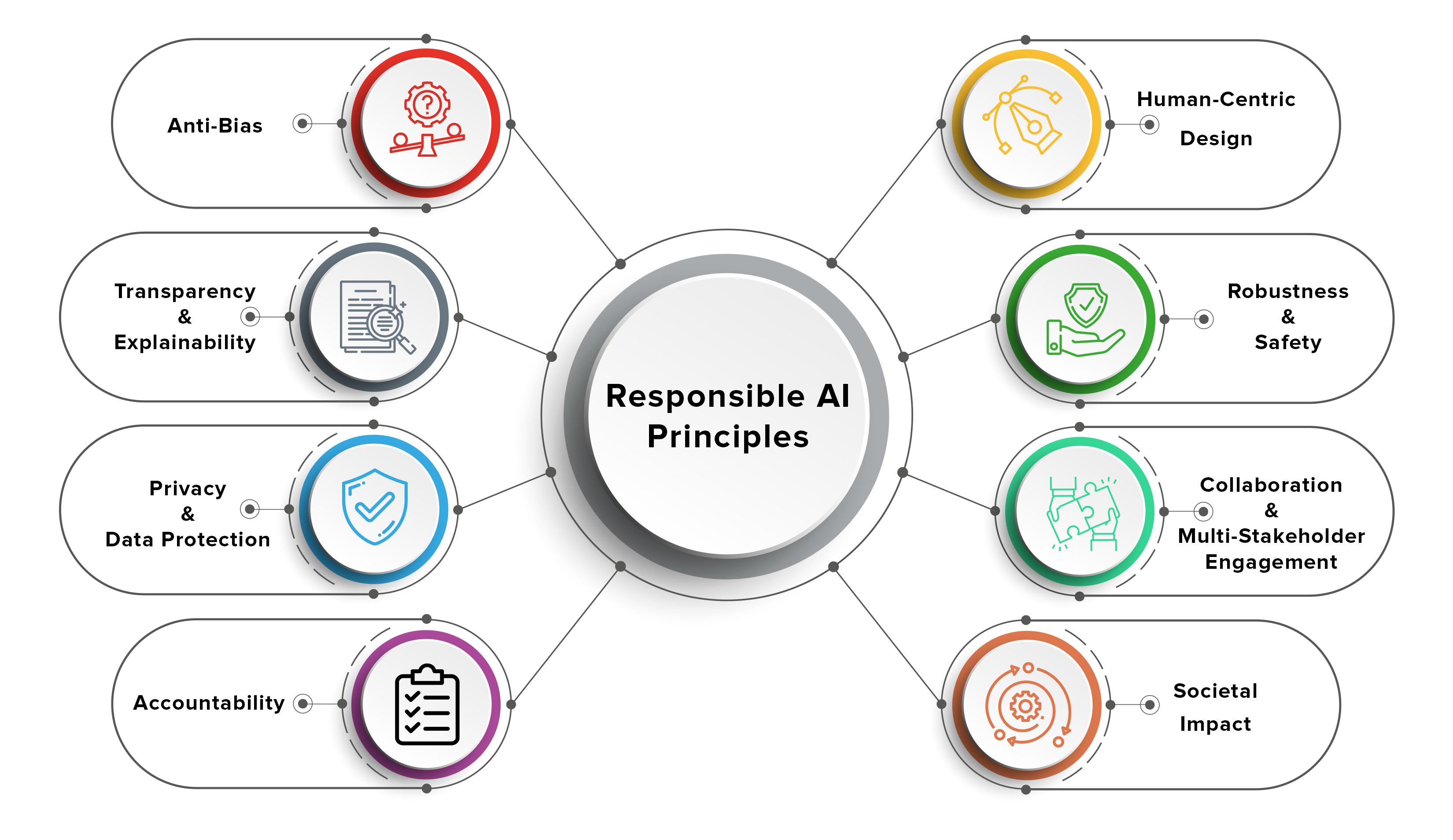

Addressing data bias is crucial for achieving AI fairness and building trustworthy AI systems. Understanding and mitigating representation bias is a fundamental step towards ethical AI development.
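One simple way to check a system's decisions for the kind of disparity described above is to compare approval rates across groups, a measure often called the demographic parity gap. The sketch below is a minimal version under stated assumptions: the function names, the binary decisions, and the group labels are all hypothetical, and a single metric like this cannot establish fairness on its own.

```python
def selection_rates(decisions, groups):
    """Fraction of positive decisions (1 = approved) within each group."""
    totals, positives = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if decision else 0)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(decisions, groups):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical loan decisions for two groups (illustrative data only).
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(decisions, groups)
gap = demographic_parity_gap(decisions, groups)
print(rates, gap)
```

A gap near zero means the groups are approved at similar rates; a large gap is a signal to investigate the data and the model, not proof of discrimination by itself.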

The Limitations of Current Machine Learning Models

Beyond data bias, inherent limitations within current machine learning models pose further challenges to ethical AI development.

Explainability and Interpretability

Many advanced AI models, particularly deep learning models, operate as "black boxes." This means it's difficult, if not impossible, to understand precisely how they arrive at their decisions. This lack of explainability and interpretability hinders trust and accountability.

- Interpreting the decision-making process of complex deep learning models is a major challenge. Understanding why an AI system made a particular decision is vital for building trust and ensuring accountability.

- The development of more explainable AI (XAI) is critical for increasing transparency and enabling better oversight.

- Techniques like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) are widely used to improve model interpretability, but they have limitations: both produce approximate, after-the-fact explanations rather than a faithful account of the model's internal computation.
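To make the idea behind Shapley-based explanations more concrete, the sketch below computes exact Shapley values for a tiny hand-written model by averaging each feature's marginal contribution over every possible feature ordering. This is not the SHAP library's implementation; `shapley_values`, `toy_model`, and the all-zero baseline are assumptions made purely for illustration. The brute-force enumeration grows factorially with the number of features, which is one reason practical tools rely on approximations.

```python
from itertools import permutations


def shapley_values(predict, instance, baseline):
    """Exact Shapley values: average marginal contribution over all orderings."""
    n = len(instance)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)  # start with every feature at its baseline value
        previous_output = predict(current)
        for i in order:
            current[i] = instance[i]  # switch feature i to its actual value
            output = predict(current)
            totals[i] += output - previous_output
            previous_output = output
    return [t / len(orderings) for t in totals]


def toy_model(x):
    # Hypothetical scoring function with an interaction between x[0] and x[2].
    return 2.0 * x[0] + 1.0 * x[1] + x[0] * x[2]


instance = [1.0, 3.0, 2.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, instance, baseline)
print(phi)
```

The attributions always sum to the difference between the model's output on the instance and on the baseline, and the interaction term is split evenly between the two features involved.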

Generalization and Overfitting

AI models can sometimes overfit to their training data, meaning they perform exceptionally well on the data they've seen during training but poorly on new, unseen data. This lack of generalization poses a serious risk in real-world applications.

- The risk of AI models performing poorly in real-world scenarios due to overfitting is significant. A model that performs well in a controlled environment might fail miserably when confronted with the complexities of the real world.

- Regularization techniques, which add a penalty for model complexity to the training objective (for example, L1 or L2 penalties on the model's weights), can help prevent overfitting.

- Robust testing and validation procedures using diverse and representative datasets are crucial for assessing the generalization capabilities of AI models and ensuring their reliability. Model robustness is essential for safe and ethical deployment.
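The interplay between regularization and validation can be sketched with a one-parameter model. The example below fits a slope with and without an L2 penalty and compares the error on training data against the error on held-out data. The helper names and the tiny datasets are hypothetical, and the numbers are hand-picked so that the penalty helps; in practice the penalty strength would itself be chosen using validation data.

```python
def fit_ridge_slope(xs, ys, penalty):
    """Slope w of y ~ w * x minimizing squared error plus penalty * w**2."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + penalty)


def mean_squared_error(xs, ys, w):
    """Average squared prediction error of the model y = w * x."""
    return sum((y - w * x) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hand-picked toy data: training labels are noisy, held-out data follows y = x.
train_x, train_y = [1.0, 2.0], [2.0, 3.0]
val_x, val_y = [3.0, 4.0], [3.0, 4.0]

w_plain = fit_ridge_slope(train_x, train_y, penalty=0.0)
w_penalized = fit_ridge_slope(train_x, train_y, penalty=3.0)

for name, w in [("no penalty", w_plain), ("L2 penalty", w_penalized)]:
    train_mse = mean_squared_error(train_x, train_y, w)
    val_mse = mean_squared_error(val_x, val_y, w)
    print(name, "slope:", w, "train MSE:", train_mse, "validation MSE:", val_mse)
```

The unpenalized fit achieves the lower training error but the higher held-out error, which is the pattern that signals overfitting; only the held-out comparison reveals it.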

Addressing these limitations of deep learning and other machine learning models is crucial for responsible AI development.

The Ethical Implications of AI Learning Limitations

The limitations of AI learning discussed above have significant ethical implications.

Responsibility and Accountability

When AI systems make errors or cause harm – potentially due to data bias or a lack of explainability – assigning responsibility becomes challenging.

- Clear guidelines and regulations for AI development and deployment are urgently needed to address issues of AI responsibility and accountability.

- Human oversight remains crucial in AI systems, particularly in high-stakes applications where errors can have serious consequences.

- Robust ethical frameworks are necessary to guide the development and use of AI, ensuring that these powerful technologies are used responsibly and ethically.

Transparency and User Trust

Building user trust in AI systems is paramount. Transparency about how AI systems work and their limitations is essential for fostering trust.

- Clear explanations of how AI systems function and their limitations are vital for building user trust and promoting responsible use.

- User education and awareness are crucial for empowering individuals to critically assess the capabilities and limitations of AI systems.

- Explainable AI (XAI) plays a key role in fostering trust by providing users with insights into the decision-making processes of AI systems. AI transparency is crucial for ethical AI development.

By understanding the interconnectedness of AI ethics, AI responsibility, AI accountability, and AI transparency, we can build more robust and ethical AI systems.

Conclusion

Understanding AI's learning limitations – including data bias, the limitations of current models in terms of explainability and generalization, and the subsequent ethical implications – is crucial for responsible AI development. The challenges of algorithmic bias, lack of interpretability, and the need for robust generalization capabilities all highlight the need for careful consideration of ethical principles throughout the entire AI lifecycle. By understanding AI's learning limitations, we can pave the way for a more responsible and ethical future with AI. Continue exploring resources on ethical AI, responsible AI, and mitigating AI limitations to contribute to a safer and more equitable world shaped by AI.
