Turning "Poop" Into Podcast Gold: How AI Digests Repetitive Documents

Identifying the "Poop": Recognizing Repetitive Data in Your Workflow

Before we can transform data into gold, we need to identify the "poop": the repetitive data clogging up your workflow. It typically comes from a handful of common sources:

Common Sources of Repetitive Data:

- Meeting Transcripts: Long transcripts of recorded meetings often contain redundant information and tangential discussions.

- Research Papers: Academic papers frequently repeat established facts and methodologies.

- Financial Reports: Quarterly and annual reports often repeat the same figures across different sections.

- Legal Documents: Contracts, briefs, and legal filings frequently include similar clauses and arguments.

- Customer Service Logs: Hundreds or thousands of logs often describe the same complaints and issues again and again.

These documents often contain significant redundancy, forcing you to spend valuable time sifting through irrelevant information to extract the crucial details.
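One rough way to gauge how much "poop" a document contains is to count how many of its sentences repeat earlier ones. The Python sketch below assumes naive sentence splitting on punctuation and exact matching after lowercasing; the function names are hypothetical, and paraphrased repetition (common in real transcripts) would need a fuzzier comparison.

```python
import re
from collections import Counter


def split_sentences(text: str) -> list[str]:
    """Naively split text wherever . ! or ? is followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def normalize(sentence: str) -> str:
    """Lowercase and drop punctuation so trivially different copies compare equal."""
    return re.sub(r"[^a-z0-9 ]+", "", sentence.lower()).strip()


def redundancy_ratio(text: str) -> float:
    """Fraction of sentences that repeat an earlier sentence after normalization."""
    sentences = [normalize(s) for s in split_sentences(text)]
    if not sentences:
        return 0.0
    counts = Counter(sentences)
    # Every copy beyond the first occurrence counts as redundant.
    repeats = sum(c - 1 for c in counts.values())
    return repeats / len(sentences)


transcript = (
    "Let's review the Q3 budget. The budget is over by 10%. "
    "Sorry, can everyone hear me? Let's review the Q3 budget. "
    "The budget is over by 10%."
)
print(redundancy_ratio(transcript))  # 2 of 5 sentences are repeats -> 0.4
```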

The Cost of Repetitive Data:

The cost of processing repetitive data goes far beyond just wasted time. It directly impacts productivity, leading to:

- Reduced Efficiency: Employees spend hours on tasks that could be automated.

- Increased Risk of Errors: Manually processing large volumes of data increases the chance of human error.

- Missed Opportunities: Time spent on repetitive tasks is time not spent on strategic initiatives.

Some workplace surveys estimate that employees spend 5 to 10 hours per week on tasks related to processing repetitive data, a significant loss of productivity and potential revenue.

AI as the Digestive System: How AI Processes Repetitive Documents

AI acts as a powerful digestive system, breaking complex, repetitive documents down into easily digestible nuggets of information. It does this primarily through two technologies: natural language processing and machine learning.

Natural Language Processing (NLP): The Core Technology

NLP is the cornerstone of AI's ability to understand and analyze text data. It employs various techniques, including:

- Text Summarization: Condensing lengthy documents into concise summaries.

- Keyword Extraction: Identifying the most important terms and concepts within a document.

- Topic Modeling: Discovering underlying themes and topics within a dataset.

These NLP techniques allow AI to effectively sift through mountains of text, identifying key information and filtering out the noise.
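To make two of these techniques concrete, here is a minimal, dependency-free Python sketch: keyword extraction via TF-IDF (terms that are frequent in one document but rare across the collection score highest) and a frequency-based extractive summary that keeps the sentences whose words occur most often. The stop-word list, sample texts, and function names are illustrative assumptions, and commercial summarization services generally rely on neural models rather than word counts.

```python
import math
import re
from collections import Counter

# A tiny illustrative stop-word list; real systems use much larger ones.
STOP_WORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "is", "are", "at",
              "was", "for", "on", "we", "it", "this", "that", "with", "be", "by"}


def tokenize(text: str) -> list[str]:
    """Lowercase words with stop words removed."""
    return [w for w in re.findall(r"[a-z0-9']+", text.lower()) if w not in STOP_WORDS]


def tfidf_keywords(docs: list[str], doc_index: int, top_n: int = 3) -> list[str]:
    """Rank terms in docs[doc_index] by TF-IDF against the whole collection."""
    tokenized = [tokenize(d) for d in docs]
    doc_freq = Counter(term for toks in tokenized for term in set(toks))
    target = tokenized[doc_index]
    tf = Counter(target)
    scores = {
        term: (count / len(target)) * math.log(len(docs) / doc_freq[term])
        for term, count in tf.items()
    }
    # Highest score first; ties broken alphabetically for a stable order.
    return sorted(scores, key=lambda t: (-scores[t], t))[:top_n]


def extractive_summary(text: str, max_sentences: int = 2) -> str:
    """Keep the sentences whose words are, on average, most frequent in the text."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    freq = Counter(tokenize(text))

    def score(sentence: str) -> float:
        toks = tokenize(sentence)
        return sum(freq[t] for t in toks) / len(toks) if toks else 0.0

    best = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    # Re-emit the chosen sentences in their original order.
    return " ".join(sentences[i] for i in sorted(best[:max_sentences]))


docs = [
    "Invoice overdue. Customer asks about the overdue invoice.",
    "Customer asks for a password reset.",
    "Customer asks about shipping delays.",
]
print(tfidf_keywords(docs, 0, top_n=2))  # ['invoice', 'overdue']

text = "Payment failed at checkout. Lunch was good. Payment failed again at checkout today."
print(extractive_summary(text, max_sentences=1))  # Payment failed at checkout.
```

Note how "customer" and "asks" score zero for the first document: they appear in every document, so TF-IDF treats them as noise rather than keywords.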

Machine Learning for Pattern Recognition

Machine learning algorithms play a crucial role in identifying patterns and relationships within the data. By analyzing large datasets, these algorithms learn to recognize repetitive information, enabling them to:

- Identify Redundancies: Pinpointing duplicated information and eliminating unnecessary data.

- Detect Similar Documents: Grouping similar documents together for easier analysis.

- Predict Future Trends: Identifying patterns that can predict future outcomes based on historical data.

Sequence models such as recurrent neural networks (RNNs), and the transformer-based models that have largely superseded them, are particularly well suited to text analysis and pattern recognition.
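Identifying redundancies and detecting similar documents does not always require a trained model. As a simpler stand-in, the Python sketch below compares documents by the overlap (Jaccard similarity) of their three-word "shingles" and reports pairs above a threshold. The shingle size, the 0.5 threshold, and the sample documents are assumptions chosen for illustration; large collections typically use approximations such as MinHash rather than comparing every pair.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of k-word windows ("shingles") in the text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Intersection size divided by union size (0.0 when both sets are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def near_duplicates(docs: dict[str, str], threshold: float = 0.5,
                    k: int = 3) -> list[tuple[str, str, float]]:
    """List document pairs whose shingle sets overlap by at least `threshold`."""
    sigs = {name: shingles(text, k) for name, text in docs.items()}
    pairs = []
    for a, b in combinations(sorted(sigs), 2):
        score = jaccard(sigs[a], sigs[b])
        if score >= threshold:
            pairs.append((a, b, round(score, 2)))
    return pairs


docs = {
    "contract_a": "The tenant shall pay rent on the first day of each month.",
    "contract_b": "The tenant shall pay rent on the first day of every month.",
    "memo": "Quarterly revenue grew in the northern region.",
}
print(near_duplicates(docs))  # [('contract_a', 'contract_b', 0.67)]
```

The two contract clauses share 8 of their 12 distinct shingles, so they are flagged as near-duplicates, while the unrelated memo shares none.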

AI Tools and Their Applications

Several AI tools are available to help you process different types of repetitive data:

- Summarization APIs: Services like [link to a summarization API] can generate concise summaries of lengthy documents, freeing up your time.

- Transcription Services with AI-Powered Summarization: Platforms like [link to a transcription service with AI summarization] offer transcription and summarization capabilities, streamlining meeting analysis.

- Data Extraction Tools: Tools like [link to a data extraction tool] can automatically extract specific data points from various document types, reducing manual data entry.
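Data extraction tools vary widely in how they work. A minimal rule-based version of the idea is sketched below in Python: regular expressions pull fields out of a made-up invoice format and convert them to typed values. The field names, patterns, and invoice layout are hypothetical; dedicated tools may use trained models instead, and each real document type would need its own patterns.

```python
import re
from datetime import date
from decimal import Decimal

# Hypothetical patterns for a made-up invoice layout.
PATTERNS = {
    "invoice_number": re.compile(r"Invoice\s*(?:No\.|#)\s*([A-Z0-9-]+)", re.IGNORECASE),
    "date": re.compile(r"Date:\s*(\d{4}-\d{2}-\d{2})"),
    "total": re.compile(r"Total:\s*\$?([\d,]+\.\d{2})"),
}


def extract_fields(text: str) -> dict:
    """Pull each field with its regex; fields that are not found come back as None."""
    record = {}
    for field, pattern in PATTERNS.items():
        match = pattern.search(text)
        record[field] = match.group(1) if match else None
    # Convert raw strings into typed values where present.
    if record["date"]:
        record["date"] = date.fromisoformat(record["date"])
    if record["total"]:
        # Decimal avoids floating-point rounding on currency amounts.
        record["total"] = Decimal(record["total"].replace(",", ""))
    return record


invoice = """ACME Corp
Invoice #INV-2024-001
Date: 2024-03-15
Total: $1,250.00"""

print(extract_fields(invoice))
# {'invoice_number': 'INV-2024-001', 'date': datetime.date(2024, 3, 15), 'total': Decimal('1250.00')}
```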

From "Poop" to Podcast Gold: Transforming Data into Actionable Insights

Once AI has processed your "data poop," what remains is valuable, actionable information: your "podcast gold." This transformed data can be used in several ways:

Creating Concise Summaries and Reports

AI-generated summaries enable you to quickly grasp the key information in lengthy documents. This is particularly helpful for creating:

- Executive Summaries: Providing a high-level overview of complex reports for busy executives.

- Briefing Papers: Summarizing key findings and recommendations for stakeholders.

- Meeting Notes: Creating concise summaries of long meetings, highlighting key decisions and action items.
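As a rough illustration of how meeting notes can surface decisions and action items, the Python sketch below sorts transcript lines by cue phrases. The cue lists and the "Speaker: text" format are assumptions for illustration only; AI meeting tools typically rely on language models rather than fixed keyword lists, which miss anything phrased differently.

```python
# Hypothetical cue phrases; real meetings phrase decisions in many other ways.
DECISION_CUES = ("we decided", "we agreed", "decision:")
ACTION_CUES = ("action item", "will follow up", "to-do")


def meeting_notes(transcript: str) -> dict[str, list[str]]:
    """Sort transcript lines into decisions and action items by cue phrase."""
    notes: dict[str, list[str]] = {"decisions": [], "action_items": []}
    for line in transcript.splitlines():
        text = line.strip()
        lowered = text.lower()
        # A line matching a decision cue is not also filed as an action item.
        if any(cue in lowered for cue in DECISION_CUES):
            notes["decisions"].append(text)
        elif any(cue in lowered for cue in ACTION_CUES):
            notes["action_items"].append(text)
    return notes


transcript = """Ana: Can everyone hear me?
Ben: We decided to move the launch to June.
Ana: Action item: Ben will update the roadmap.
Ben: Sounds good."""

for section, lines in meeting_notes(transcript).items():
    print(section, lines)
# decisions ['Ben: We decided to move the launch to June.']
# action_items ['Ana: Action item: Ben will update the roadmap.']
```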

Identifying Key Trends and Patterns

AI can reveal hidden trends and patterns within your data, providing invaluable insights for decision-making. For example:

- Analyzing customer service logs to identify common complaints and improve customer satisfaction.

- Analyzing financial reports to predict future revenue trends.

- Analyzing research papers to identify breakthroughs and emerging trends in a specific field.
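For the customer service example, a simple starting point is to tag each log with a complaint category and count the results. In this Python sketch the keyword-to-category map and sample logs are hypothetical assumptions; a production system might cluster the logs or train a classifier instead.

```python
from collections import Counter

# Hypothetical keyword-to-category map; categories are checked in this order.
CATEGORIES = {
    "billing": ("refund", "charge", "invoice"),
    "shipping": ("late", "delivery", "tracking"),
    "login": ("password", "log in", "locked out"),
}


def categorize(log: str) -> str:
    """Return the first category whose keywords appear in the log, else 'other'."""
    lowered = log.lower()
    for category, keywords in CATEGORIES.items():
        if any(k in lowered for k in keywords):
            return category
    return "other"


def top_complaints(logs: list[str], n: int = 3) -> list[tuple[str, int]]:
    """Count logs per category and return the n most common."""
    return Counter(categorize(log) for log in logs).most_common(n)


logs = [
    "I was charged twice, please refund me.",
    "My delivery is late again.",
    "Can't log in after the update.",
    "Where is my refund?",
    "Tracking number doesn't work.",
    "Refund still not processed.",
]
print(top_complaints(logs, n=2))  # [('billing', 3), ('shipping', 2)]
```

Plain substring matching is crude (for instance, "late" also matches "chocolate"), so counts like these are best treated as a first pass rather than a final answer.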

Automating Data Entry and Information Extraction

AI can automate tedious tasks, freeing up your time for more strategic work. This leads to:

- Increased Efficiency: Automating data entry and information extraction from repetitive documents.

- Improved Accuracy: Reducing the risk of human error in data processing.

- Cost Savings: Lowering the cost of manual data entry and processing.

Conclusion

By leveraging AI, you can process repetitive data (the "poop") efficiently, saving precious time and resources. Natural Language Processing (NLP) and machine learning are the key technologies behind this transformation, enabling concise summaries, the identification of key trends, and the automation of tedious tasks. The end result? Valuable, actionable insights: your "podcast gold." Explore the AI tools mentioned above and start transforming your workflow today!

Featured Posts

-

Nederlandse Bankieren Vereenvoudigd Een Praktische Gids Voor Tikkie

May 22, 2025

Nederlandse Bankieren Vereenvoudigd Een Praktische Gids Voor Tikkie

May 22, 2025 -

Liverpool Juara Liga Inggris 2024 2025 Pelatih Pelatih Legendaris Di Balik Kesuksesan The Reds

May 22, 2025

Liverpool Juara Liga Inggris 2024 2025 Pelatih Pelatih Legendaris Di Balik Kesuksesan The Reds

May 22, 2025 -

Cest La Petite Italie De L Ouest Architecture Toscane Et Charme Inattendu

May 22, 2025

Cest La Petite Italie De L Ouest Architecture Toscane Et Charme Inattendu

May 22, 2025 -

Recent Allegations Against Blake Lively What We Know So Far

May 22, 2025

Recent Allegations Against Blake Lively What We Know So Far

May 22, 2025 -

Australian Foot Race Man Sets Record For Fastest Crossing

May 22, 2025

Australian Foot Race Man Sets Record For Fastest Crossing

May 22, 2025

Latest Posts

-

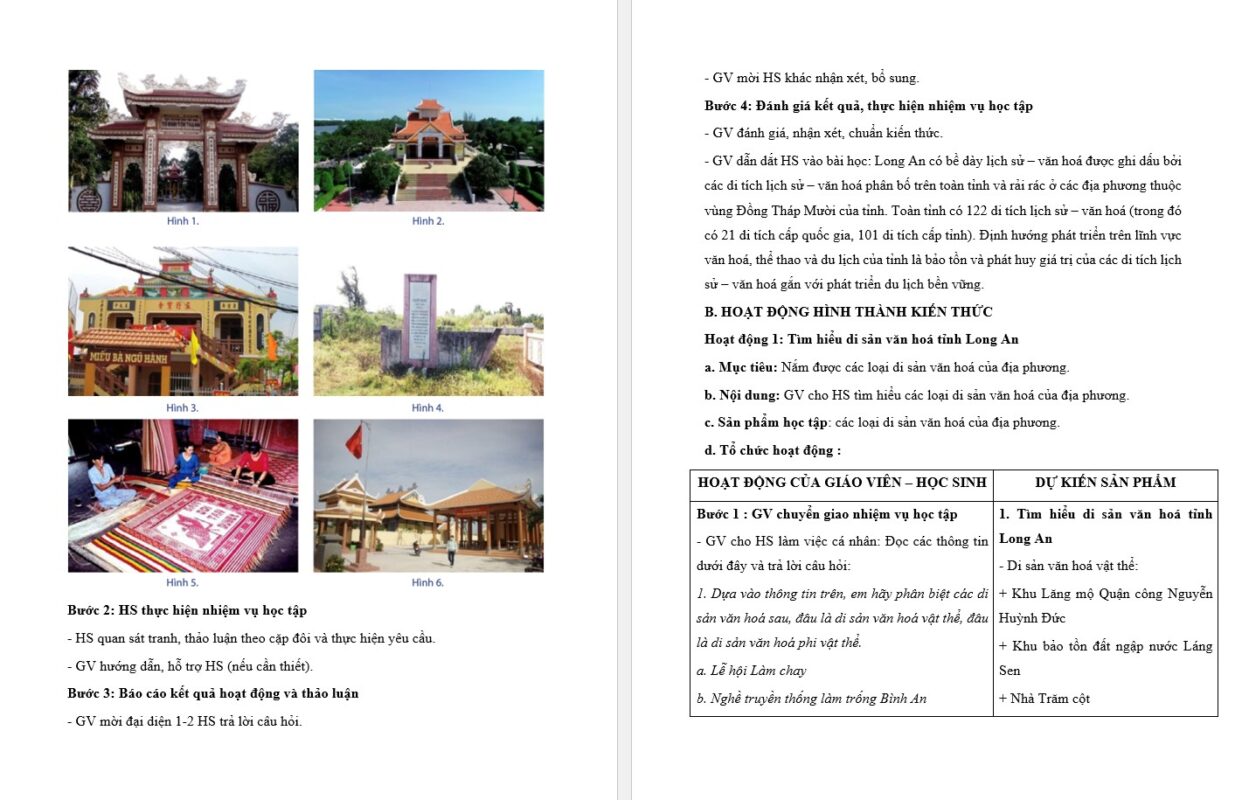

Phan Tich 7 Vi Tri Ket Noi Hap Dan Tp Hcm Long An

May 22, 2025

Phan Tich 7 Vi Tri Ket Noi Hap Dan Tp Hcm Long An

May 22, 2025 -

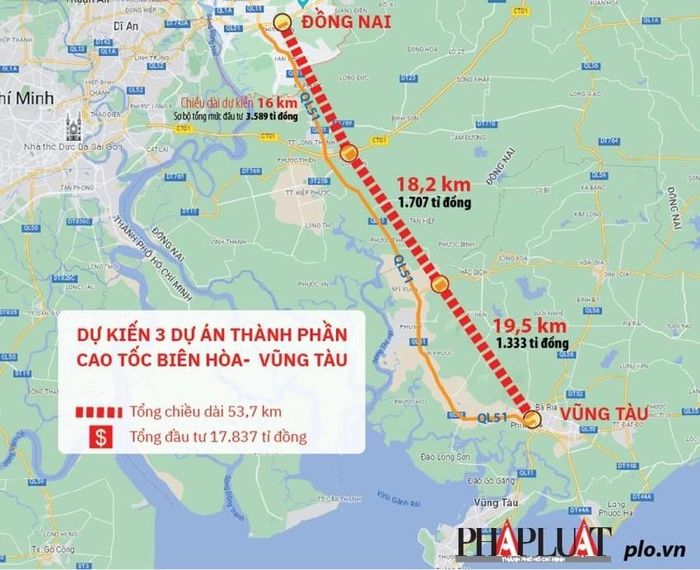

Cao Toc Bien Vung Tau Thong Xe Sap Hoan Thanh

May 22, 2025

Cao Toc Bien Vung Tau Thong Xe Sap Hoan Thanh

May 22, 2025 -

Dau Tu Ha Tang Giao Thong 7 Vi Tri Noi Tp Hcm Long An

May 22, 2025

Dau Tu Ha Tang Giao Thong 7 Vi Tri Noi Tp Hcm Long An

May 22, 2025 -

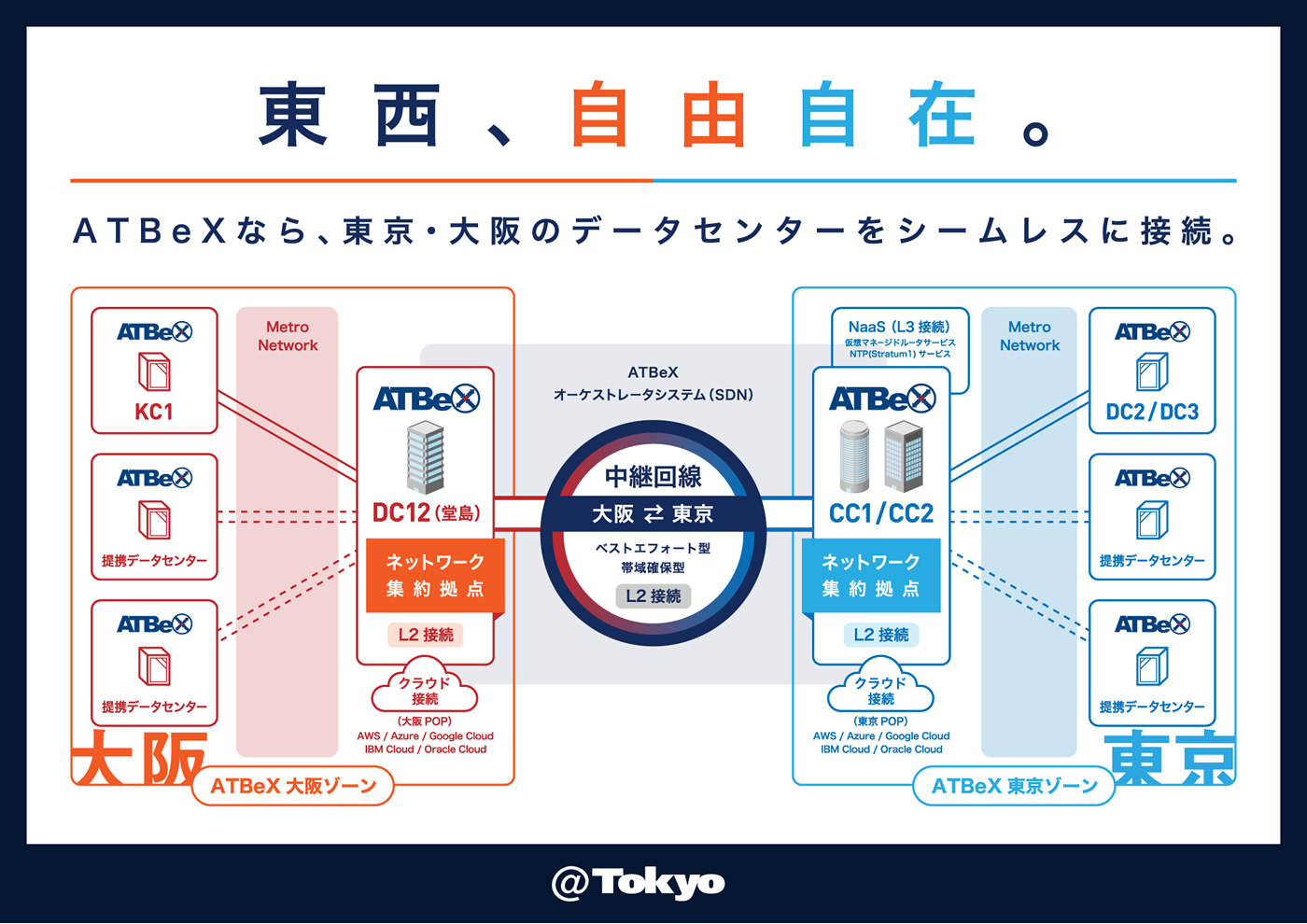

At Be X Ntt Multi Interconnect Ascii Jp

May 22, 2025

At Be X Ntt Multi Interconnect Ascii Jp

May 22, 2025 -

7 Tuyen Ket Noi Quan Trong Tp Hcm Long An Can Phat Trien

May 22, 2025

7 Tuyen Ket Noi Quan Trong Tp Hcm Long An Can Phat Trien

May 22, 2025