The Surveillance State And AI Therapy: Ethical Concerns And Risks

Data Privacy and Security in AI Therapy

The Collection and Use of Sensitive Patient Data

AI therapy platforms collect large amounts of personal and sensitive data, including conversations, emotional states, personal details, and even biometric data such as voice patterns and typing rhythms. This data is valuable for training AI algorithms and personalizing treatment, but its sensitivity demands stringent security measures. The potential for misuse is significant, so robust safeguards are crucial.

- Potential for data breaches and misuse: A data breach could expose highly sensitive personal information, leading to identity theft, financial loss, and severe emotional distress for patients.

- Lack of transparency regarding data storage and usage: Many AI therapy platforms lack transparency about how patient data is stored, used, and protected. This lack of transparency erodes trust and makes it difficult for patients to make informed decisions.

- Concerns about third-party access to data: Data sharing with third-party companies for research, marketing, or other purposes raises significant privacy concerns. Strict limitations and clear consent protocols are necessary.

- Difficulty in ensuring data anonymization and de-identification: Completely anonymizing and de-identifying sensitive mental health data is extremely challenging, leading to ongoing risks even with anonymization efforts.
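
To illustrate why removing names alone does not anonymize a dataset, the minimal sketch below counts how many records share the same combination of indirect attributes (often called quasi-identifiers). The smallest such group size is the "k" in k-anonymity, one common way to reason about re-identification risk. The records, field names, and the `smallest_group_size` helper are all hypothetical and made up for this example; they do not describe any real platform.

```python
from collections import Counter


def smallest_group_size(records, quasi_identifiers):
    """Return the size of the smallest group of records that share the
    same values for all the given quasi-identifier fields (the "k" in
    k-anonymity). Returns 0 for an empty dataset."""
    if not records:
        return 0
    groups = Counter(
        tuple(record[field] for field in quasi_identifiers)
        for record in records
    )
    return min(groups.values())


# Made-up records with names already removed (illustrative only).
records = [
    {"region": "A", "age_band": "30-39", "gender": "F", "topic": "anxiety"},
    {"region": "A", "age_band": "30-39", "gender": "F", "topic": "depression"},
    {"region": "A", "age_band": "60-69", "gender": "M", "topic": "trauma"},
]

k_all = smallest_group_size(records, ["region", "age_band", "gender"])
k_region = smallest_group_size(records, ["region"])
print(k_all, k_region)
```

In this toy data the third record is the only one with its combination of region, age band, and gender, so anyone who knows those three facts about a person can link them to the sensitive topic even though no name is stored. Free-text conversations and voice data make this kind of linkage harder still to rule out.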

The Role of Data Brokers and Surveillance Technologies

The integration of AI therapy with other surveillance technologies, such as location tracking, social media monitoring, and wearable sensor data, amplifies privacy concerns. This aggregation of data from various sources creates a comprehensive profile of individuals, blurring the lines between healthcare and surveillance.

- Potential for profiling and discrimination: Aggregated data could be used to create profiles that lead to discriminatory practices in healthcare access and treatment.

- Increased risk of unwarranted surveillance: Combining AI therapy data with other surveillance data increases the risk that individuals are monitored without any clinical justification.

- Erosion of trust in mental health services: The lack of transparency and potential for misuse can severely erode trust in mental health services, discouraging individuals from seeking necessary care.

- Potential for misuse of data by law enforcement or other entities: Data collected through AI therapy platforms could be misused by law enforcement or other entities for purposes unrelated to healthcare, violating patient privacy and autonomy.

Algorithmic Bias and Discrimination in AI Therapy

Bias in AI Algorithms and Datasets

AI algorithms are trained on data, and if that data reflects existing societal biases (e.g., racial, gender, socioeconomic), the algorithms can perpetuate and even amplify those biases. This can lead to unfair or inaccurate diagnoses and treatment recommendations, and to wider disparities in care.

- Bias in diagnosis and treatment recommendations: Biased algorithms may lead to misdiagnosis or inappropriate treatment recommendations for certain demographic groups.

- Disparate impact on marginalized groups: Marginalized communities may experience disproportionately negative impacts from biased AI systems, exacerbating existing health disparities.

- Lack of diversity in AI development teams: A lack of diversity among AI developers can contribute to the creation of biased algorithms, as developers may not fully understand the needs and experiences of diverse populations.

- Difficulties in detecting and mitigating algorithmic bias: Detecting and mitigating algorithmic bias is a complex challenge requiring rigorous testing, ongoing monitoring, and continuous improvement.
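
As one example of what "rigorous testing" can look like in practice, the sketch below compares how often a model produces a positive output (for instance, a recommendation for follow-up care) across demographic groups. A large gap between groups is a signal worth investigating, not proof of bias on its own. The function names, group labels, and predictions are hypothetical and invented for illustration, and this rate comparison is only one of several fairness checks a team might run.

```python
from collections import defaultdict


def positive_rate_by_group(predictions, groups):
    """Return the fraction of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for prediction, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(prediction)
    return {group: positives[group] / totals[group] for group in totals}


def max_rate_gap(rates):
    """Return the difference between the highest and lowest group rates."""
    return max(rates.values()) - min(rates.values())


# Made-up model outputs: 1 = follow-up care recommended, 0 = not recommended.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = positive_rate_by_group(predictions, groups)
gap = max_rate_gap(rates)
print(rates, gap)
```

Running a check like this on every model update, rather than once before launch, is one way to turn "ongoing monitoring" into a routine step.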

Impact on Access to Care and Equity

AI-powered mental health solutions have the potential to improve access to care, but only if implemented equitably. Unequal access to technology and resources could exacerbate existing inequalities.

- Digital divide and access to technology: The digital divide limits access to AI therapy for individuals in underserved communities lacking reliable internet access or appropriate devices.

- Cost and affordability of AI therapy: The cost of AI therapy platforms may be prohibitive for individuals with limited financial resources, creating disparities in access to care.

- Language barriers and cultural considerations: AI therapy platforms need to be culturally sensitive and linguistically accessible to ensure equitable access for diverse populations.

- Lack of accessibility for individuals with disabilities: AI therapy platforms should be designed to be accessible to individuals with disabilities, including those with visual, auditory, or cognitive impairments.

Informed Consent and Patient Autonomy in AI Therapy

Challenges in Obtaining Meaningful Informed Consent

The complexity of AI algorithms and data processing makes it difficult for patients to fully understand how their data is being used. This poses significant challenges in obtaining truly informed consent.

- Lack of transparency about the algorithm's decision-making process: Patients need to understand how AI algorithms make decisions about their diagnosis and treatment.

- Difficulty in understanding the risks and benefits of AI therapy: Patients need clear and concise information about the potential risks and benefits of using AI therapy.

- Potential for coercion or undue influence: Patients may feel pressured to use AI therapy, particularly if it is presented as the only option available.

- Challenges in obtaining consent from vulnerable populations: Obtaining informed consent from vulnerable populations, such as children or individuals with cognitive impairments, requires special care and consideration.

Maintaining Patient Autonomy and Control

The use of AI in therapy should not diminish patient autonomy. Human oversight is critical to ensuring that AI systems are used responsibly and ethically.

- Need for human oversight and intervention: Human clinicians should always be involved in the decision-making process, ensuring appropriate oversight and intervention when necessary.

- Importance of preserving the therapeutic relationship: AI should enhance, not replace, the human therapeutic relationship. Empathy and human connection remain essential components of effective mental healthcare.

- Patient's right to access and correct their data: Patients have a right to access and correct their data stored in AI therapy platforms.

- Maintaining the patient's right to refuse AI-based interventions: Patients should always retain the right to refuse AI-based interventions if they choose.

Conclusion

The integration of AI therapy within a surveillance state presents significant ethical concerns related to data privacy, algorithmic bias, and patient autonomy. Addressing these challenges requires a multi-pronged approach, including robust data protection regulations, rigorous testing for algorithmic bias, transparent and accessible AI systems, and a commitment to ethical AI development and deployment. We must prioritize the ethical implications of AI therapy to ensure its responsible use and protect the rights and well-being of individuals seeking mental health support. The future of AI therapy hinges on fostering trust, transparency, and accountability, balancing technological advancement against the preservation of individual rights in an evolving surveillance state. Let's work together to build a future where AI therapy enhances mental healthcare ethically and responsibly.

Featured Posts

-

Update Man Shot At Ohio City Apartment Suspect Still At Large

May 15, 2025

Update Man Shot At Ohio City Apartment Suspect Still At Large

May 15, 2025 -

Leo Carlssons Double Digit Effort In Vain As Ducks Drop Overtime Thriller To Stars

May 15, 2025

Leo Carlssons Double Digit Effort In Vain As Ducks Drop Overtime Thriller To Stars

May 15, 2025 -

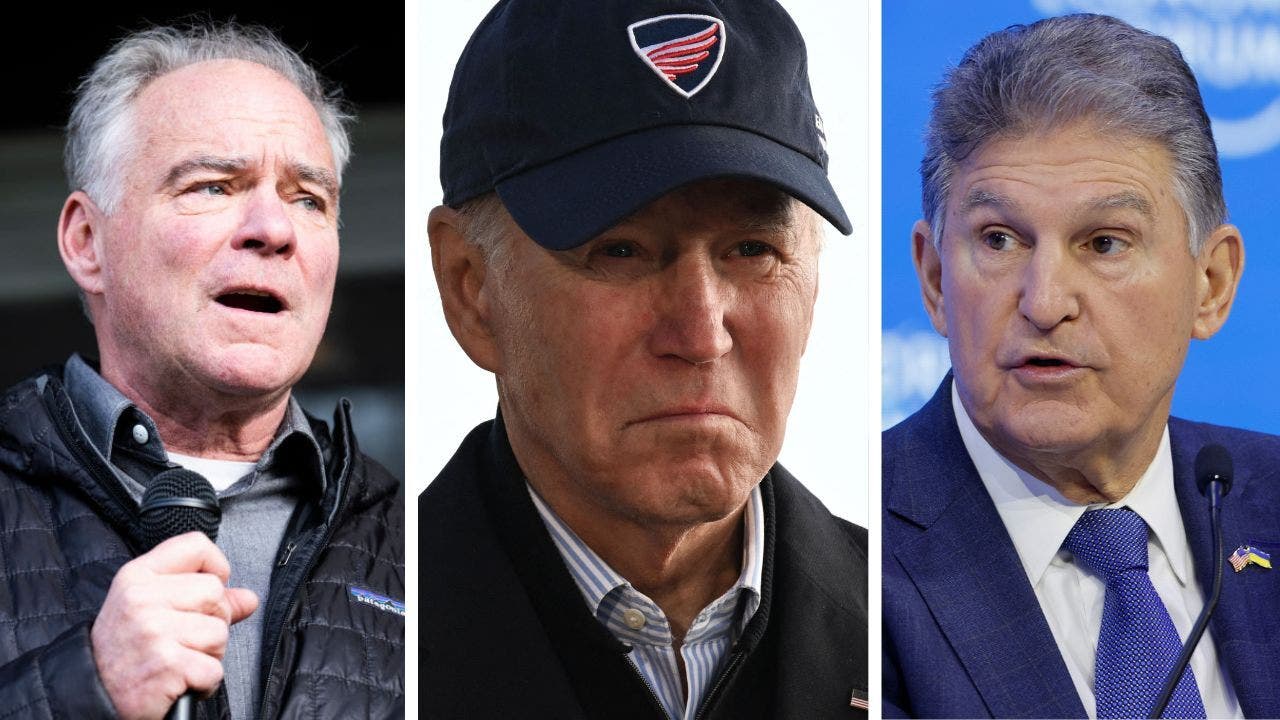

The Washington Examiners Coverage Of Joe Bidens Denials

May 15, 2025

The Washington Examiners Coverage Of Joe Bidens Denials

May 15, 2025 -

Padres Roster Update Jackson Merrills Return And Campusanos Optioning

May 15, 2025

Padres Roster Update Jackson Merrills Return And Campusanos Optioning

May 15, 2025 -

Kevin Durant Trade To Boston A Potential Nba Earthquake

May 15, 2025

Kevin Durant Trade To Boston A Potential Nba Earthquake

May 15, 2025