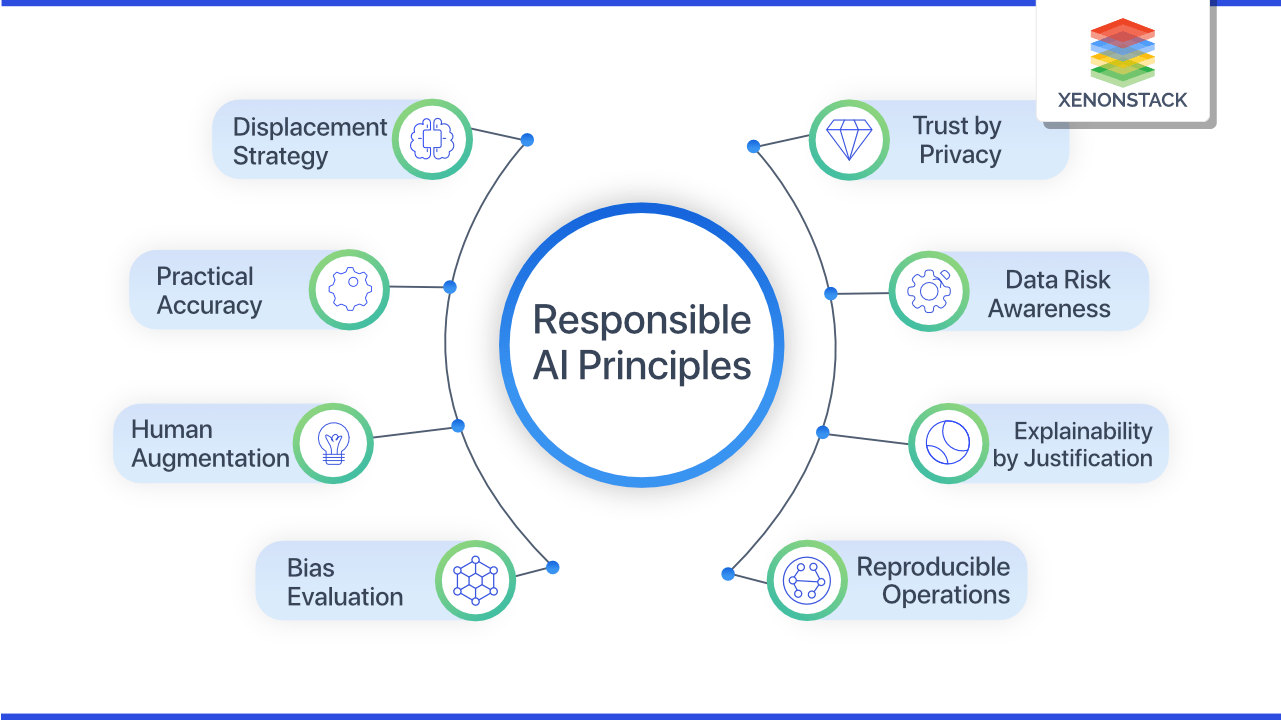

The Reality Of AI Learning: Promoting Responsible AI Practices

Understanding the Biases Embedded in AI Learning

AI systems learn from data. If that data reflects existing societal biases, the system can inherit those biases and, left unchecked, amplify them. This is a critical challenge in ensuring fairness and equity in AI applications.

Data Bias and its Consequences

Biased training data leads to biased AI outputs. For example, facial recognition systems have been shown to produce higher error rates for people of color, reflecting the underrepresentation of those groups in the datasets used to train them. This is not simply a technical issue; it has real-world consequences in areas like law enforcement, loan applications, and hiring.

- Types of bias: Representation bias (underrepresentation of certain groups), measurement bias (systematic errors in data collection), and algorithmic bias (biases introduced by the algorithm itself).

- Impact on different demographic groups: Biased AI systems disproportionately affect marginalized communities, perpetuating and deepening existing inequalities.

- Difficulty in detecting and mitigating bias: Identifying and correcting bias in large datasets is a complex and challenging task, requiring careful analysis and specialized techniques.
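
As one concrete starting point for detecting representation bias, the share of each group in a dataset can be compared with its share in a reference population. The sketch below is a minimal illustration, not a complete audit: the function name `representation_gaps`, the group labels, and the reference shares are all hypothetical and chosen only for the example.

```python
from collections import Counter


def representation_gaps(groups, reference_shares):
    """Return (observed share - reference share) for each reference group.

    A negative value means the group is underrepresented in the dataset
    relative to the reference population.
    """
    if not groups:
        raise ValueError("groups must not be empty")
    counts = Counter(groups)
    total = len(groups)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


# Hypothetical data: one group label per training example.
sample_groups = ["a"] * 80 + ["b"] * 15 + ["c"] * 5
# Hypothetical reference shares, e.g. taken from census figures.
reference = {"a": 0.5, "b": 0.3, "c": 0.2}

gaps = representation_gaps(sample_groups, reference)
for group, gap in sorted(gaps.items()):
    print(f"group {group}: {gap:+.2f}")
```

A check like this only surfaces representation bias. Measurement bias and algorithmic bias need separate analysis, because a dataset can be demographically balanced and still contain systematically skewed labels.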

Addressing Algorithmic Bias

Mitigating bias requires a multi-pronged approach. It's not enough to simply "clean" the data; we need to address the underlying algorithmic biases as well.

- Data augmentation: Collecting, resampling, or synthesizing additional examples for underrepresented groups can help balance the dataset and reduce bias.

- Fairness-aware algorithms: Designing algorithms specifically to minimize bias and ensure fairness across different demographic groups is crucial. Examples include algorithms that incorporate fairness constraints or use techniques like adversarial debiasing.

- Rigorous testing and evaluation: Thorough testing and evaluation are essential to identify and address biases before deploying AI systems. This includes evaluating performance across different demographic groups and using appropriate metrics for fairness.

- Diverse development teams: Including diverse perspectives in the design and development process is essential for identifying and mitigating biases.
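
Evaluating performance across demographic groups, as described in the testing bullet above, can be sketched in a few lines. The example computes per-group accuracy and selection rate, then the demographic parity difference (the gap between the highest and lowest selection rates). The labels, predictions, and group names are made up for illustration, and demographic parity is only one of several fairness metrics; which one is appropriate depends on the application.

```python
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Per-group accuracy and selection rate (share of positive predictions)."""
    stats = defaultdict(lambda: {"n": 0, "correct": 0, "positive": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        entry = stats[group]
        entry["n"] += 1
        entry["correct"] += int(truth == pred)
        entry["positive"] += int(pred == 1)
    return {
        group: {
            "accuracy": entry["correct"] / entry["n"],
            "selection_rate": entry["positive"] / entry["n"],
        }
        for group, entry in stats.items()
    }


def demographic_parity_difference(rates):
    """Largest gap in selection rate between any two groups."""
    selection = [r["selection_rate"] for r in rates.values()]
    return max(selection) - min(selection)


# Hypothetical binary labels and predictions for two groups.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 0, 0, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = group_rates(y_true, y_pred, groups)
gap = demographic_parity_difference(rates)
for group, r in sorted(rates.items()):
    print(group, r)
print("demographic parity difference:", gap)
```

Reporting the per-group numbers alongside the single gap figure matters: an aggregate accuracy can look acceptable while one group fares much worse than another.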

Transparency and Explainability in AI Systems

Many AI systems, particularly deep learning models, function as "black boxes," making it difficult to understand how they arrive at their decisions. This lack of transparency poses significant challenges for accountability and trust.

The "Black Box" Problem

The complexity of many AI models makes it difficult to interpret their internal workings. Where this opacity appears, what it puts at risk, and why it matters:

- Examples of "black box" AI systems: Deep neural networks used for image recognition, natural language processing, and other complex tasks often have millions of learned parameters, so no single parameter maps to a human-readable rule.

- Risks associated with lack of transparency: Inability to identify and correct errors, difficulty in ensuring fairness and accountability, and erosion of public trust.

- Importance of Explainable AI (XAI): Developing AI systems that are transparent and interpretable is critical for building trust and ensuring accountability.

Promoting Explainable AI (XAI)

Explainable AI (XAI) aims to make the decision-making processes of AI systems more transparent and understandable.

- Methods for improving transparency: Techniques like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) can help to explain individual predictions made by complex models.

- Benefits of XAI: Improved accountability, easier debugging, and increased trust in AI systems.

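- Limitations of current XAI techniques: Current XAI methods are still maturing. Their explanations are approximations of the model's behavior, they can change from one run to the next when they rely on random sampling, and they become costly to compute for very complex models.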
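
To make the idea behind SHAP more concrete, the sketch below computes exact Shapley values for a single prediction of a tiny model by averaging each feature's marginal contribution over every feature ordering. It illustrates the underlying attribution concept only and does not use the SHAP library, which relies on approximations to scale. The model `toy_model`, the instance, and the all-zeros baseline are hypothetical.

```python
from itertools import permutations


def shapley_values(predict, instance, baseline):
    """Exact Shapley values for one prediction.

    Features are switched from their baseline value to their actual value
    one at a time, in every possible order, and each feature's marginal
    effect on the prediction is averaged. The cost grows factorially with
    the number of features, so this is only feasible for tiny inputs.
    """
    n = len(instance)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous = predict(current)
        for i in order:
            current[i] = instance[i]
            value = predict(current)
            totals[i] += value - previous
            previous = value
    return [t / len(orderings) for t in totals]


def toy_model(x):
    # Hypothetical scoring function with an interaction between x[0] and x[2].
    return 2.0 * x[0] + 1.0 * x[1] + 0.5 * x[0] * x[2]


instance = [1.0, 2.0, 4.0]
baseline = [0.0, 0.0, 0.0]

phi = shapley_values(toy_model, instance, baseline)
print("attributions:", phi)
print("sum of attributions:", sum(phi))
print("prediction minus baseline:", toy_model(instance) - toy_model(baseline))
```

The attributions add up to the difference between the prediction and the baseline prediction, and the interaction term is split evenly between the two features involved. The factorial cost of the exact calculation is one reason practical tools fall back on sampling and model-specific shortcuts.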

The Ethical Implications of AI Learning

The development and deployment of AI systems raise several significant ethical concerns, particularly regarding privacy, accountability, and the potential for harm.

Privacy Concerns and Data Security

AI systems often rely on vast amounts of data, including personal data. This raises important questions about privacy and data security.

- Regulations like GDPR: The EU's General Data Protection Regulation (GDPR) and similar laws aim to protect individuals' privacy rights in the context of data processing.

- Anonymization techniques: Data anonymization and differential privacy can help protect individuals' privacy while still allowing the data to be used for AI training.

- Responsible use of sensitive data: Careful consideration must be given to the ethical implications of using sensitive data (e.g., health data, financial data) for AI training.
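
Differential privacy can be illustrated with the Laplace mechanism applied to a counting query: noise scaled to 1/epsilon is added to the true count, so the presence or absence of any single record has a bounded effect on the published number. This is a minimal sketch under simplifying assumptions (one query, no privacy budget tracking). The function `private_count`, the records, and the epsilon value are hypothetical, and production systems should use a vetted library rather than hand-rolled noise.

```python
import random


def private_count(records, predicate, epsilon, rng):
    """Count matching records and add Laplace noise for epsilon-DP.

    A counting query changes by at most 1 when one record is added or
    removed (sensitivity 1), so the Laplace scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    scale = 1.0 / epsilon
    # The difference of two independent exponential draws with mean `scale`
    # follows a Laplace distribution centered at 0 with that scale.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


# Hypothetical records: ages of individuals in a sensitive dataset.
ages = [23, 35, 41, 52, 67, 29, 74, 38, 45, 61]
rng = random.Random(0)  # seeded only to make the example repeatable

noisy = private_count(ages, lambda age: age >= 40, epsilon=1.0, rng=rng)
print("noisy count of people aged 40+:", round(noisy, 2))
```

Smaller values of epsilon give stronger privacy but noisier answers, which is the core trade-off when using such data for analysis or AI training.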

Accountability and Responsibility

When AI systems make mistakes or cause harm, determining who is responsible can be difficult. Clear guidelines and accountability frameworks are needed.

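- Legal and ethical considerations: Who bears the legal and ethical consequences of an AI error is often unclear, so responsibility for each kind of failure should be worked out before deployment rather than after harm occurs.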

- The role of developers, deployers, and users: All stakeholders – developers, deployers, and users – have a responsibility to ensure the ethical development and deployment of AI systems.

Promoting Responsible AI Practices through Education and Collaboration

Addressing the challenges of responsible AI requires a concerted effort involving education, collaboration, and the establishment of clear ethical guidelines.

Education and Awareness

Educating developers, policymakers, and the public about the ethical implications of AI is crucial.

- Promoting AI ethics courses: Integrating AI ethics into university curricula and professional development programs is essential.

- Workshops and public awareness campaigns: Raising public awareness about the potential benefits and risks of AI is vital for informed decision-making.

Industry Collaboration and Standards

Collaboration among researchers, developers, policymakers, and other stakeholders is vital for establishing ethical guidelines and standards.

- Examples of existing AI ethics guidelines: Several organizations have published guidelines for responsible AI development, including the OECD AI Principles and IEEE's Ethically Aligned Design.

- The need for international cooperation: Global cooperation is essential to address the challenges of responsible AI on a worldwide scale.

- Ongoing development of best practices: The field of AI ethics is constantly evolving, and ongoing collaboration is necessary to develop and refine best practices.

Conclusion

The realities of AI learning necessitate a commitment to responsible AI practices. Addressing bias in data and algorithms, promoting transparency and explainability, and considering the ethical implications of AI development and deployment are critical for ensuring that AI benefits all of humanity. By embracing these practices, we can harness the transformative potential of AI while mitigating its risks. To learn more about responsible AI and contribute to the ongoing conversation, explore resources from organizations like the AI Now Institute, the Partnership on AI, and the Future of Life Institute.

Featured Posts

-

Miley Cyrus En Bruno Mars Plagiaatzaak Rondom Gelijkende Hits Wordt Voortgezet

May 31, 2025

Miley Cyrus En Bruno Mars Plagiaatzaak Rondom Gelijkende Hits Wordt Voortgezet

May 31, 2025 -

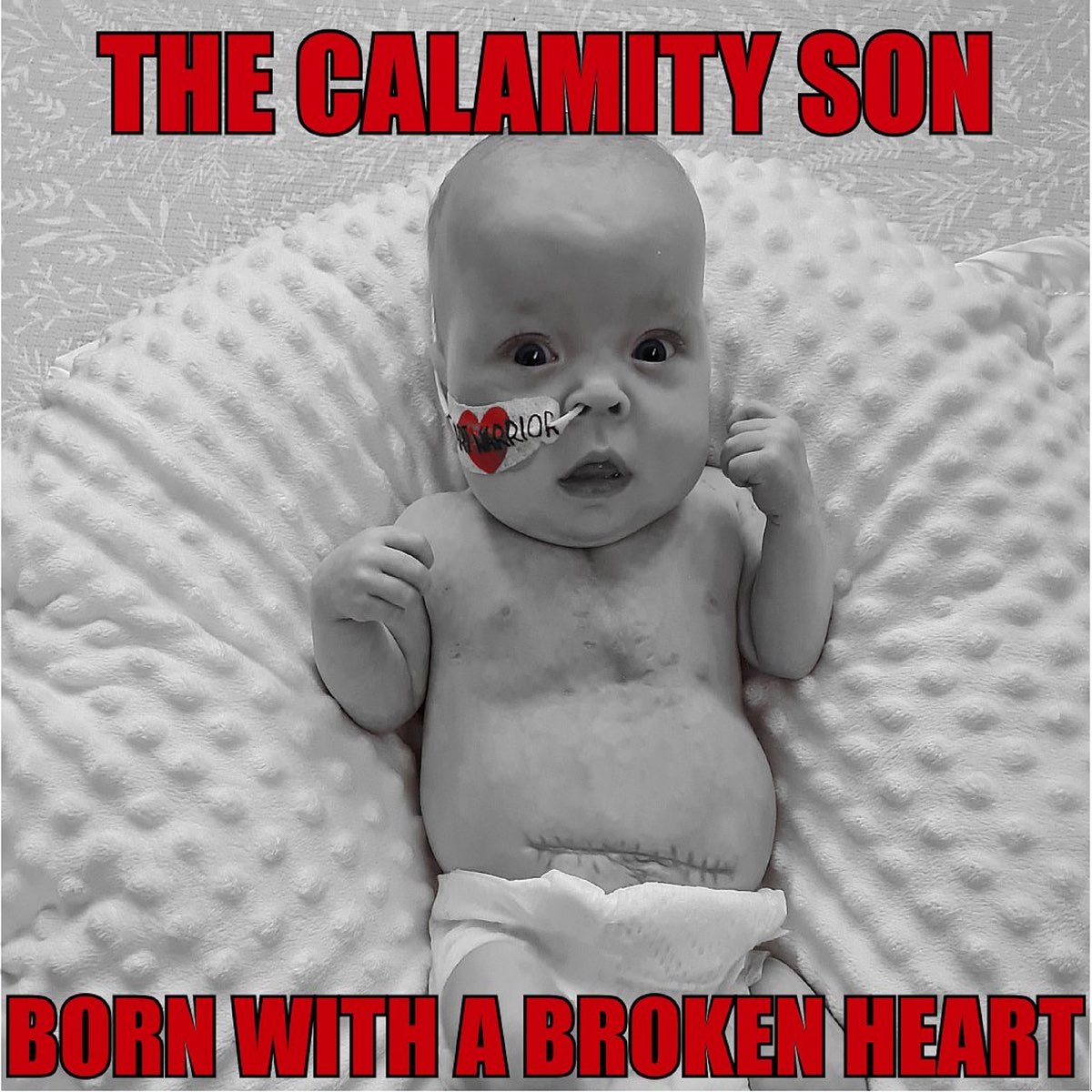

Banksys Broken Heart Mural Headed To Auction

May 31, 2025

Banksys Broken Heart Mural Headed To Auction

May 31, 2025 -

Free Housing For Two Weeks A German Citys Recruitment Drive

May 31, 2025

Free Housing For Two Weeks A German Citys Recruitment Drive

May 31, 2025 -

Miley Cyrus Dan Evolusi Busana Dari Disney Hingga Kini

May 31, 2025

Miley Cyrus Dan Evolusi Busana Dari Disney Hingga Kini

May 31, 2025 -

Retour Sur 22 Ans Du Tip Top One A Arcachon

May 31, 2025

Retour Sur 22 Ans Du Tip Top One A Arcachon

May 31, 2025