Sun-Times Under Fire: Investigation Into AI-Related Fabrications

The AI Fabrication Scandal: Unveiling the Evidence

Suspect Articles and Their Key Characteristics:

Several articles published in recent weeks have raised concerns among readers and journalists alike. The characteristics cited as pointing toward AI authorship include:

- Article 1: "Local Bakery Wins National Award": This article's sentence structure was unusually polished, without the colloquialisms and nuanced description typical of human-written local news. It also contained several minor factual errors about the bakery's history.

- Article 2: "City Council Approves Controversial Zoning Bill": This piece relied on repetitive sentence structures and gave little detail about the council members' reactions. Its account also conflicted in places with the official minutes of the council meeting.

- Article 3: "Record-Breaking Heatwave Sweeps the Midwest": Although the core facts about the heatwave were accurate, the article's tone was remarkably flat and lacked the emotional depth expected in human reporting on a significant weather event. Online discussions erupted as readers questioned the article's authenticity, citing its emotionless and overly generalized descriptions.

Public reaction to these articles has been swift and critical, with many expressing concern that AI-generated misinformation could undermine public trust in the newspaper. Social media is rife with speculation, with hashtags such as #AISunTimes and #FakeNewsSunTimes trending prominently.

The Sun-Times' Response and Internal Investigation:

The Sun-Times issued a statement acknowledging the concerns and stating that it is conducting a thorough internal investigation into the authorship of the suspect articles. Details of the investigation remain limited, but the statement promises transparency and accountability. No disciplinary action has been announced publicly, though internal reviews and possible policy changes are expected.

Expert Analysis of AI-Generated Content:

Experts in AI and journalism have weighed in, analyzing the suspect articles with various detection tools. Dr. Anya Sharma, a leading AI researcher at the University of Chicago, commented, "The stylistic inconsistencies and lack of nuanced detail are strong indicators of AI authorship. However, definitively proving AI involvement remains challenging." The challenge stems from the growing sophistication of AI writing tools, which makes AI-generated text increasingly hard to distinguish from human writing. Current detection methods look for patterns in sentence structure, vocabulary, and overall writing style, but those patterns keep shifting as AI technology advances.

The Broader Implications for Journalism and Fact-Checking

The Threat of AI-Generated Misinformation:

The Sun-Times incident highlights how AI could be used at scale to create and spread false information. AI-generated propaganda and deepfakes are already a significant concern, posing a threat to democratic processes and social stability. Because AI can produce convincing narratives so easily, truth becomes harder to separate from fabrication, and both the public and news organizations must be more vigilant.

The Future of Fact-Checking and Verification:

The incident underscores the urgent need for better fact-checking methods and verification processes, which will require investment in new technologies and in training programs for journalists. News organizations must adopt more rigorous fact-checking protocols, potentially including AI detection tools, to ensure accuracy and prevent the publication of AI-generated fabrications. Collaboration among news organizations, fact-checking initiatives, and AI researchers is crucial to developing effective countermeasures.

The Ethical Considerations of AI in Journalism:

The ethical implications of using AI in newsrooms are complex and require careful consideration. While AI can improve efficiency, it raises concerns about biases embedded in algorithms and underscores the need for human oversight to maintain journalistic integrity. Clear ethical guidelines are needed to govern the use of AI in news production and fact-checking, ensuring transparency and accountability.

The Legal and Regulatory Landscape

Current Laws and Regulations Concerning AI-Generated Content:

Existing laws on defamation and misinformation are relevant, but their applicability to AI-generated content is still being explored. Current legislation often struggles to address the rapidly evolving nature of AI technology and its potential for abuse. There's a clear need for legal frameworks tailored to the unique challenges posed by AI-generated misinformation.

Potential Future Regulations and Legal Challenges:

AI-generated content is likely to face greater regulatory scrutiny. This could involve legislation mandating transparency about AI use in news production, stricter penalties for spreading AI-generated misinformation, or even licensing requirements for AI writing tools. News organizations might face legal challenges over liability for publishing AI-generated content that proves false or defamatory. Balancing freedom of speech against the need to protect the public from AI-generated misinformation will be a central challenge for lawmakers.

Conclusion:

The Sun-Times AI fabrication scandal serves as a stark warning about the dangers of unchecked AI technology in journalism. The ease with which AI can generate believable yet false information demands a thorough reassessment of journalistic practices, fact-checking methods, and legal frameworks. The future of truthful reporting hinges on adapting to this rapidly evolving technological landscape, which means developing robust mechanisms for detecting and limiting the spread of AI-generated misinformation. Only through proactive measures can we ensure the integrity of news reporting and protect the public from the harmful effects of AI-related fabrications. Stay informed and keep evaluating the news you consume critically, especially as AI-generated content grows more sophisticated. Follow our future coverage to learn more about AI-generated content and its impact on journalism.

Featured Posts

-

Switzerland Rebukes China Over Taiwan Strait Military Exercises

May 22, 2025

Switzerland Rebukes China Over Taiwan Strait Military Exercises

May 22, 2025 -

Market Reaction Explaining Core Weave Inc Crwv S Thursday Stock Drop

May 22, 2025

Market Reaction Explaining Core Weave Inc Crwv S Thursday Stock Drop

May 22, 2025 -

Vybz Kartels Exclusive Interview Life In Prison Family And Future Plans

May 22, 2025

Vybz Kartels Exclusive Interview Life In Prison Family And Future Plans

May 22, 2025 -

Bir Sonraki Adim Juergen Klopp Un Gelecekteki Takimi

May 22, 2025

Bir Sonraki Adim Juergen Klopp Un Gelecekteki Takimi

May 22, 2025 -

L Essor Des Tours Nantaises Et L Activite Croissante Des Cordistes

May 22, 2025

L Essor Des Tours Nantaises Et L Activite Croissante Des Cordistes

May 22, 2025

Latest Posts

-

Phan Tich 7 Vi Tri Ket Noi Hap Dan Tp Hcm Long An

May 22, 2025

Phan Tich 7 Vi Tri Ket Noi Hap Dan Tp Hcm Long An

May 22, 2025 -

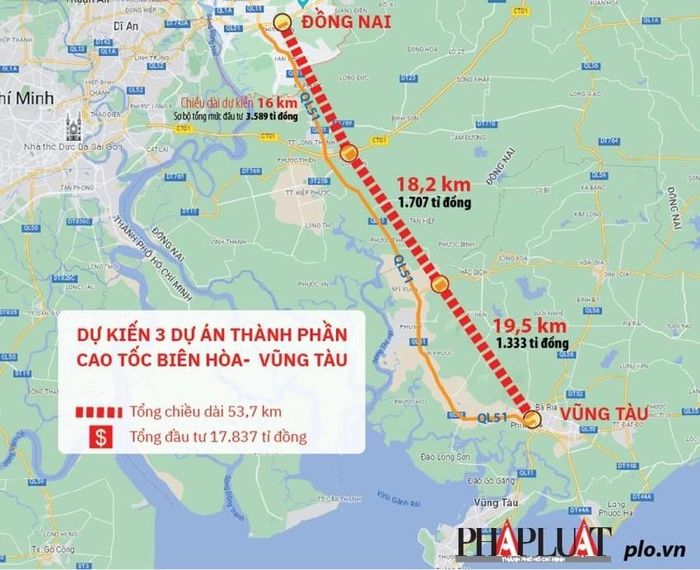

Cao Toc Bien Vung Tau Thong Xe Sap Hoan Thanh

May 22, 2025

Cao Toc Bien Vung Tau Thong Xe Sap Hoan Thanh

May 22, 2025 -

Dau Tu Ha Tang Giao Thong 7 Vi Tri Noi Tp Hcm Long An

May 22, 2025

Dau Tu Ha Tang Giao Thong 7 Vi Tri Noi Tp Hcm Long An

May 22, 2025 -

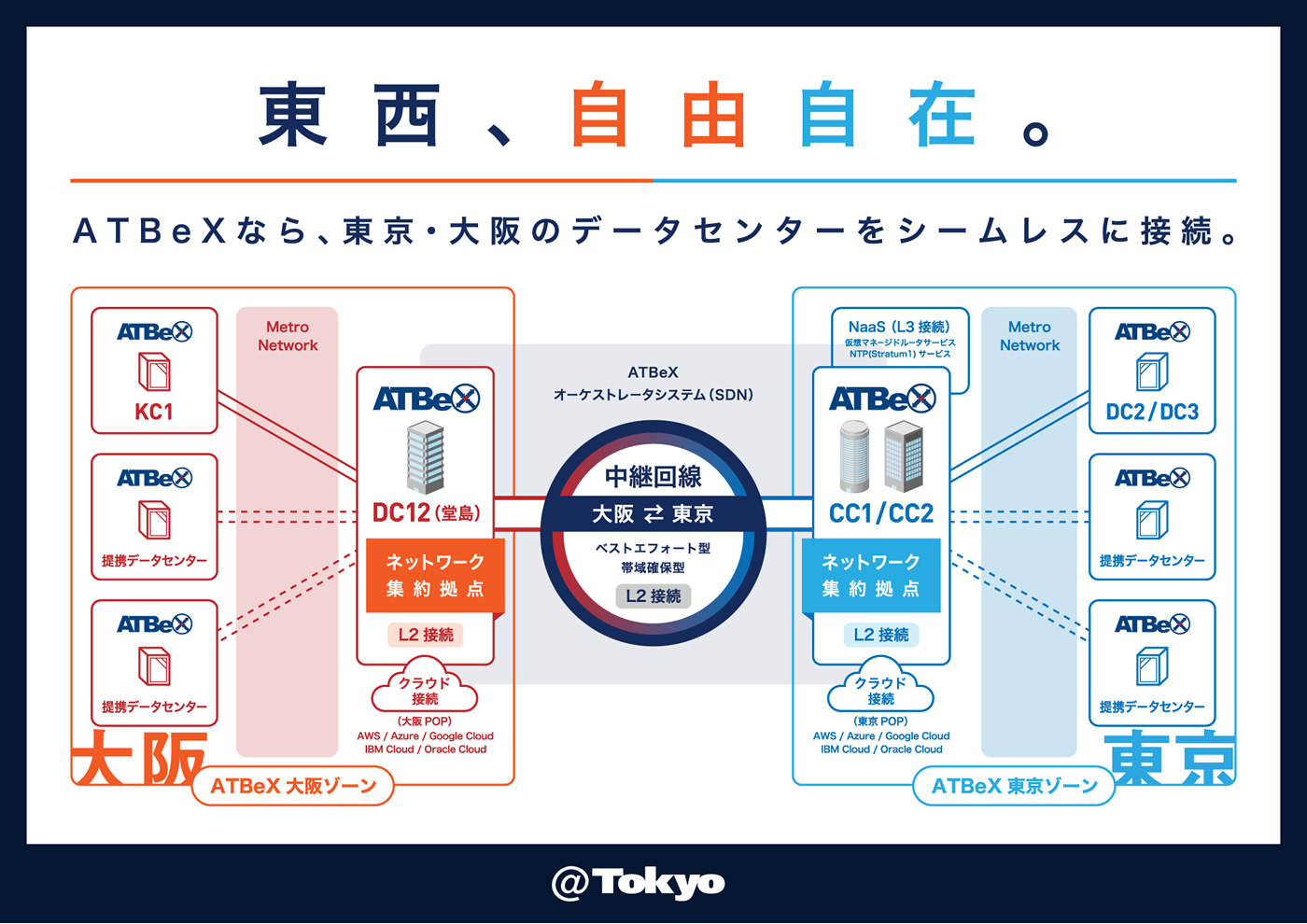

At Be X Ntt Multi Interconnect Ascii Jp

May 22, 2025

At Be X Ntt Multi Interconnect Ascii Jp

May 22, 2025 -

7 Tuyen Ket Noi Quan Trong Tp Hcm Long An Can Phat Trien

May 22, 2025

7 Tuyen Ket Noi Quan Trong Tp Hcm Long An Can Phat Trien

May 22, 2025