Responsible AI: Acknowledging The Limits Of Current AI Learning Capabilities

The Data Dependency of AI: A Major Limitation

An AI system is only as good as the data it is trained on, and this dependency is also one of its most significant limitations. Poor-quality or biased data produces flawed, unreliable systems, which is why responsible data handling is critical in AI development.

Bias in Training Data

Biased datasets are a major source of problems in AI. If the data used to train a model reflects existing societal biases, the model is likely to reproduce those biases and can even amplify them.

- Examples of biased datasets: Datasets that underrepresent particular racial, gender, or socioeconomic groups can produce skewed outputs. For instance, a facial recognition system trained primarily on images of white faces may perform poorly on individuals with darker skin tones. Similarly, a loan-approval algorithm trained on historical data that reflects discriminatory lending practices may unfairly deny loans to certain demographic groups.

- Consequences of biased AI: The consequences can be severe, including unfair loan decisions, discriminatory hiring practices, and biased outcomes in the criminal justice system. This is why addressing bias must be a priority throughout AI development.

- Mitigating Bias: Addressing bias requires careful attention to data diversity and the implementation of bias mitigation techniques. This includes actively seeking diverse datasets, employing techniques like data augmentation to balance representation, and using algorithmic fairness constraints during model training.
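As a rough illustration, the Python sketch below shows two of these steps on toy data: measuring whether outcomes differ across groups (the gap in positive-outcome rates, often called the demographic parity difference) and rebalancing representation by randomly oversampling under-represented groups. Random oversampling is a simple resampling technique used here in place of true data augmentation, and the group names and numbers are hypothetical. Note that oversampling only evens out how often each group appears; on average it does not change the outcome rates within a group, so bias encoded in historical labels remains.

```python
import random
from collections import Counter, defaultdict


def positive_rate_by_group(records):
    """Return the share of positive outcomes (e.g. loan approvals) per group."""
    totals, positives = Counter(), Counter()
    for group, outcome in records:
        totals[group] += 1
        positives[group] += outcome
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(records):
    """Largest difference in positive-outcome rates between groups (0 = parity)."""
    rates = positive_rate_by_group(records)
    return max(rates.values()) - min(rates.values())


def oversample_to_balance(records, seed=0):
    """Duplicate randomly chosen examples from smaller groups until every
    group has as many examples as the largest one (illustrative helper)."""
    rng = random.Random(seed)
    by_group = defaultdict(list)
    for record in records:
        by_group[record[0]].append(record)
    target = max(len(rows) for rows in by_group.values())
    balanced = []
    for rows in by_group.values():
        balanced.extend(rows)
        balanced.extend(rng.choice(rows) for _ in range(target - len(rows)))
    return balanced


# Hypothetical historical data: (group, approved) pairs.
data = [("A", 1)] * 80 + [("A", 0)] * 20 + [("B", 1)] * 5 + [("B", 0)] * 15
print(positive_rate_by_group(data))            # {'A': 0.8, 'B': 0.25}
print(round(demographic_parity_gap(data), 2))  # 0.55
balanced = oversample_to_balance(data)
print(dict(Counter(group for group, _ in balanced)))  # {'A': 100, 'B': 100}
```

Measuring the gap before and after any mitigation step is what makes the effect of that step visible; balancing group sizes alone would leave the 0.55 gap in this toy data essentially untouched.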

Data Scarcity and Generalization Challenges

Another significant limitation stems from the scarcity of data in many domains. Training robust and reliable AI models typically requires large amounts of data, a resource that is often unavailable, particularly in specialized areas.

- Examples of domains with limited data: Research on rare diseases and the development of AI systems for low-resource languages both face limited data availability, which makes it hard to train effective models.

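- Addressing Data Scarcity: Techniques such as data augmentation (creating modified or synthetic examples to supplement real data) and transfer learning (reusing knowledge a model learned in one domain to help it learn in another) can help address data scarcity. However, these methods are not always sufficient, because synthetic or borrowed data cannot fully substitute for real examples from the target domain.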

- Impact of Data Scarcity: Limited data leads to AI systems that struggle to generalize to new, unseen data, resulting in unreliable and inconsistent performance. This directly impacts the robustness and reliability of AI systems, raising significant concerns for responsible AI deployment.
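To make the augmentation idea concrete, here is a minimal Python sketch of one simple form of it for numeric features: each real example gets a few synthetic copies with small random noise added, while its label is kept. The function name, noise level, and data are illustrative assumptions, and the approach only makes sense when such small perturbations genuinely leave the label unchanged. It also hints at why augmentation is not always sufficient: the synthetic variants stay close to the original examples, so they cannot supply information about situations the real data never covered.

```python
import random


def augment_with_jitter(samples, copies=3, noise_std=0.05, seed=0):
    """Return the real samples plus `copies` noisy variants of each.

    Each sample is (features, label). Every synthetic variant adds Gaussian
    noise to each feature and reuses the original label unchanged.
    """
    rng = random.Random(seed)
    augmented = list(samples)  # keep all of the real data first
    for features, label in samples:
        for _ in range(copies):
            noisy = [x + rng.gauss(0.0, noise_std) for x in features]
            augmented.append((noisy, label))
    return augmented


# Hypothetical scarce dataset: two labelled measurements.
real = [([1.0, 2.0], "positive"), ([0.5, 0.1], "negative")]
expanded = augment_with_jitter(real, copies=3)
print(len(expanded))  # 8: the 2 real samples plus 3 synthetic copies of each
```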

The Lack of Explainability and Transparency in AI

Many sophisticated AI models, particularly deep neural networks, operate as "black boxes." Their internal workings are opaque, making it difficult to understand how they arrive at their decisions. This lack of transparency poses significant challenges for responsible AI development.

The "Black Box" Problem

The complexity of deep learning models makes it hard to interpret their internal decision-making processes. This opacity hinders our ability to understand why an AI system made a particular decision, making it challenging to identify and correct errors or biases.

- Examples of opaque AI models: Deep neural networks, which can contain millions or even billions of learned parameters, are notoriously difficult to interpret, so it is hard to pinpoint which factors drive their predictions.

- Challenges in Interpreting Model Outputs: The lack of transparency makes it difficult to debug AI systems and ensure their reliability and fairness.

The Need for Interpretable AI for Responsible Decision-Making

The use of opaque AI systems in high-stakes applications raises serious ethical concerns. Without understanding how a system arrives at its conclusions, it is very difficult to verify that its decisions are fair, accurate, and ethically sound.

- Examples of high-stakes applications: AI systems used in medical diagnosis, criminal justice, and autonomous driving require a high degree of transparency and explainability.

- Risks of relying on unexplainable AI: Blindly trusting the output of an opaque AI system in these contexts could have devastating consequences.

- Explainable AI (XAI): The field of explainable AI is actively developing methods to make AI models more transparent and interpretable. However, these methods still have limitations and are not always applicable to all types of AI models.
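One widely used model-agnostic XAI technique is permutation importance: shuffle one input feature at a time and measure how much the model's accuracy drops. The Python sketch below applies it to a hypothetical "black box" (a hard-coded rule standing in for a trained model) on made-up data; the helper names are illustrative. It also reflects the limitations mentioned above: the scores describe which inputs a model relies on overall, not why it made a particular decision, and they can be misleading when features are strongly correlated.

```python
import random


def accuracy(model, X, y):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Average drop in accuracy when each feature column is shuffled.

    A large drop means the model depends heavily on that feature; a score
    near zero suggests the model barely uses it.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the labels
            X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical opaque model: feature 0 is income, feature 1 is ignored.
def black_box(row):
    return int(row[0] > 50)


X = [[30, 1], [70, 0], [45, 1], [90, 0], [20, 1], [60, 0]]
y = [black_box(row) for row in X]
print(permutation_importance(black_box, X, y))  # feature 1 scores 0.0
```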

The Current Limitations of AI in Handling Complex Reasoning and Common Sense

Current AI systems often struggle with tasks that require nuanced reasoning, contextual understanding, and common sense, abilities that humans typically exercise with little effort. This limitation calls for caution when deploying AI in complex scenarios.

The Challenge of Reasoning and Contextual Understanding

AI excels at pattern recognition and specific tasks but frequently falters when presented with situations requiring complex reasoning or contextual understanding.

- Examples of AI failures in common-sense reasoning: AI systems may struggle to understand sarcasm, metaphors, or nuanced language. They can also fail to incorporate common-sense knowledge into their decision-making.

- Challenges in handling ambiguous situations: AI systems often require clearly defined inputs and struggle with ambiguity or uncertainty, which are common features of real-world situations.

- Narrow vs. General AI: Current AI systems are largely "narrow" AI, excelling in specific, well-defined tasks. Developing "general" AI, with human-like reasoning and common sense, remains a significant long-term challenge.

The Importance of Human Oversight and Collaboration

Given the limitations of current AI, human oversight and collaboration are crucial. Human intervention can help mitigate the risks associated with AI and ensure responsible development and deployment.

- Examples of effective human-in-the-loop systems: Systems where human experts review and validate AI-generated recommendations before final decisions are made can significantly enhance safety and reliability.

- Importance of human verification and validation: Human oversight is crucial for identifying and correcting errors, biases, and unintended consequences in AI systems.

- Hybrid AI systems: Combining human expertise with AI capabilities creates hybrid systems that draw on the strengths of both and can produce more robust and reliable outcomes.
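A common way to build such a human-in-the-loop workflow is to route predictions by confidence: the system proceeds on cases the model is confident about and flags the rest for expert review. The Python sketch below is a minimal illustration; the 0.9 threshold, the case data, and the `route` helper are hypothetical. In practice the threshold has to be validated, because model confidence scores are not always well calibrated, and in high-stakes settings every recommendation may need human sign-off regardless of confidence.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    case_id: str
    label: str
    confidence: float
    needs_review: bool


def route(predictions, threshold=0.9):
    """Flag any prediction whose confidence is below `threshold` for human review."""
    return [
        Decision(case_id, label, confidence, needs_review=confidence < threshold)
        for case_id, label, confidence in predictions
    ]


# Hypothetical model outputs: (case id, predicted label, confidence score).
predictions = [
    ("case-1", "low-risk", 0.97),
    ("case-2", "high-risk", 0.62),
    ("case-3", "low-risk", 0.88),
]
for decision in route(predictions):
    action = "human review" if decision.needs_review else "proceed"
    print(decision.case_id, "->", action)
```

Here case-1 proceeds while case-2 and case-3 are sent to a reviewer; lowering the threshold shifts more work to the model, raising it shifts more to people.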

Conclusion

This article has highlighted three key limitations of current AI learning capabilities: data dependency, lack of explainability, and challenges in complex reasoning. These limitations underscore the critical need for responsible AI development. By acknowledging them and addressing them proactively through ethical data practices, the development of explainable AI, and the integration of human oversight, we can harness the transformative power of AI while mitigating its risks, and help shape a future where AI is used ethically and safely for the benefit of all.

Featured Posts

-

Apples Operating System Renaming What We Know

May 31, 2025

Apples Operating System Renaming What We Know

May 31, 2025 -

Streaming The Giro D Italia 2025 Free And Easy Methods

May 31, 2025

Streaming The Giro D Italia 2025 Free And Easy Methods

May 31, 2025 -

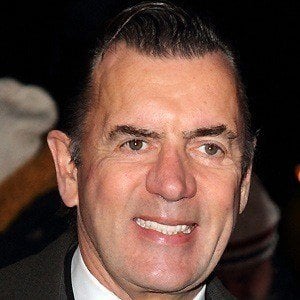

Duncan Bannatynes Support For Moroccan Childrens Charity

May 31, 2025

Duncan Bannatynes Support For Moroccan Childrens Charity

May 31, 2025 -

Emerging Covid 19 Variant Whos Analysis And Global Implications

May 31, 2025

Emerging Covid 19 Variant Whos Analysis And Global Implications

May 31, 2025 -

Understanding Dangerous Climate Whiplash And Its Impact On Urban Centers

May 31, 2025

Understanding Dangerous Climate Whiplash And Its Impact On Urban Centers

May 31, 2025