OpenAI And ChatGPT: An FTC Investigation


Concerns Regarding Data Privacy and ChatGPT

ChatGPT's ability to generate human-quality text comes from its vast training data, and that data raises significant privacy concerns. The model is trained on enormous quantities of text and code from the internet, which can include sensitive personal information. This raises questions about whether OpenAI's data practices comply with laws such as the U.S. Children's Online Privacy Protection Act (COPPA) and the EU's General Data Protection Regulation (GDPR).

- Data collection practices during training and usage: OpenAI collects data both when training ChatGPT and when people use it. This data may include personally identifiable information (PII), either scraped inadvertently from the internet for training or supplied directly by users in conversations.

- Data security measures employed by OpenAI: OpenAI says it applies robust security measures to protect user data. However, the sheer volume of data and the complexity of AI systems make complete data security difficult to achieve.

- Transparency regarding data usage and user rights: It remains unclear how well users understand how their data is used and what rights they have over it. Greater transparency is crucial for building trust and ensuring compliance with data privacy regulations.

- Potential risks of unauthorized data access or misuse: Data breaches and misuse of collected data are a major concern. Malicious actors could exploit vulnerabilities in OpenAI's systems to access and misuse sensitive information.

Algorithmic Bias and Fairness in ChatGPT

ChatGPT's responses are shaped by the data it was trained on, and this data may reflect existing societal biases. This can lead to the model generating outputs that are sexist, racist, or otherwise discriminatory. This algorithmic bias poses significant ethical concerns and raises questions about fairness and equity in AI systems.

- Examples of identified biases in ChatGPT's outputs: Various studies have documented cases in which ChatGPT's responses reflect biases present in its training data. These biases take different forms, such as stereotypical portrayals of certain groups or uneven treatment of particular topics.

- OpenAI's efforts to mitigate bias and promote fairness: OpenAI has acknowledged the problem and has worked to mitigate it, including by refining training data and developing techniques to detect and correct biased outputs. However, eliminating bias completely remains a significant challenge.

- The challenge of detecting and correcting algorithmic bias: Identifying and correcting bias in large language models is complex and computationally expensive, and the subtle, often implicit nature of bias makes it hard to detect and address.

- The broader societal impact of biased AI systems: Biased AI systems can perpetuate and amplify existing societal inequalities, producing discriminatory outcomes in domains such as hiring, loan applications, and even criminal justice.

Misinformation and the Spread of False Information via ChatGPT

ChatGPT's ability to generate human-quality text can be misused to create and spread misinformation. The model can convincingly generate fake news articles, propaganda, or other forms of misleading content, posing a serious threat to public trust and information integrity.

- Examples of ChatGPT generating false information: There are documented cases of ChatGPT producing factually incorrect information, sometimes stated with apparent confidence. This highlights the risk that the model could be used to create and spread misinformation.

- OpenAI's strategies for detecting and preventing the generation of misinformation: OpenAI is working on methods to detect and prevent the generation of false information, including fact-checking mechanisms and improved training data. These strategies are still under development and face significant challenges.

- The limitations of current fact-checking methods for AI-generated content: Traditional fact-checking methods often cannot keep pace with sophisticated, rapidly evolving AI-generated misinformation, so new techniques are needed to combat the problem.

- The broader implications for public trust and information integrity: The proliferation of AI-generated misinformation threatens public trust and the integrity of information ecosystems. Addressing it requires robust safeguards and ethical guidelines for how AI systems are developed and deployed.

The FTC's Investigative Powers and Potential Outcomes

The FTC has broad authority, under Section 5 of the FTC Act, to investigate unfair or deceptive business practices. Its investigation into OpenAI and ChatGPT could lead to several outcomes, including substantial fines, mandated changes to OpenAI's data practices, and new rules governing how AI systems are developed and deployed.

- Laws potentially violated: Depending on what its investigation finds, the FTC could allege violations of various consumer protection and data privacy laws.

- Possible remedies the FTC could impose: Remedies could include financial penalties, cease-and-desist orders, and requirements for greater transparency and stronger data security.

- The precedent this investigation could set for future AI regulation: The outcome of the investigation is likely to have significant implications for how AI is regulated, and it could set a precedent for how other regulatory bodies approach the oversight of AI systems.

Conclusion: The Future of OpenAI and ChatGPT Under Scrutiny

The FTC's investigation into OpenAI and ChatGPT highlights the need for responsible AI development. Concerns about data privacy, algorithmic bias, and the spread of misinformation are not merely technical issues; they have significant societal implications. The investigation's outcome will shape OpenAI's future, influence how future AI systems are built, and could set a precedent for AI regulation worldwide. Stay informed about the investigation as it develops, and take part in the wider discussion about AI regulation and responsible AI development. The future of AI depends on it.

Featured Posts

-

Laura Kenny Announces Birth Of Baby Girl After Fertility Struggles

May 07, 2025

Laura Kenny Announces Birth Of Baby Girl After Fertility Struggles

May 07, 2025 -

E Bay And Section 230 Legal Implications Of Selling Banned Chemicals

May 07, 2025

E Bay And Section 230 Legal Implications Of Selling Banned Chemicals

May 07, 2025 -

Celebrity Special Winning Strategies On Who Wants To Be A Millionaire

May 07, 2025

Celebrity Special Winning Strategies On Who Wants To Be A Millionaire

May 07, 2025 -

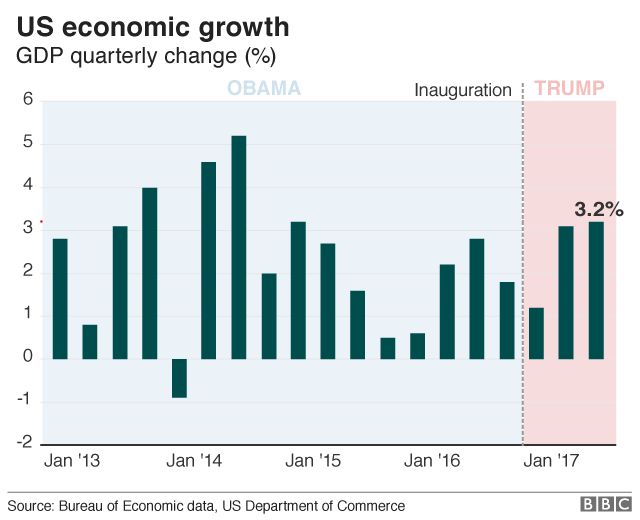

Trumps Impact Did His Presidency Revitalize The Us Film Industry

May 07, 2025

Trumps Impact Did His Presidency Revitalize The Us Film Industry

May 07, 2025 -

Paws And Love Tom Hollands Sweet Euphoria Set Visit To Zendaya

May 07, 2025

Paws And Love Tom Hollands Sweet Euphoria Set Visit To Zendaya

May 07, 2025

Latest Posts

-

The White Lotus Season 3 Identifying The Voice Actor For Kenny

May 07, 2025

The White Lotus Season 3 Identifying The Voice Actor For Kenny

May 07, 2025 -

The White Lotus Season 3 Unmasking The Voice Of Kenny

May 07, 2025

The White Lotus Season 3 Unmasking The Voice Of Kenny

May 07, 2025 -

White Lotus Latest Episode Features Surprise Oscar Winner

May 07, 2025

White Lotus Latest Episode Features Surprise Oscar Winner

May 07, 2025 -

Oscar Winner Makes White Lotus Appearance A Deeper Look

May 07, 2025

Oscar Winner Makes White Lotus Appearance A Deeper Look

May 07, 2025 -

Ke Huy Quans The White Lotus Cameo A Hidden Gem For Fans

May 07, 2025

Ke Huy Quans The White Lotus Cameo A Hidden Gem For Fans

May 07, 2025