Improving Apple's LLM Siri: Key Challenges And Solutions

Table of Contents

Data Scarcity and Bias in Siri's Training Data

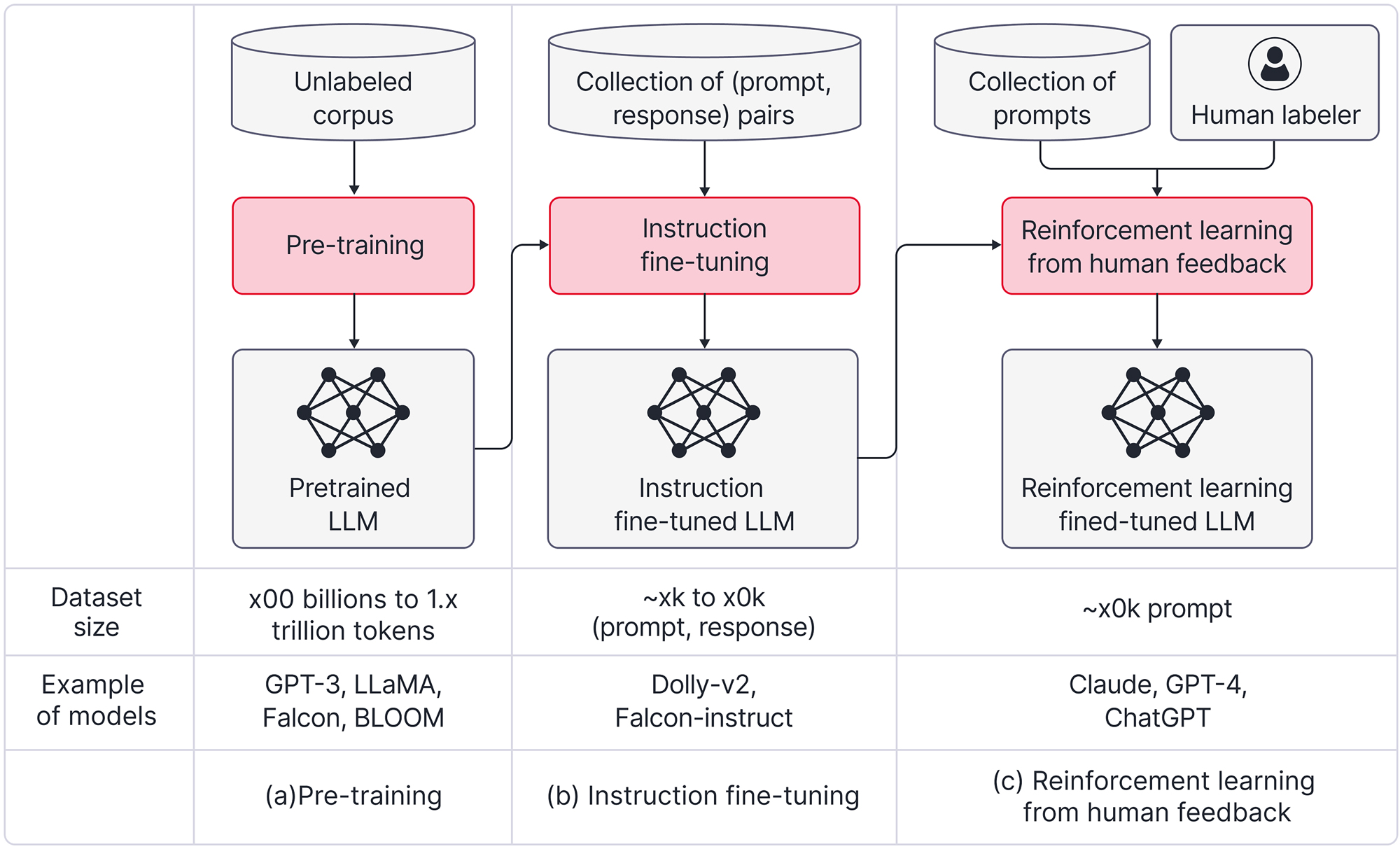

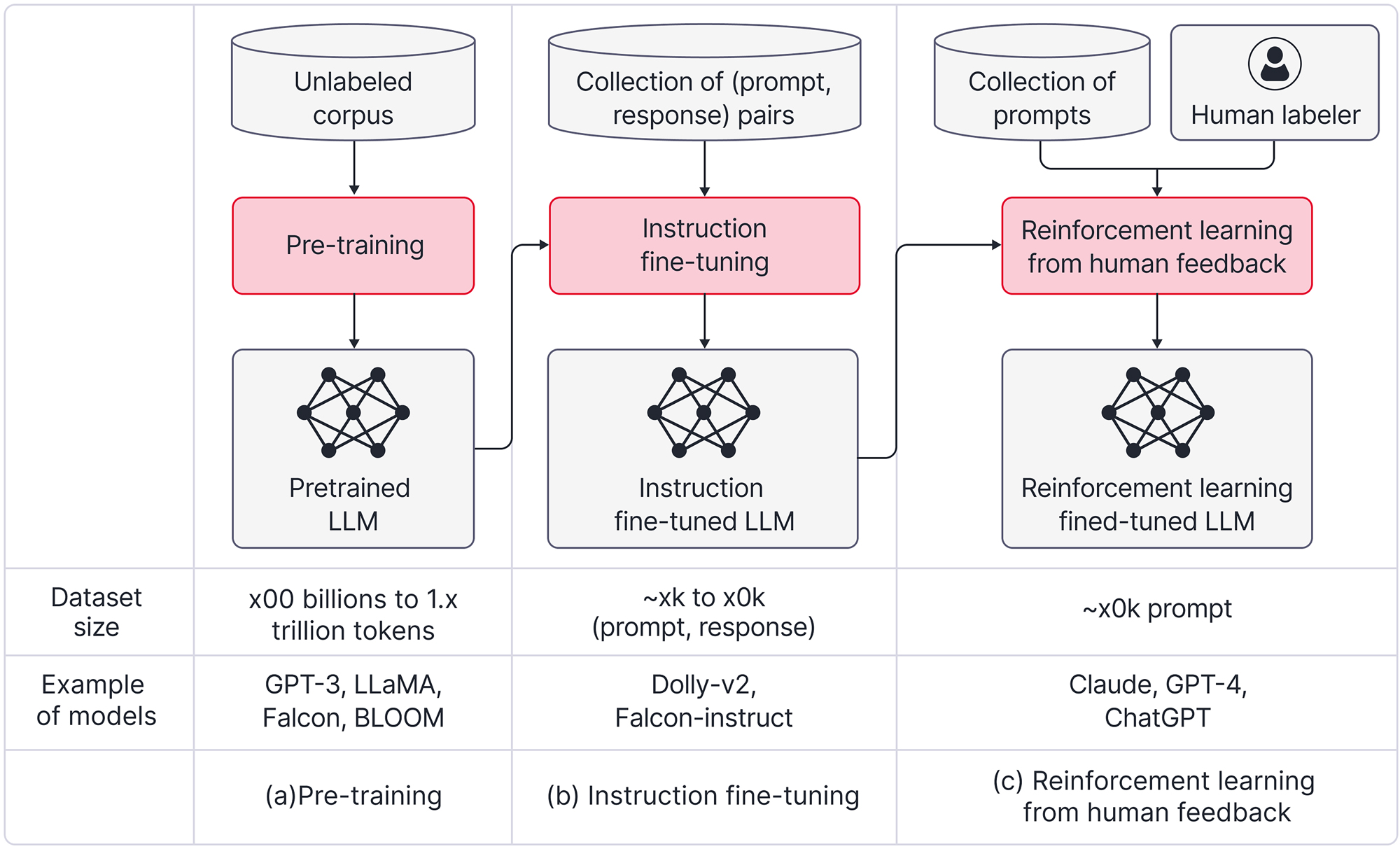

One major hurdle in improving Siri's performance with LLMs is the quality and quantity of its training data. The sheer volume of data needed to train effective LLMs is immense, and ensuring this data is diverse and unbiased is crucial for creating a fair and accurate virtual assistant.

The Need for High-Quality, Diverse Datasets

Siri's current training data likely suffers from limitations in size and representativeness. This can lead to significant biases in its responses.

- Gender Bias: Siri might respond differently to male versus female voices or exhibit gender stereotypes in its suggestions.

- Geographical Bias: Its understanding of local dialects and cultural nuances might be limited to specific regions.

- Socioeconomic Bias: Siri's responses might reflect biases based on socioeconomic backgrounds, leading to unequal or unfair interactions.

Biased data results in biased AI, which can perpetuate harmful stereotypes and inequalities. Fairness and inclusivity are paramount in developing responsible AI, necessitating more representative datasets that accurately reflect the diversity of the global population.

Strategies for Data Augmentation and Improvement

Addressing data scarcity and bias requires a multi-pronged approach:

- Synthetic Data Generation: Creating artificial data that complements real-world data can significantly increase training data volume while maintaining privacy.

- Data Cleaning Techniques: Implementing robust data cleaning processes can eliminate noisy, irrelevant, or biased data points from the training set.

- Crowdsourcing: Leveraging human annotators to improve data quality and collect diverse examples is a valuable approach, although it can be costly and time-consuming.

- Partnerships with Data Providers: Collaborating with reputable data providers can access large, high-quality datasets while ensuring ethical data sourcing.

- Privacy-Preserving Data Collection Methods: Employing techniques like federated learning can enable data collection without compromising user privacy.

Choosing the best data augmentation strategy involves careful consideration of cost, efficiency, and the potential for introducing new biases.

Computational Limitations and Efficiency of LLM Integration with Siri

Integrating powerful LLMs into Siri presents significant computational challenges. LLMs are computationally expensive, requiring substantial processing power and memory. This poses difficulties in ensuring real-time processing for a smooth user experience.

The Challenge of Real-time Processing and Low-Latency Responses

The sheer size of LLMs poses a significant hurdle for on-device processing, particularly on resource-constrained mobile devices. Achieving real-time responses with low latency is crucial for a positive user experience.

- Large Model Sizes: The large parameter counts of LLMs require significant computational resources, impacting response speed.

- On-Device Processing: Processing requests entirely on the device minimizes latency but demands efficient model compression techniques.

- Accuracy vs. Efficiency: Balancing model accuracy with computational efficiency is a critical trade-off.

Optimizing Siri's Architecture for LLM Integration

Optimizing Siri's architecture for efficient LLM integration is crucial:

- Modular Design: A modular architecture allows for selective loading and utilization of LLM components, improving efficiency.

- Hybrid Approaches: Combining rule-based systems with LLMs can handle simple requests efficiently while utilizing LLMs for more complex tasks.

- Efficient Data Flow: Optimizing data flow between different components minimizes processing overhead and latency.

These architectural improvements can lead to a more responsive and efficient Siri, even with complex LLM integration.

Addressing the Complexity of Natural Language Understanding and Generation

Siri faces significant challenges in understanding and responding to the nuances of human language. Ambiguity and context are crucial factors that heavily influence the accuracy and relevance of responses.

Handling Ambiguity and Context in User Requests

Interpreting ambiguous user requests requires advanced NLP techniques.

- Ambiguous Queries: Phrases with multiple meanings pose significant challenges in accurately determining user intent.

- Context Modeling: Maintaining context across multiple turns in a conversation is critical for coherent and relevant responses.

- Knowledge Representation: Integrating external knowledge bases and leveraging knowledge graphs can enhance Siri’s understanding of the world.

Advanced NLP techniques, such as contextual embeddings and memory mechanisms, are critical in improving Siri's ability to handle ambiguity and maintain context.

Generating Coherent and Engaging Responses

Generating natural-sounding and relevant responses is essential for a positive user experience.

- Fluency and Coherence: Siri's responses should flow naturally and maintain logical coherence.

- Engagement: Responses should be engaging and avoid repetitive or canned answers.

- Personalization: Adapting responses to individual user preferences and contexts improves user satisfaction.

Techniques like reinforcement learning can fine-tune LLMs to generate more natural, engaging, and personalized responses.

Conclusion

Improving Apple's LLM Siri presents significant challenges in data acquisition, computational efficiency, and natural language understanding. Addressing these challenges requires a multi-faceted approach including data augmentation strategies, architectural optimizations, and advanced NLP techniques. The future of Siri depends on continued research and development in these areas, leading to more powerful and engaging virtual assistant experiences. Improving Apple's LLM Siri requires a collaborative effort from researchers, engineers, and users alike. Let's continue the conversation about improving Apple's LLM Siri and explore innovative solutions to propel this technology forward.

Featured Posts

-

Philippines Steps Up Military Exercises With Us In Upcoming Balikatan Drills

May 20, 2025

Philippines Steps Up Military Exercises With Us In Upcoming Balikatan Drills

May 20, 2025 -

Dusan Tadic Fenerbahce Tarihine Gececek Bir Ilk

May 20, 2025

Dusan Tadic Fenerbahce Tarihine Gececek Bir Ilk

May 20, 2025 -

Abc News Show Future In Jeopardy After Layoffs

May 20, 2025

Abc News Show Future In Jeopardy After Layoffs

May 20, 2025 -

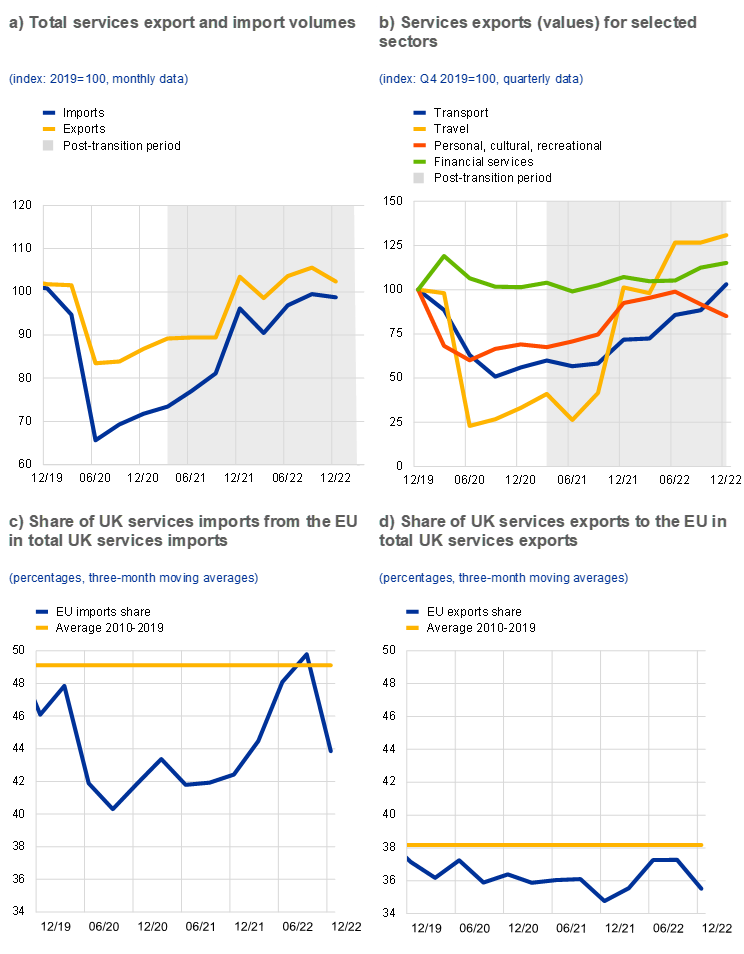

Brexits Toll Uk Luxury Exports Lag Behind In Eu Market

May 20, 2025

Brexits Toll Uk Luxury Exports Lag Behind In Eu Market

May 20, 2025 -

Presidentielle Cameroun 2032 La Strategie De Macron

May 20, 2025

Presidentielle Cameroun 2032 La Strategie De Macron

May 20, 2025

Latest Posts

-

Trumps Trade Policies And Gretzkys Allegiance A Look At The Stirred Debate

May 20, 2025

Trumps Trade Policies And Gretzkys Allegiance A Look At The Stirred Debate

May 20, 2025 -

Paulina Gretzkys Latest Look A Leopard Dress Inspired By The Sopranos

May 20, 2025

Paulina Gretzkys Latest Look A Leopard Dress Inspired By The Sopranos

May 20, 2025 -

Trumps Tariffs Gretzkys Loyalty A Canada Us Hockey Debate Ignited

May 20, 2025

Trumps Tariffs Gretzkys Loyalty A Canada Us Hockey Debate Ignited

May 20, 2025 -

Get To Know Paulina Gretzky Dustin Johnsons Wife Her Career And Kids

May 20, 2025

Get To Know Paulina Gretzky Dustin Johnsons Wife Her Career And Kids

May 20, 2025 -

Paulina Gretzkys Topless Selfie And Other Revealing Photos

May 20, 2025

Paulina Gretzkys Topless Selfie And Other Revealing Photos

May 20, 2025