If Algorithms Radicalize Mass Shooters, Are Tech Companies Liable?

The Role of Algorithms in Online Radicalization

Algorithms, the automated systems that rank and recommend content on online platforms, are not inherently malicious, but their design can unintentionally contribute to radicalization. This happens through several key mechanisms.

Echo Chambers and Filter Bubbles

Algorithms create personalized content feeds, often leading to "echo chambers" and "filter bubbles." These reinforce pre-existing beliefs, limiting exposure to diverse perspectives and isolating individuals within extremist viewpoints.

- Examples: Facebook's News Feed, YouTube's recommendation system, and the "For You" timeline on X (formerly Twitter) all use algorithms that personalize content and can therefore create echo chambers.

- Impact: Although personalized feeds are designed to improve the user experience, they can inadvertently isolate users within extremist online communities, reducing their exposure to counter-narratives and discouraging critical thinking.

- Studies: Researchers have reported associations between exposure to algorithmically curated extremist content and radicalization, although the findings are mixed and causation is difficult to establish. Such research has nonetheless fueled calls for algorithmic reform to reduce this risk.

Algorithmic Amplification of Hate Speech

Algorithms prioritize engagement, often inadvertently amplifying hate speech and extremist content to maximize user interaction. This makes harmful material more visible and accessible, accelerating the radicalization process.

- Examples: Trending topics on social media platforms frequently showcase inflammatory content, often regardless of its factual accuracy or harmful nature.

- Challenges: Content moderation remains a significant challenge. While AI-powered detection systems are improving, they are far from perfect, struggling to identify nuanced forms of hate speech and extremist ideologies.

- Platform Failures: Platforms have repeatedly failed to adequately address hate speech and extremist content, allowing harmful ideologies to spread and potentially facilitating radicalization.

The Spread of Conspiracy Theories and Misinformation

Algorithms significantly contribute to the rapid spread of conspiracy theories and misinformation, some of which have been linked to violence and extremism. These narratives can fuel hatred and distrust, potentially motivating individuals toward violent acts.

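- Examples: Conspiracy theories about government overreach, societal manipulation, and hidden enemies have been linked to several mass shootings. The "Great Replacement" narrative, for instance, was cited in the manifestos of the Christchurch, El Paso, and Buffalo shooters.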

- Recommendation Systems: Systems designed to suggest related content can promote misinformation by steering users toward increasingly extreme viewpoints.

- Distinguishing Harmful Content: The inherent difficulty in distinguishing between legitimate debate and harmful misinformation adds another layer of complexity to the challenge of content moderation and algorithmic accountability.

Legal and Ethical Considerations of Tech Company Liability

Determining tech company liability in cases where algorithms contribute to mass shooter radicalization presents significant legal and ethical challenges.

Section 230 and its Implications

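Section 230 of the Communications Decency Act shields online platforms from liability for user-generated content. Whether that protection extends to the algorithmic amplification of extremist content is hotly debated. The U.S. Supreme Court took up the question in Gonzalez v. Google (2023) but resolved the case without ruling on the scope of Section 230.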

- Debate over Revision: Critics argue that Section 230 shields tech companies from responsibility for the harmful consequences of their algorithms, while defenders of the law emphasize its crucial role in protecting free speech.

- Legal Ramifications: Altering Section 230 could reshape the online landscape, increasing tech company accountability but possibly at some cost to innovation and free speech.

- Legal Precedents: Existing case law on online speech and incitement to violence offers limited guidance on the unique challenges posed by algorithmically driven radicalization.

Negligence and Foreseeability

The legal concept of negligence could be applied if it can be shown that tech companies failed to take reasonable steps to prevent the foreseeable harm caused by their algorithms.

- Foreseeability of Harm: A key question is whether tech companies could reasonably have foreseen that their algorithms might contribute to radicalization and violence.

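- Burden of Proof: Establishing negligence requires proving that a duty of care existed, that it was breached, that the breach actually and proximately caused the harm, and that damages resulted. Causation is especially hard to show when the harm results from a third party's intervening criminal act, such as a shooting.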

- Legal Strategies: Potential avenues for holding tech companies accountable include civil suits alleging negligence or intentional infliction of emotional distress, class-action lawsuits, and government regulation.

Ethical Responsibilities of Tech Companies

Beyond any legal liability, tech companies have a significant ethical responsibility to mitigate the harm caused by their algorithms, one that goes further than mere compliance with the law.

- Corporate Social Responsibility: Tech companies must embrace a proactive approach to corporate social responsibility, prioritizing the safety and well-being of their users above profit maximization.

- Transparency and Accountability: Increased transparency regarding algorithm design and content moderation practices is crucial to build trust and accountability.

- Solutions: Potential solutions include improved content moderation techniques, more ethical algorithm design that prioritizes safety and well-being, and independent audits of algorithmic systems.

Conclusion

The relationship between algorithms, online radicalization, and mass shootings is complex. Although the legal landscape remains unclear and Section 230 provides significant protection, tech companies' ethical responsibility to prevent the misuse of their platforms is undeniable. The lack of clear legal precedent highlights the urgent need for ongoing discussion, debate, and potentially legislative action. We must work together to understand how algorithms may contribute to the radicalization of mass shooters and demand greater responsibility from tech companies. Contact your representatives and advocate for policy changes to address this critical issue. The lives that preventive action could save are too valuable to ignore.

Featured Posts

-

Reeves Economic Policies Echoes Of Scargills Militancy

May 31, 2025

Reeves Economic Policies Echoes Of Scargills Militancy

May 31, 2025 -

Former Nypd Commissioner Bernard Kerik Dead At 69 Remembering His Service

May 31, 2025

Former Nypd Commissioner Bernard Kerik Dead At 69 Remembering His Service

May 31, 2025 -

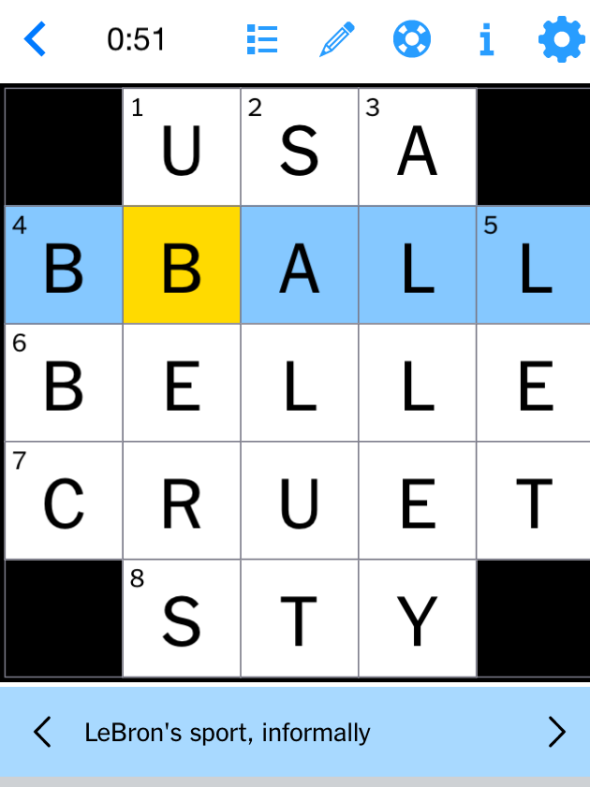

Solve The Nyt Mini Crossword Answers And Clues For March 24 2025

May 31, 2025

Solve The Nyt Mini Crossword Answers And Clues For March 24 2025

May 31, 2025 -

Understanding The Drug Addiction Crisis Among Houstons Rats

May 31, 2025

Understanding The Drug Addiction Crisis Among Houstons Rats

May 31, 2025 -

La Receta Aragonesa Mas Sencilla 3 Ingredientes Siglo Xix

May 31, 2025

La Receta Aragonesa Mas Sencilla 3 Ingredientes Siglo Xix

May 31, 2025