Controversy: Microsoft's Email System Blocks "Palestine" Keyword

The Technical Aspects of Keyword Blocking

How Does Microsoft's System Identify and Block Keywords?

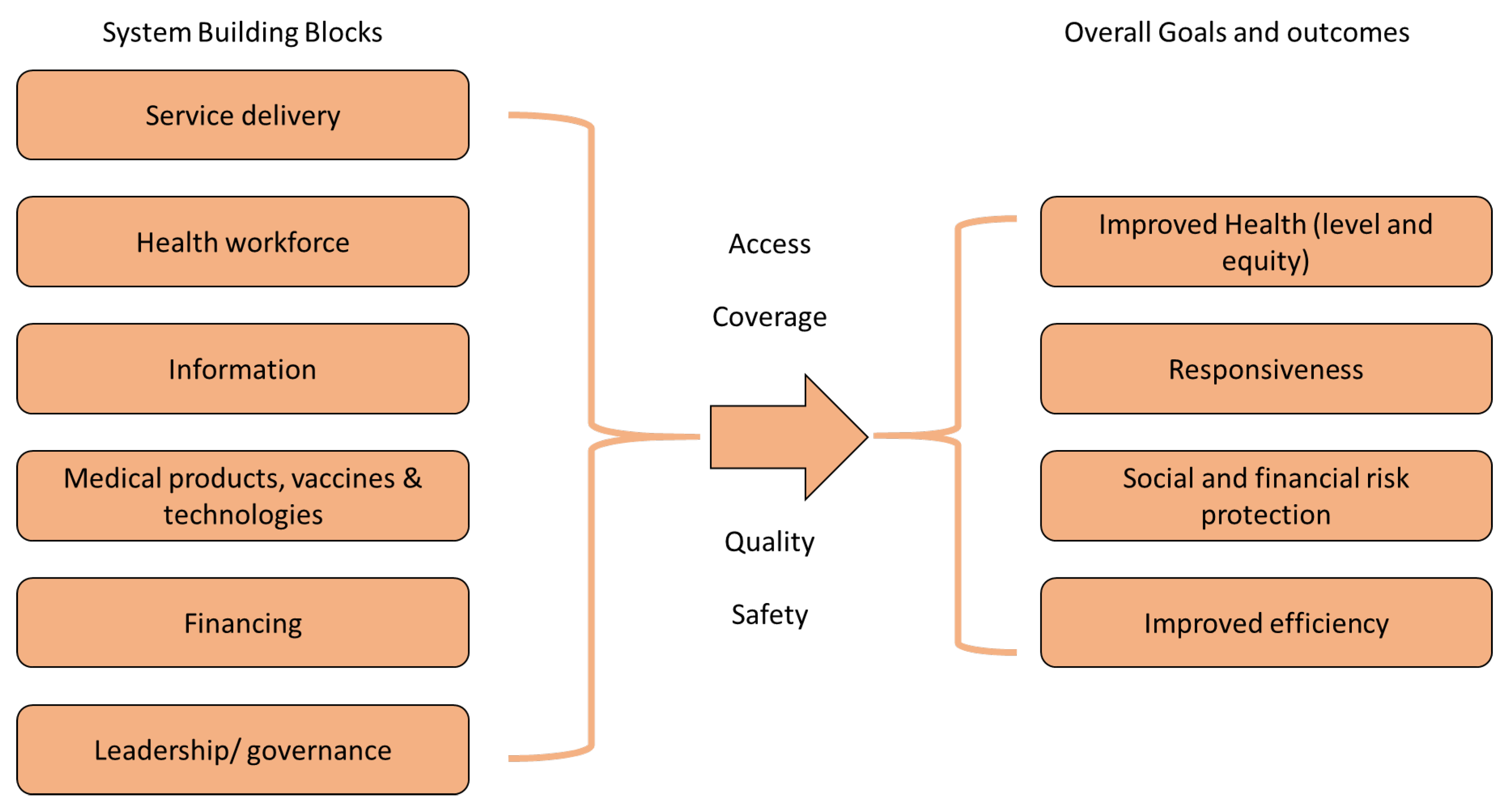

Microsoft's email system, like many others, uses automated filters to catch spam and malicious content. These filters likely combine techniques such as keyword matching, Bayesian filtering, and machine learning models trained on large datasets of spam and legitimate email. The keyword "Palestine", seemingly innocuous, might be flagged because the system associates it with content it has been trained or configured to treat as undesirable. That association could stem from training data that reflects biased reporting or from a misconfigured filtering rule.
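The training-data explanation can be illustrated with a toy Bayesian filter. This is a minimal sketch, assuming a textbook multinomial naive Bayes classifier with add-one smoothing and a made-up six-message training set; it is not Microsoft's classifier, and the class name `NaiveBayesFilter` is hypothetical. Because every training message that mentions the word is labeled spam, the word alone pushes an ordinary lecture notice past a 0.5 threshold.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


class NaiveBayesFilter:
    """Toy multinomial naive Bayes spam filter (hypothetical, for illustration)."""

    def __init__(self) -> None:
        self.word_counts = {"spam": Counter(), "ham": Counter()}
        self.doc_counts = Counter()

    def train(self, text: str, label: str) -> None:
        """Add one labeled message ("spam" or "ham") to the counts."""
        self.word_counts[label].update(tokenize(text))
        self.doc_counts[label] += 1

    def spam_probability(self, text: str) -> float:
        """Return P(spam | text) using add-one (Laplace) smoothing."""
        vocab = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        total_docs = sum(self.doc_counts.values())
        log_scores = {}
        for label, counts in self.word_counts.items():
            total_words = sum(counts.values())
            score = math.log(self.doc_counts[label] / total_docs)  # log prior
            for token in tokenize(text):
                score += math.log((counts[token] + 1) / (total_words + len(vocab)))
            log_scores[label] = score
        # P(spam) = 1 / (1 + exp(log P(ham) - log P(spam))); clamp to avoid overflow.
        diff = min(log_scores["ham"] - log_scores["spam"], 700.0)
        return 1.0 / (1.0 + math.exp(diff))


# Made-up, deliberately skewed training data: every message containing
# the word "palestine" is labeled spam, and no legitimate one contains it.
nb = NaiveBayesFilter()
for text in (
    "forward this palestine petition to everyone now",
    "urgent palestine appeal click the link now",
    "win a free prize click now",
):
    nb.train(text, "spam")
for text in (
    "meeting notes for the history seminar",
    "draft of the conference paper attached",
    "lunch on friday with the research group",
):
    nb.train(text, "ham")

print(round(nb.spam_probability("palestine history lecture"), 2))  # 0.59
print(round(nb.spam_probability("history lecture"), 2))  # 0.32
```

The bias here lives in the labels, not in the algorithm: if the word appeared roughly equally often in both classes, its likelihood ratio would be close to 1 and it would barely move the score.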

- False Positives: If the system relies on keyword matching, it is likely to produce false positives, incorrectly blocking legitimate emails that contain the word "Palestine". This could significantly affect individuals and organizations discussing Palestinian issues, culture, or politics.

- Lack of Transparency: Microsoft has not disclosed the specific mechanisms behind its keyword filtering, which raises concerns. Without knowing how the system identifies and blocks keywords, outside observers cannot assess its accuracy or fairness.

- Feature or Bug?: Whether this is a deliberate feature or an unintentional bug remains unclear. The absence of a clear explanation from Microsoft fuels speculation and adds to the controversy.
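A minimal sketch of plain keyword matching shows how the false positives described above arise. It assumes a hypothetical one-word blocklist and whole-word, case-insensitive matching; Microsoft has not published its rules, so this illustrates the technique, not its implementation. Both legitimate messages are blocked, while a spam message that avoids the listed word passes.

```python
import re

# Hypothetical blocklist; Microsoft's actual rules, if any, are not public.
BLOCKLIST = frozenset({"palestine"})


def keyword_block(text: str, blocklist: frozenset[str] = BLOCKLIST) -> bool:
    """Return True if any blocklisted word appears as a whole word, ignoring case."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return not blocklist.isdisjoint(words)


messages = {
    "academic": "Reading list for next week's seminar on the history of Palestine.",
    "cultural": "Photos from the Palestine embroidery exhibition are attached.",
    "spam": "You have won a prize! Click here to claim it.",
}

for name, body in messages.items():
    print(name, keyword_block(body))
# academic True
# cultural True
# spam False
```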

The Scope of the Block: Beyond "Palestine"

The concern extends beyond the single keyword "Palestine." It raises questions about whether other similar keywords or phrases related to geopolitical conflicts, particularly those surrounding the Israeli-Palestinian conflict, are also subject to the same automated censorship.

- Related Keywords: Terms like "Palestinian," "West Bank," "Gaza Strip," "occupation," and even seemingly neutral phrases related to the region might be caught in this broad filter. The potential for collateral damage is significant.

- Inconsistent Application: The inconsistent application of these filters could lead to disproportionate censorship, impacting certain groups or viewpoints more than others. This raises serious concerns about biased algorithmic design.

- Contextual Understanding: A filter that blocks on the word alone cannot take context into account. A legitimate academic discussion of Palestinian history should not be treated the same as a malicious spam email.
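The contrast between keyword matching and context can be sketched under stated assumptions. The first rule is a hypothetical broad blocklist of the related terms listed above, matched as substrings; the second ignores topic words entirely and blocks only when at least two hypothetical behavioural signals (unknown sender, a link, urgency wording) co-occur. The signal rule is a crude stand-in for contextual understanding, not a model of it, and every name, term, and threshold here is made up for illustration.

```python
import re
from dataclasses import dataclass


@dataclass
class Email:
    sender_known: bool  # hypothetical: has the recipient corresponded with this sender?
    body: str


# Hypothetical broad blocklist built from the region-related terms listed above.
BROAD_BLOCKLIST = ("palestine", "palestinian", "west bank", "gaza strip", "occupation")

# Hypothetical behavioural spam signals; topic words play no part in them.
LINK = re.compile(r"https?://", re.IGNORECASE)
URGENCY = re.compile(r"\b(urgent|act now|limited time|claim)\b", re.IGNORECASE)
BLOCK_THRESHOLD = 2  # block only when at least two signals co-occur


def broad_keyword_block(msg: Email) -> bool:
    """Block if any listed term appears anywhere in the body (substring match)."""
    body = msg.body.lower()
    return any(term in body for term in BROAD_BLOCKLIST)


def signal_score(msg: Email) -> int:
    """Count spam signals: unknown sender, a link, urgency wording."""
    return (
        int(not msg.sender_known)
        + int(LINK.search(msg.body) is not None)
        + int(URGENCY.search(msg.body) is not None)
    )


def signal_block(msg: Email) -> bool:
    """Block only when enough independent spam signals are present."""
    return signal_score(msg) >= BLOCK_THRESHOLD


inbox = {
    "seminar": Email(True, "Slides on the history of the Gaza Strip: https://example.edu/slides"),
    "prize": Email(False, "URGENT: claim your prize at http://example.com/win"),
    "newsletter": Email(False, "This month's newsletter covers farming in the West Bank."),
}

for name, msg in inbox.items():
    print(name, broad_keyword_block(msg), signal_block(msg))
# seminar True False
# prize False True
# newsletter True False
```

The broad blocklist stops the seminar slides and the newsletter but lets the prize scam through; the signal rule does the reverse. A production filter would more likely use trained classifiers than fixed rules, but the design principle is the same: the topic of a message should not decide its fate on its own.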

The Political and Ethical Implications

Freedom of Speech and Censorship Concerns

The blocking of the keyword "Palestine" represents a serious infringement on freedom of speech. It silences legitimate discussions about a politically sensitive region and undermines the ability of individuals and organizations to express their views openly.

- Impact on Individuals and Organizations: Journalists, academics, human rights organizations, and individuals involved in Palestinian advocacy are all affected by this censorship, hindering their ability to communicate effectively.

- International Human Rights Standards: This action runs counter to internationally recognized human rights principles that guarantee freedom of expression and access to information, and it potentially violates fundamental rights.

- Chilling Effect: The mere existence of such a filter could create a chilling effect, discouraging individuals from using the word "Palestine" in their emails, even in legitimate contexts.

Algorithmic Bias and the Role of Technology in Shaping Narratives

This incident underscores the dangers of algorithmic bias. The design and training data of automated systems can reflect and reinforce existing power structures and societal biases, unintentionally marginalizing certain perspectives.

- Reinforcing Existing Power Structures: Algorithmic bias can lead to the silencing of marginalized voices and the amplification of dominant narratives. In this case, the potential for biased algorithms to unfairly target discussions related to Palestine is evident.

- Algorithmic Accountability: The need for algorithmic accountability and transparency is paramount. Developers and companies deploying such systems must be held responsible for the potential consequences of their algorithms.

- Implicit Bias in Technology: This case serves as a stark reminder that implicit biases in society can manifest in technological systems, leading to discriminatory outcomes.

Microsoft's Response and Potential Solutions

Microsoft's Official Statements and Actions

At the time of writing, Microsoft has not yet issued a comprehensive public statement directly addressing the controversy. The lack of a clear, transparent response only exacerbates the concerns.

- Lack of Transparency: The silence surrounding this incident underscores the need for greater transparency from tech giants regarding their content moderation practices.

- Accountability: Microsoft needs to take responsibility for this issue and implement concrete solutions to prevent similar incidents from occurring in the future.

Recommendations for Improved Keyword Filtering

Several steps can be taken to prevent unintended censorship in email systems.

- Increased Transparency: Microsoft should publicly disclose its keyword filtering criteria, algorithms, and datasets to allow for independent scrutiny.

- Improved Algorithm Design: The algorithms should be designed to incorporate contextual understanding and avoid relying solely on simple keyword matching.

- User Feedback Mechanisms: A robust feedback mechanism should allow users to report false positives and provide input for improving the system's accuracy.

- Human Oversight: Human review should be integrated into the process, particularly for cases involving potentially sensitive keywords.

- Robust Testing and Quality Assurance: Thorough testing and quality assurance procedures are crucial to identifying and correcting biases before deployment.
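Two of the recommendations above, testing and human oversight, can be combined in a short sketch under stated assumptions. `false_positive_rate_by_topic` measures how often a filter blocks hand-labeled legitimate messages in each topic, so a gap between topics surfaces before deployment; `route` is a hypothetical three-way rule that sends mid-range scores to a human reviewer instead of blocking them. The filter, messages, topics, and thresholds are all made up, and reviewer decisions on routed messages could also serve as the user-feedback signal described above.

```python
from collections import defaultdict
from typing import Callable, Iterable


def false_positive_rate_by_topic(
    legit_messages: Iterable[tuple[str, str]],
    is_blocked: Callable[[str], bool],
) -> dict[str, float]:
    """Given known-legitimate (topic, body) pairs, return the share blocked per topic."""
    blocked = defaultdict(int)
    total = defaultdict(int)
    for topic, body in legit_messages:
        total[topic] += 1
        if is_blocked(body):
            blocked[topic] += 1
    return {topic: blocked[topic] / total[topic] for topic in total}


def route(score: float, block_at: float = 0.9, review_at: float = 0.5) -> str:
    """Hypothetical three-way routing: block, ask a human reviewer, or deliver."""
    if score >= block_at:
        return "block"
    if score >= review_at:
        return "human_review"
    return "deliver"


def naive_filter(body: str) -> bool:
    """Hypothetical filter under test: blocks any message containing the word."""
    return "palestine" in body.lower()


# Hand-labeled legitimate messages grouped by topic (made up for illustration).
test_set = [
    ("regional", "Lecture notes on Palestine in the 1920s."),
    ("regional", "Photos from the Palestine embroidery exhibition."),
    ("general", "Match report from Saturday's league game."),
    ("general", "Draft agenda for the budget meeting."),
]

print(false_positive_rate_by_topic(test_set, naive_filter))
# {'regional': 1.0, 'general': 0.0}
print(route(0.95), route(0.6), route(0.2))
# block human_review deliver
```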

Conclusion

The blocking of the keyword "Palestine" by Microsoft's email system is a concerning incident that highlights the potential for technological bias and unintended censorship. This situation underscores the importance of algorithmic accountability, transparency, and the need for careful consideration of ethical implications when designing and deploying automated content moderation systems. The lack of transparency surrounding this incident raises significant questions about the power of these systems and their potential for misuse. The suppression of the keyword "Palestine" is not just a technical issue; it's a matter of fundamental human rights.

Call to Action: Demand greater transparency and accountability from tech giants like Microsoft. Let your voice be heard and help us demand an end to the censorship of terms like "Palestine" in email and other digital communication platforms. Share this article and let's raise awareness of this important issue. #Palestine #Microsoft #EmailCensorship #TechEthics #AlgorithmicBias

Featured Posts

-

Escape To The Country Choosing The Right Rural Property

May 24, 2025

Escape To The Country Choosing The Right Rural Property

May 24, 2025 -

Porsche 911 80 Millio Forintba Keruelt Az Extrak

May 24, 2025

Porsche 911 80 Millio Forintba Keruelt Az Extrak

May 24, 2025 -

Glastonbury Festival 2025 Lineup Details After Leak Ticket Availability

May 24, 2025

Glastonbury Festival 2025 Lineup Details After Leak Ticket Availability

May 24, 2025 -

Live Pedestrian Accident On Princess Road Emergency Response Underway

May 24, 2025

Live Pedestrian Accident On Princess Road Emergency Response Underway

May 24, 2025 -

Italy Eases Citizenship Requirements Great Grandparent Descent

May 24, 2025

Italy Eases Citizenship Requirements Great Grandparent Descent

May 24, 2025

Latest Posts

-

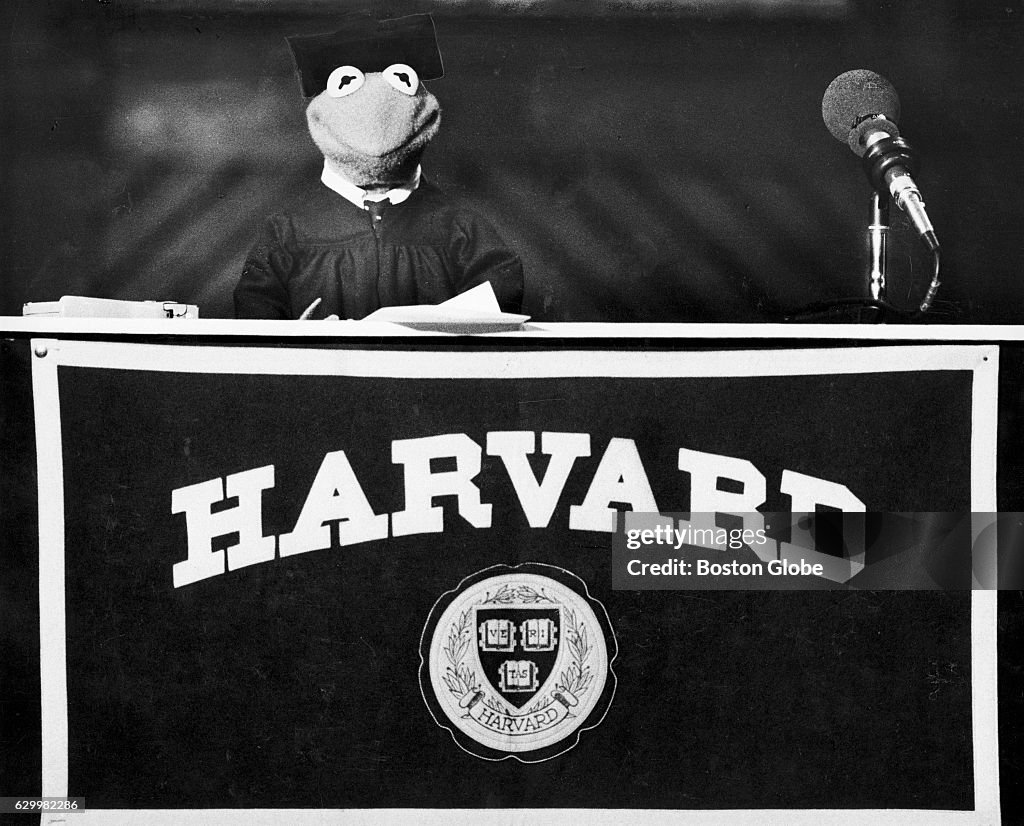

Celebrated Amphibian Speaker At University Of Maryland Commencement Ceremony

May 24, 2025

Celebrated Amphibian Speaker At University Of Maryland Commencement Ceremony

May 24, 2025 -

Kermits Words Of Wisdom University Of Maryland Commencement Speech Analysis

May 24, 2025

Kermits Words Of Wisdom University Of Maryland Commencement Speech Analysis

May 24, 2025 -

University Of Maryland Announces Kermit The Frog For 2025 Graduation

May 24, 2025

University Of Maryland Announces Kermit The Frog For 2025 Graduation

May 24, 2025 -

World Renowned Amphibian To Address University Of Maryland Graduates

May 24, 2025

World Renowned Amphibian To Address University Of Maryland Graduates

May 24, 2025 -

University Of Marylands Unexpected 2025 Commencement Speaker Kermit The Frog

May 24, 2025

University Of Marylands Unexpected 2025 Commencement Speaker Kermit The Frog

May 24, 2025