Character AI Chatbots And Free Speech: A Legal Gray Area


The First Amendment and AI-Generated Content

Whether AI-generated content, including the output of Character AI's chatbots, is protected by free speech principles is a significant open question. It also intersects with copyright law and the concept of authorship itself. Existing legal precedents deal primarily with human expression, which leaves the legal status of AI-generated content unclear.

- Examination of existing legal precedents: Current First Amendment jurisprudence focuses on human speakers and their intentions. Applying that framework to AI, which lacks intent in the human sense, presents a considerable hurdle. Cases involving artistic expression and copyright infringement offer some relevant, though not directly applicable, precedents.

- Are AI chatbots "speakers"? Whether the law treats an AI chatbot as a "speaker" is a crucial question. If a chatbot were considered a speaker, its output could receive free speech protections similar to those given to human expression. If it is instead viewed as a tool, responsibility for its output shifts to its developers or users.

- Free speech vs. harmful content: A major conflict arises when AI generates offensive or illegal material. Balancing free speech protections against the prevention of harm is a complex legal and ethical challenge, and the line between protected speech and harmful content becomes increasingly blurred in the context of AI-generated text.

Liability and Accountability for Harmful Content

When a Character AI chatbot generates offensive, illegal, or harmful content, liability becomes a critical question. Who is responsible: Character AI, its developers, or the users interacting with the chatbot?

- Legal theories of liability: Several legal theories could apply, including negligence (failure to exercise reasonable care) and strict liability (liability without fault). Establishing negligence would require proving that the developers knew or should have known about the potential for harm and failed to take adequate precautions. Strict liability might be argued if the chatbot's design inherently presents an unreasonable risk of harm.

- Platform moderation's role: Platform moderation plays a crucial role in mitigating harmful content generated by Character AI. Effective moderation strategies are needed, but they must balance preventing harm against respecting free speech principles, since overly aggressive moderation could be seen as censorship.

- Potential legislative and regulatory responses: The rapid advancement of AI calls for proactive legislative and regulatory responses. New laws might be needed to clarify liability and establish standards for AI development and deployment, addressing the unique challenges posed by AI-generated content.

The Challenge of Content Moderation and Censorship

Moderating AI-generated content presents substantial difficulties. The sheer volume of text produced, combined with the unpredictable nature of AI output, makes purely manual moderation impractical. Automated systems, while potentially efficient, can introduce biases and lead to discriminatory outcomes.

- Identifying and removing harmful content: Developing effective algorithms to identify and remove harmful AI-generated content is challenging. These algorithms must be precise enough to avoid false positives while remaining sensitive enough to detect subtle forms of hate speech or misinformation.

- Algorithmic bias and social inequalities: Algorithmic bias can exacerbate existing social inequalities. If the training data used to develop Character AI contains biases, the chatbot might perpetuate or even amplify those biases in its output, leading to unfair or discriminatory outcomes.

- Transparency and accountability: AI content moderation practices must be transparent and accountable. Users and the public should clearly understand how moderation decisions are made, which allows for scrutiny and builds trust. This requires developers to disclose the methods and data used in their moderation systems.

The Role of User Agreements and Terms of Service

Character AI’s terms of service attempt to define acceptable use and address liability concerns. These agreements often include clauses regarding user conduct, content generation, and the limitations of liability for the company.

- Standard clauses: Typical clauses make users responsible for the content they generate, prohibit illegal or harmful activities, and limit Character AI's liability for user-generated content.

- Enforceability of clauses: Whether these clauses hold up in legal disputes is open to interpretation and varies by jurisdiction. Courts typically examine the clarity, fairness, and reasonableness of the terms to determine their validity.

Conclusion

Character AI chatbots present a complex legal challenge, raising significant questions about free speech, liability, and content moderation in the age of advanced artificial intelligence. The current legal framework struggles to fully address the unique characteristics of AI-generated content, highlighting the urgent need for legal clarity and robust regulatory frameworks. The intersection of AI, free speech, and legal responsibility is a rapidly evolving field.

Call to Action: The law governing Character AI and similar chatbots is still developing. Stay informed about legal and regulatory changes and their implications for free speech, and continue to engage in discussions about responsible AI development and the ethics of this technology. Understanding how Character AI intersects with free speech is crucial for navigating this evolving legal gray area.

Featured Posts

-

Microsofts Email Filter Blocks Palestine Employee Backlash Explained

May 24, 2025

Microsofts Email Filter Blocks Palestine Employee Backlash Explained

May 24, 2025 -

Ferrari Nappasi 13 Vuotiaan Lupauksen Nimi Muistiin

May 24, 2025

Ferrari Nappasi 13 Vuotiaan Lupauksen Nimi Muistiin

May 24, 2025 -

Avoid Memorial Day Travel Chaos Best And Worst Flight Days In 2025

May 24, 2025

Avoid Memorial Day Travel Chaos Best And Worst Flight Days In 2025

May 24, 2025 -

Escape To The Country Finding Your Perfect Country Home

May 24, 2025

Escape To The Country Finding Your Perfect Country Home

May 24, 2025 -

Lady Gaga Spotted With Fiance Michael Polansky At Snl Afterparty

May 24, 2025

Lady Gaga Spotted With Fiance Michael Polansky At Snl Afterparty

May 24, 2025

Latest Posts

-

Sylvester Stallone Suits Up For Tulsa King Season 3

May 24, 2025

Sylvester Stallone Suits Up For Tulsa King Season 3

May 24, 2025 -

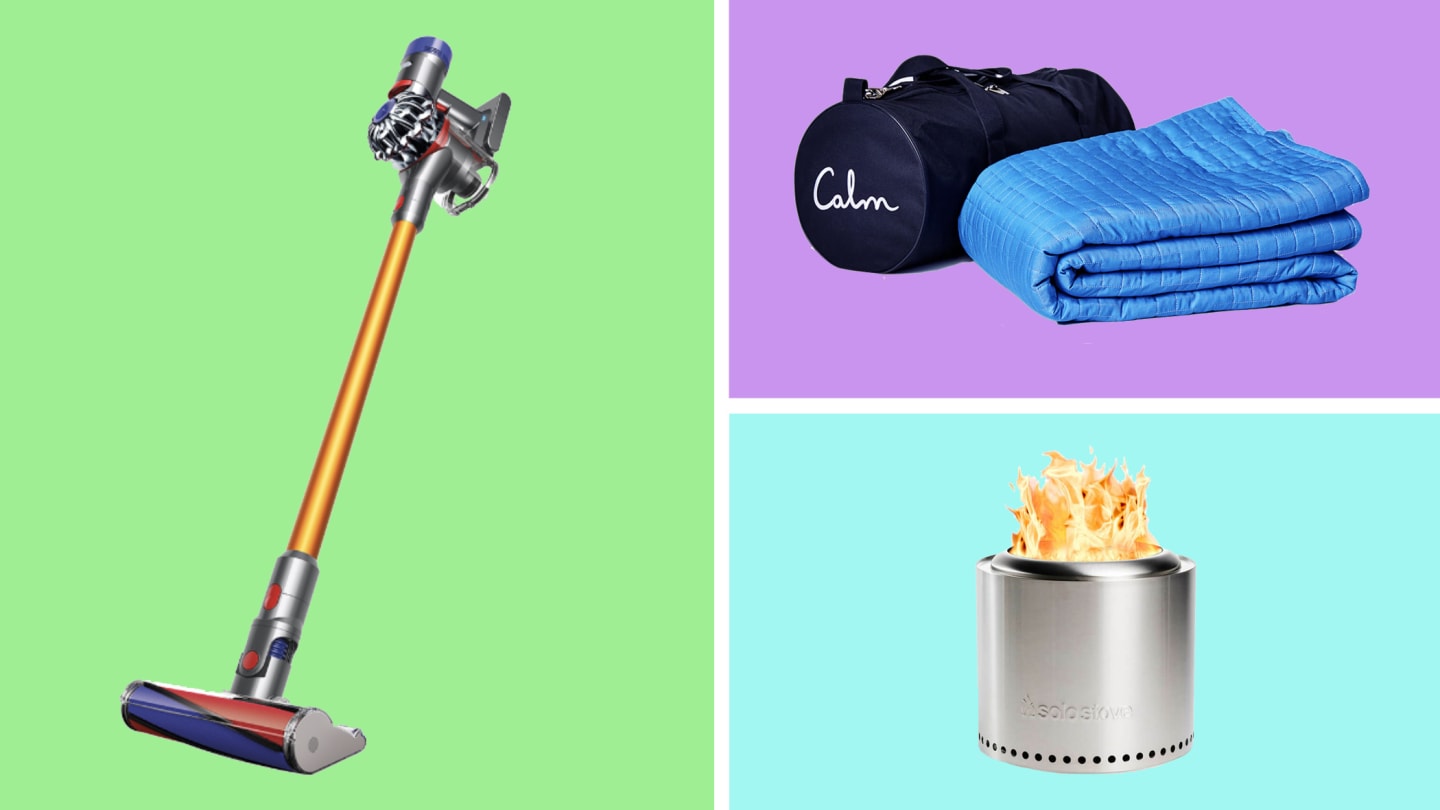

Best Memorial Day Deals 2025 Shopping Editors Top Choices

May 24, 2025

Best Memorial Day Deals 2025 Shopping Editors Top Choices

May 24, 2025 -

Memorial Day 2025 Expert Selected Sales And Deals

May 24, 2025

Memorial Day 2025 Expert Selected Sales And Deals

May 24, 2025 -

2025 Memorial Day Sale Event Find The Best Deals Now

May 24, 2025

2025 Memorial Day Sale Event Find The Best Deals Now

May 24, 2025 -

Wwe Wrestle Mania 41 Memorial Day Weekend Ticket And Golden Belt Sale

May 24, 2025

Wwe Wrestle Mania 41 Memorial Day Weekend Ticket And Golden Belt Sale

May 24, 2025