Building Voice Assistants: OpenAI's 2024 Breakthrough

Enhanced Natural Language Understanding (NLU) with OpenAI's Models

OpenAI's advances in Natural Language Understanding (NLU) are substantially improving the accuracy and capabilities of voice assistants, particularly in how they interpret and respond to user requests. This enhanced understanding stems from several key improvements:

- Improved Contextual Awareness in Conversations: OpenAI's models show a much stronger grasp of context within a conversation. The voice assistant can draw on earlier turns and tailor its responses accordingly, producing more natural and fluid dialogue. For example, if a user asks about the weather and then follows up with a question about travel plans, the assistant can connect the two topics.

- Reduced Ambiguity in Interpreting User Requests: Ambiguity is a common hurdle in NLU. OpenAI's models have made significant strides in reducing it, capturing the nuances of human language more reliably, including colloquialisms and incomplete sentences. This leads to fewer misinterpretations and more accurate responses.

- Enhanced Ability to Handle Complex and Nuanced Language: Modern voice assistants can now handle more sophisticated requests, understanding complex sentences with multiple clauses and implicit meanings. This improved comprehension is crucial for tasks requiring detailed instructions or intricate queries.

-

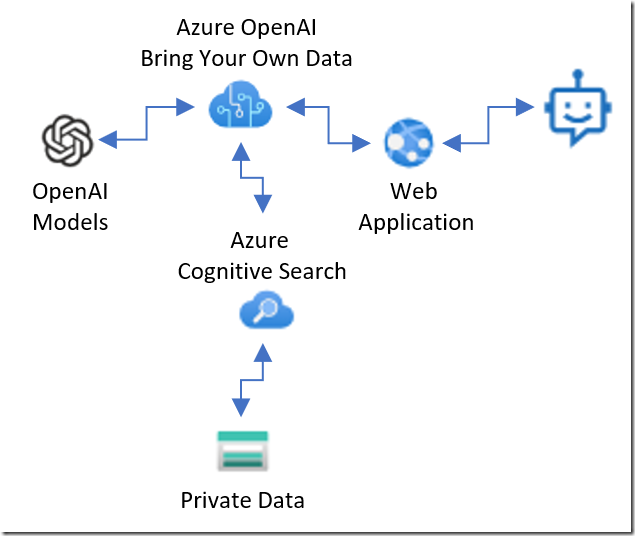

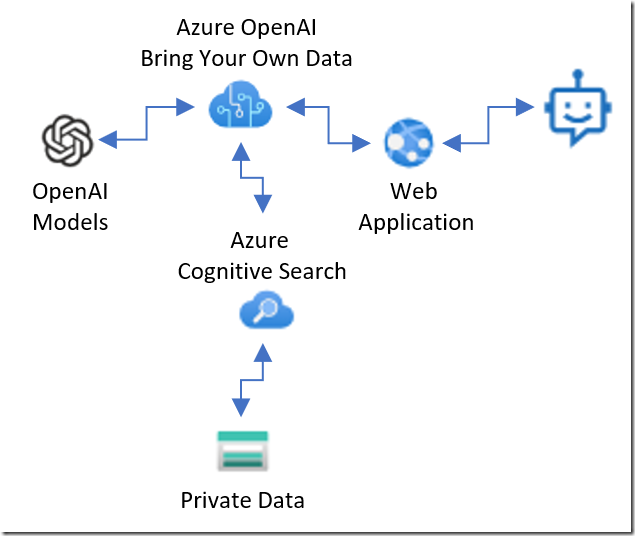

Integration with Other OpenAI Tools for Enhanced Functionality: OpenAI's NLU models seamlessly integrate with other tools in their ecosystem, like GPT models. This allows for more natural and contextually relevant responses. GPT can generate more human-like replies, enhancing the overall conversational experience. Keywords: Natural Language Understanding, NLU, Contextual Awareness, OpenAI GPT models, Conversational AI accuracy.
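
The contextual awareness described above depends on the model seeing earlier turns. With a stateless chat endpoint such as OpenAI's Chat Completions API, the application must resend those turns itself. The sketch below is a hypothetical `ConversationHistory` helper. It is not part of any OpenAI SDK. It keeps a system prompt plus a rolling window of recent turns in the role/content message shape that chat APIs accept. The `max_turns` limit is an assumed value.

```python
from dataclasses import dataclass, field


@dataclass
class ConversationHistory:
    """Hypothetical helper: a rolling window of chat turns resent with each request."""

    system_prompt: str
    max_turns: int = 10  # assumed limit; tune to the model's context window
    turns: list = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        """Record one user or assistant turn, dropping the oldest turns when full."""
        if role not in ("user", "assistant"):
            raise ValueError(f"unexpected role: {role}")
        self.turns.append({"role": role, "content": content})
        excess = len(self.turns) - self.max_turns
        if excess > 0:
            # The system prompt is stored separately, so it is never trimmed.
            del self.turns[:excess]

    def messages(self) -> list:
        """Return the system prompt followed by the retained turns, oldest first."""
        return [{"role": "system", "content": self.system_prompt}, *self.turns]


if __name__ == "__main__":
    history = ConversationHistory("You are a helpful voice assistant.", max_turns=4)
    history.add("user", "What's the weather in Lisbon tomorrow?")
    history.add("assistant", "Sunny, around 24 degrees.")
    history.add("user", "Good. Any tips for my trip there?")
    for message in history.messages():
        print(message["role"], "->", message["content"])
```

Counting turns keeps the sketch simple. A production assistant would more likely budget by tokens against the model's context window, and might summarize older turns instead of dropping them.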

Advanced Speech-to-Text and Text-to-Speech Capabilities

OpenAI's contributions extend beyond NLU to encompass significant improvements in speech processing. Their advancements in both speech-to-text and text-to-speech technologies are crucial for creating truly effective voice assistants.

- Fewer Errors in Noisy Environments: OpenAI's improved speech recognition models are more robust to background noise. The voice assistant can therefore transcribe speech accurately even in noisy settings, making it more usable in real-world scenarios.

- Improved Accuracy with Different Accents and Dialects: OpenAI's models are trained on diverse datasets, leading to significantly improved accuracy across various accents and dialects. This inclusivity makes voice assistants more accessible to a wider global audience.

- More Natural and Expressive Synthetic Voices: Text-to-speech synthesis has also seen significant advancements. OpenAI's models generate more natural-sounding voices with better intonation, inflection, and emotional expression, enhancing the user experience.

- Real-time Transcription with Minimal Latency: Seamless interaction requires transcription that is both fast and accurate. OpenAI's models provide real-time transcription with minimal latency, keeping the conversation fluid and responsive.
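
Accuracy claims like these are usually quantified with word error rate (WER). WER is the minimum number of word substitutions, deletions, and insertions needed to turn a transcript into a reference, divided by the number of reference words. The plain-Python sketch below is not OpenAI's evaluation code. It computes WER with a word-level edit-distance table and aggregates it per group, for example per accent or per noise condition. The helper names are illustrative, and normalization is limited to lowercasing and whitespace splitting, which is an assumption. Real evaluations usually also strip punctuation.

```python
def word_errors(reference: str, hypothesis: str) -> tuple:
    """Return (edit_errors, reference_word_count) for one utterance."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    # dist[i][j] = edits needed to turn the first i reference words into the first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or match when cost is 0)
            )
    return dist[len(ref)][len(hyp)], len(ref)


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER for a single utterance."""
    errors, n_words = word_errors(reference, hypothesis)
    if n_words == 0:
        raise ValueError("reference transcript must contain at least one word")
    return errors / n_words


def wer_by_group(samples) -> dict:
    """Corpus-level WER per group from (group, reference, hypothesis) tuples.

    Errors and reference words are summed within each group before dividing.
    """
    totals = {}
    for group, reference, hypothesis in samples:
        errors, n_words = word_errors(reference, hypothesis)
        total_errors, total_words = totals.get(group, (0, 0))
        totals[group] = (total_errors + errors, total_words + n_words)
    return {g: e / w for g, (e, w) in totals.items() if w > 0}


if __name__ == "__main__":
    # One deletion ("the") plus one substitution ("lights" -> "light") over 5 words
    print(word_error_rate("turn on the kitchen lights", "turn on kitchen light"))  # 0.4
```

Comparing `wer_by_group` results across accents or recording conditions gives a concrete way to check whether accuracy gains hold for all speakers rather than only on average.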

Personalized Voice Assistant Experiences

OpenAI's technology enables the creation of truly personalized voice assistant experiences, moving beyond generic responses to tailored interactions. This personalization is achieved through:

- Adaptive Learning Based on User Interaction: The voice assistant learns from each interaction, adapting its responses and behavior to the user's preferences and communication style. This ongoing learning leads to a more personalized and intuitive experience over time.

- Customization of Voice and Personality: Users might be able to customize the voice and even the personality of their voice assistant, choosing from a range of options or even training the assistant to adopt a specific communication style.

- Integration with User Data for Tailored Responses: By integrating with user data (with appropriate privacy measures), the voice assistant can provide more relevant and personalized information and assistance. This might involve tailoring recommendations, reminders, or responses based on the user's calendar, contacts, or other relevant data.

- Proactive Assistance Based on Learned User Preferences: Instead of simply reacting to requests, a personalized voice assistant can offer help proactively, based on learned user preferences and patterns. This could involve suggesting tasks, reminders, or information relevant to the user's schedule and habits.
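
One simple way to combine user data with privacy controls is to pass only opted-in details into the model's instructions. The sketch below is a hypothetical illustration. The `UserProfile` fields and the per-field consent model are assumptions, not an OpenAI feature. It builds a system prompt that includes a profile detail only if the user has agreed to share that field.

```python
from dataclasses import dataclass, field


@dataclass
class UserProfile:
    """Hypothetical per-user profile; field names are illustrative only."""

    name: str
    preferred_units: str = "metric"
    upcoming_events: list = field(default_factory=list)
    # Names of the fields the user has explicitly agreed to share with the assistant.
    consented_fields: set = field(default_factory=set)


def build_personalized_prompt(base_prompt: str, profile: UserProfile) -> str:
    """Append only consented profile details to the assistant's system prompt."""
    details = []
    if "name" in profile.consented_fields:
        details.append(f"The user's name is {profile.name}.")
    if "preferred_units" in profile.consented_fields:
        details.append(f"Use {profile.preferred_units} units.")
    if "upcoming_events" in profile.consented_fields and profile.upcoming_events:
        details.append("Upcoming events: " + "; ".join(profile.upcoming_events) + ".")
    # With no consented details, the generic prompt is returned unchanged.
    if not details:
        return base_prompt
    return base_prompt + "\n" + "\n".join(details)


if __name__ == "__main__":
    profile = UserProfile(
        name="Ana",
        upcoming_events=["Dentist, Tuesday 10:00"],
        consented_fields={"name", "upcoming_events"},
    )
    print(build_personalized_prompt("You are a helpful voice assistant.", profile))
```

Making consent an explicit allowlist means that adding a new profile field shares nothing by default until the user opts in.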

Ethical Considerations and Bias Mitigation in OpenAI's Voice Assistant Development

The development of advanced voice assistants raises significant ethical considerations. OpenAI is actively working to mitigate potential biases and risks:

- Addressing Potential Biases in Training Data: Bias in training data can lead to unfair or discriminatory outcomes. OpenAI is committed to addressing this by carefully curating datasets and employing techniques to detect and mitigate biases.

- Ensuring Fairness and Equity in Voice Assistant Responses: OpenAI is striving to ensure fairness and equity in the responses its voice assistants generate, so that they do not perpetuate stereotypes or produce discriminatory outcomes.

- Promoting Transparency and User Control Over Data: Transparency about data usage and providing users with control over their data are critical for building trust. OpenAI is focused on developing responsible data handling practices.

- Mitigating the Risk of Misuse and Harmful Applications: OpenAI is actively working to reduce the risk of its technology being misused for harmful purposes, such as spreading misinformation or creating deepfakes.

Conclusion

OpenAI's 2024 breakthroughs have significantly advanced the capabilities of voice assistants, paving the way for more natural, intuitive, and personalized interactions. By improving natural language understanding, speech processing, and personalization, OpenAI is driving the evolution of conversational AI. The ethical considerations discussed above underscore the importance of responsible development in this rapidly growing field. Further research in these areas will be crucial to unlocking the full potential of voice assistant technology, so anyone building voice assistants should keep following the latest advances, including OpenAI's contributions.

Featured Posts

-

Blake Lively Vs Anna Kendrick Tracing The Rumors Of A Hollywood Rift

May 04, 2025

Blake Lively Vs Anna Kendrick Tracing The Rumors Of A Hollywood Rift

May 04, 2025 -

Singapore Election 2024 Assessing The Ruling Partys Strength

May 04, 2025

Singapore Election 2024 Assessing The Ruling Partys Strength

May 04, 2025 -

Nigel Farage Prefers Snp Win Reform Partys Shocking Stance

May 04, 2025

Nigel Farage Prefers Snp Win Reform Partys Shocking Stance

May 04, 2025 -

Another Simple Favor Blake Lively And Anna Kendricks Red Carpet Duo

May 04, 2025

Another Simple Favor Blake Lively And Anna Kendricks Red Carpet Duo

May 04, 2025 -

Marvels Thunderbolts A Critical Analysis Of The New Team

May 04, 2025

Marvels Thunderbolts A Critical Analysis Of The New Team

May 04, 2025

Latest Posts

-

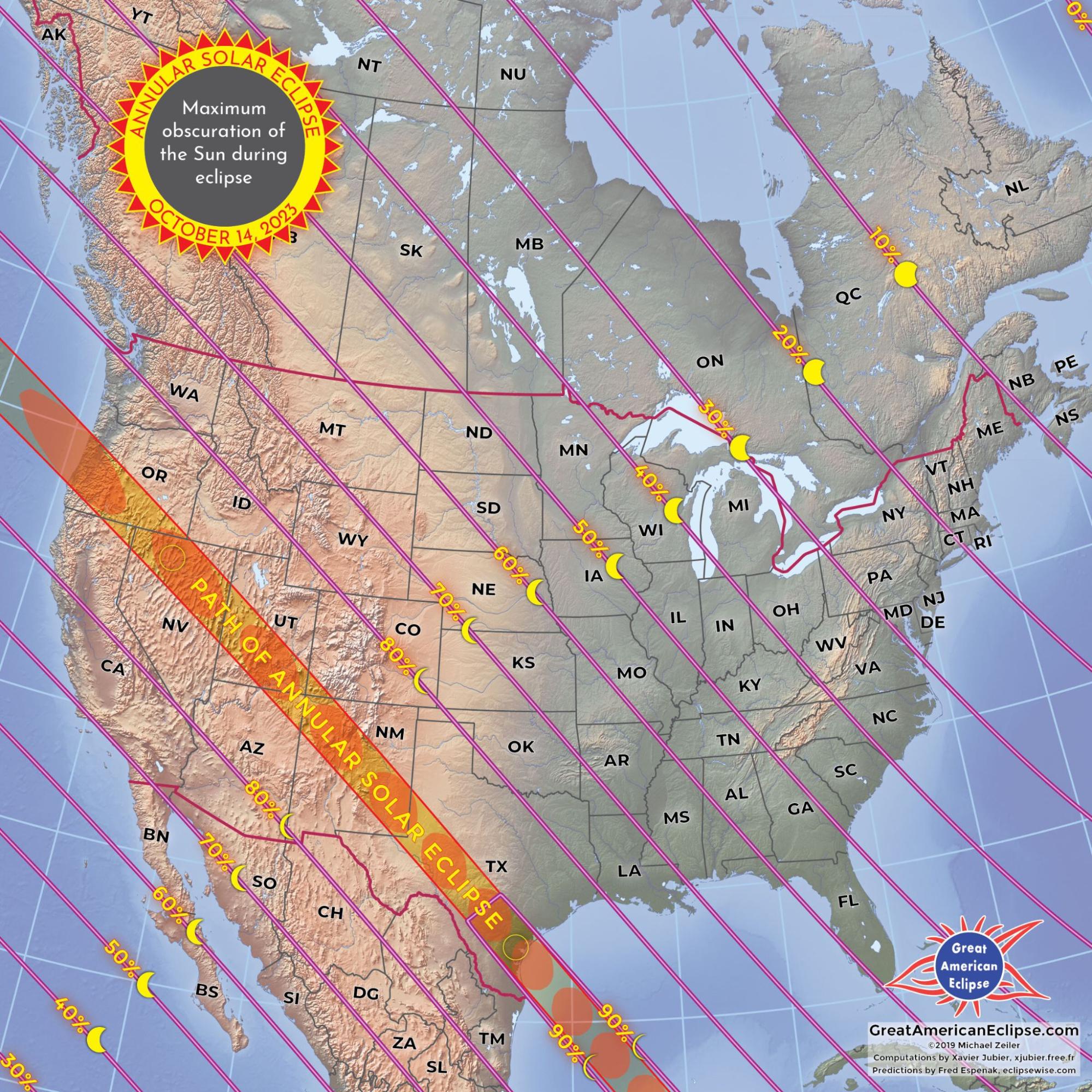

Saturdays Partial Solar Eclipse In Nyc Time Viewing Tips And Safety

May 04, 2025

Saturdays Partial Solar Eclipse In Nyc Time Viewing Tips And Safety

May 04, 2025 -

West Bengal Weather Four Districts Face Extreme Heatwave Conditions

May 04, 2025

West Bengal Weather Four Districts Face Extreme Heatwave Conditions

May 04, 2025 -

Temperature Drop In West Bengal Weather Forecast And Advisory

May 04, 2025

Temperature Drop In West Bengal Weather Forecast And Advisory

May 04, 2025 -

Heatwave Emergency Weather Update For Five South Bengal Districts

May 04, 2025

Heatwave Emergency Weather Update For Five South Bengal Districts

May 04, 2025 -

Heatwave Alert In West Bengal Four Districts On High Alert

May 04, 2025

Heatwave Alert In West Bengal Four Districts On High Alert

May 04, 2025