Building Voice Assistants Made Easy: OpenAI's 2024 Developer Showcase

Streamlined Development with OpenAI's APIs

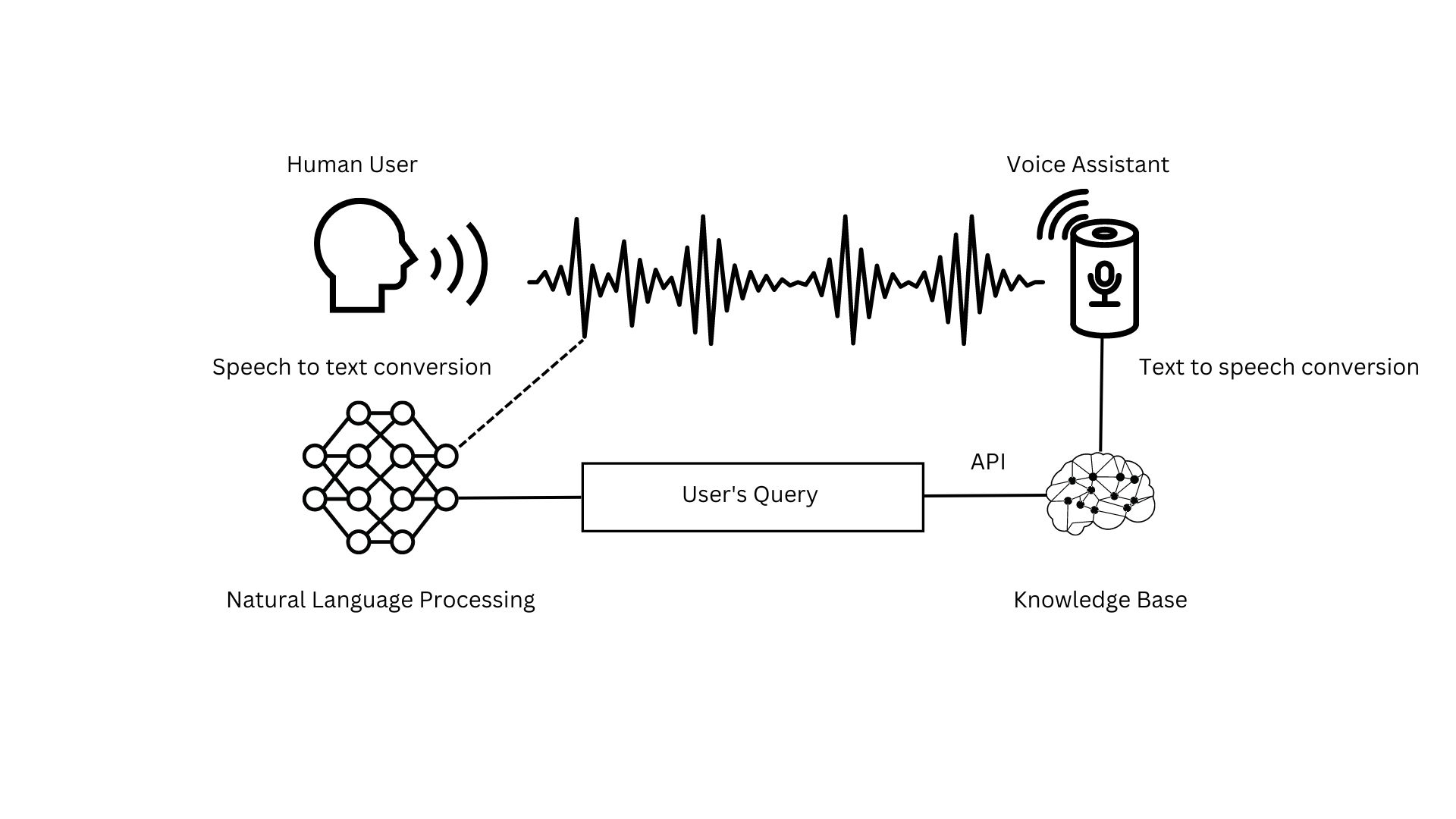

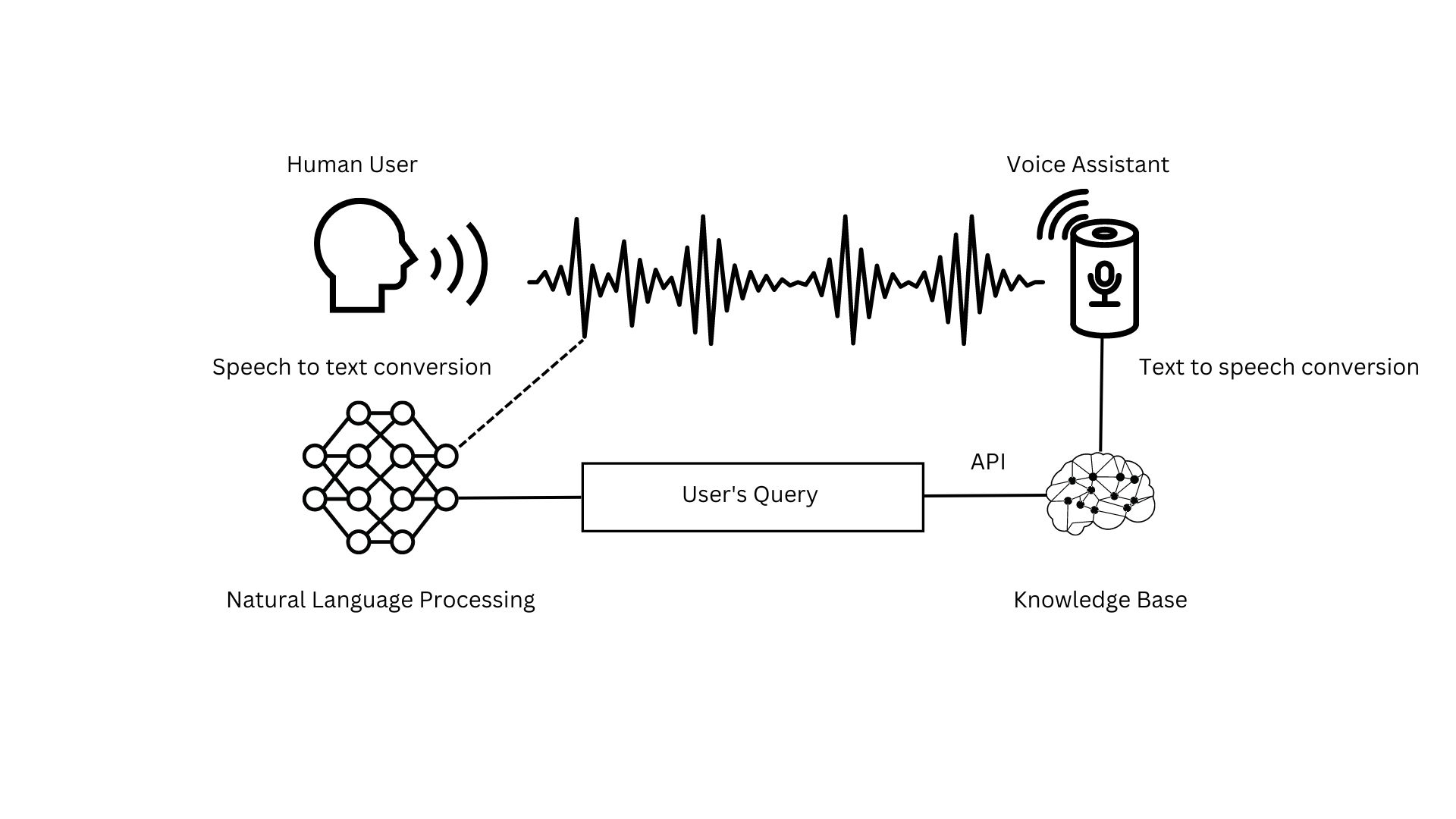

OpenAI's APIs significantly reduce the development time and complexity associated with building voice assistants. They offer a comprehensive suite of tools that handle many of the traditionally challenging aspects of voice technology, allowing developers to focus on the unique features of their applications.

- Pre-trained models for speech-to-text and text-to-speech: OpenAI's Whisper model, for example, provides state-of-the-art speech-to-text capabilities, dramatically cutting down the time and effort needed to build this core functionality. Similarly, their text-to-speech models offer high-quality, natural-sounding voices, enhancing the user experience. This significantly reduces the need for extensive training data and complex model development.

- Easy integration with existing platforms and frameworks: OpenAI's APIs are designed for seamless integration with popular programming languages and development frameworks, making it straightforward to incorporate voice assistant functionality into existing projects. This interoperability simplifies the development workflow and reduces the learning curve.

- Natural Language Understanding (NLU) capabilities for improved voice assistant comprehension: OpenAI's advanced NLU capabilities, powered by models like GPT, enable voice assistants to understand the nuances of human language, including context, intent, and entities. This allows for more natural and intuitive interactions, moving beyond simple keyword matching.

- Specific APIs and their applications: The Whisper API excels at converting speech to text accurately, even in noisy environments. GPT models, on the other hand, are crucial for understanding user intent and generating appropriate responses. These APIs work in tandem, creating a robust and responsive voice assistant.

For example, a simple code snippet using the Python library might look like this (simplified for illustration):

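    from openai import OpenAI

    # The client reads the API key from the OPENAI_API_KEY environment variable.
    client = OpenAI()

    # Transcribe a local audio file with the Whisper model.
    with open("audio.mp3", "rb") as audio_file:
        response = client.audio.transcriptions.create(model="whisper-1", file=audio_file)

    text = response.text

    # Process text using GPT for intent recognition and response generation...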

This simple example showcases the ease of integrating OpenAI's APIs into a voice assistant project.
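The comment at the end of the snippet leaves the GPT step open. One possible way to wire the three stages together (speech-to-text, response generation, text-to-speech) is sketched below. It assumes the 1.x `openai` Python SDK and an `OPENAI_API_KEY` environment variable. The `VoicePipeline` class, the stage names, and the model and voice choices (`gpt-4o-mini`, `tts-1`, `alloy`) are illustrative assumptions, not something the showcase prescribes.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoicePipeline:
    """Chains three stages: speech-to-text, response generation, text-to-speech.

    Each stage is a plain callable, so the OpenAI-backed versions below can be
    swapped for stubs in tests or for another provider.
    """
    transcribe: Callable[[str], str]    # audio file path -> transcript
    respond: Callable[[str], str]       # transcript -> reply text
    synthesize: Callable[[str], bytes]  # reply text -> audio bytes

    def handle(self, audio_path: str) -> tuple[str, str, bytes]:
        transcript = self.transcribe(audio_path)
        reply = self.respond(transcript)
        return transcript, reply, self.synthesize(reply)


def openai_pipeline(chat_model: str = "gpt-4o-mini") -> VoicePipeline:
    """Builds a pipeline backed by the OpenAI SDK (model names are assumptions)."""
    from openai import OpenAI  # imported lazily; requires `pip install openai`

    client = OpenAI()  # reads OPENAI_API_KEY from the environment

    def transcribe(audio_path: str) -> str:
        with open(audio_path, "rb") as audio_file:
            result = client.audio.transcriptions.create(
                model="whisper-1", file=audio_file
            )
        return result.text

    def respond(transcript: str) -> str:
        completion = client.chat.completions.create(
            model=chat_model,
            messages=[
                {"role": "system", "content": "You are a concise voice assistant."},
                {"role": "user", "content": transcript},
            ],
        )
        return completion.choices[0].message.content or ""

    def synthesize(reply: str) -> bytes:
        speech = client.audio.speech.create(
            model="tts-1", voice="alloy", input=reply
        )
        return speech.read()

    return VoicePipeline(transcribe, respond, synthesize)
```

With an API key configured, `openai_pipeline().handle("audio.mp3")` would return the transcript, the reply text, and the synthesized audio bytes. Keeping the stages as separate callables is a design choice that lets each one be tested or replaced independently.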

Enhanced Natural Language Processing (NLP) for Smarter Assistants

OpenAI's advancements in NLP are at the heart of creating smarter, more human-like voice assistants. These improvements translate to significantly enhanced user experiences.

- Improved context understanding and handling of complex queries: OpenAI's models can maintain context across multiple turns in a conversation, leading to more natural and fluid interactions. This allows users to ask complex, multi-part questions without the assistant losing track of the conversation's thread.

- More accurate intent recognition and entity extraction: The models are better at identifying the user's intent behind their requests and extracting relevant information (entities) from their speech. This results in more accurate and relevant responses.

- Reduced reliance on keyword-based triggers: Modern voice assistants rely less on pre-programmed keywords and more on understanding the semantic meaning of user input. This makes the assistants more flexible and adaptable to different phrasing and conversational styles.

- Examples of OpenAI's NLP models used for improved dialogue management: GPT models are at the forefront of this improvement. Their ability to generate contextually relevant and coherent responses is key to creating engaging and helpful voice assistants.
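In practice, multi-turn context with a chat-style API is usually kept by resending the earlier messages with each request, and intent and entities can be requested as structured output. The sketch below shows one way to do both. The `Conversation` class, the `parse_intent` helper, the prompt wording, and the JSON shape (`intent`, `entities`) are all hypothetical choices made for illustration.

```python
import json
from dataclasses import dataclass, field

# Hypothetical prompt asking the model for a machine-readable reply.
SYSTEM_PROMPT = (
    "You are a voice assistant. Reply with a JSON object containing "
    '"intent" (a short label) and "entities" (an object of extracted values).'
)


@dataclass
class Conversation:
    """Keeps recent turns so each request carries the conversation's context."""
    max_turns: int = 10  # hypothetical cap on remembered messages
    history: list = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.history.append({"role": role, "content": content})
        # Drop the oldest messages once the cap is exceeded.
        overflow = len(self.history) - self.max_turns
        if overflow > 0:
            del self.history[:overflow]

    def messages(self) -> list:
        """Returns the system prompt followed by the remembered turns."""
        return [{"role": "system", "content": SYSTEM_PROMPT}, *self.history]


def parse_intent(reply: str) -> dict:
    """Parses the model's JSON reply, falling back to an 'unknown' intent."""
    unknown = {"intent": "unknown", "entities": {}}
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return unknown
    if not isinstance(data, dict):
        return unknown
    return {
        "intent": str(data.get("intent", "unknown")),
        "entities": data.get("entities") or {},
    }
```

A typical loop would call `conv.add("user", transcript)`, pass `conv.messages()` as the `messages` argument of a chat completion request, then store the reply with `conv.add("assistant", reply)` and hand it to `parse_intent`. The fallback to an `unknown` intent matters because a model is not guaranteed to return valid JSON.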

Cost-Effective Solutions for Voice Assistant Development

Building voice assistants can be expensive, but OpenAI's tools and services offer accessible pricing models, making them a cost-effective solution for developers.

- Pay-as-you-go pricing for API usage: Developers only pay for the API calls they make, eliminating the need for large upfront investments.

- Free options for experimentation and prototyping: Free or trial usage may be available for early experiments, which lets developers prototype voice assistant ideas at little or no initial cost. Availability and limits change over time, so check OpenAI's current pricing before relying on it.

- Reduced infrastructure costs compared to building from scratch: Building a voice assistant from scratch requires significant infrastructure investment, including servers, databases, and specialized hardware. OpenAI's cloud-based services remove most of this need, significantly reducing overall costs.

- Cost-effectiveness compared to traditional methods: Relative to training and hosting speech and language models in-house, using OpenAI's services can yield savings in development time, infrastructure, and ongoing maintenance.
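Pay-as-you-go pricing makes a rough budget a matter of simple arithmetic: per-request usage multiplied by per-unit rates. The sketch below illustrates the calculation only. The `Rates` defaults are placeholder numbers, not OpenAI's actual prices, and the `monthly_cost` helper and its billing units are assumptions; substitute the figures from the current pricing page.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    """Per-unit prices in USD. The defaults are placeholders, NOT real prices."""
    stt_per_minute: float = 0.01      # speech-to-text, per audio minute
    llm_per_1k_tokens: float = 0.002  # language model, per 1,000 tokens
    tts_per_1k_chars: float = 0.02    # text-to-speech, per 1,000 characters


def monthly_cost(requests: int, audio_minutes: float, tokens: int,
                 reply_chars: int, rates: Rates = Rates()) -> float:
    """Estimates monthly spend from per-request averages."""
    per_request = (
        audio_minutes * rates.stt_per_minute
        + tokens / 1000 * rates.llm_per_1k_tokens
        + reply_chars / 1000 * rates.tts_per_1k_chars
    )
    return round(requests * per_request, 2)


# 10,000 requests averaging 30 s of audio, 500 tokens, and a 200-character reply.
estimate = monthly_cost(10_000, audio_minutes=0.5, tokens=500, reply_chars=200)
```

With the placeholder rates, each request costs 0.005 + 0.001 + 0.004 = 0.01 USD, so the estimate comes to 100.0 USD for the month.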

OpenAI's Community and Support for Developers

OpenAI fosters a vibrant community that supports developers throughout the voice assistant development lifecycle.

- Access to forums, documentation, and tutorials: OpenAI provides comprehensive documentation, tutorials, and active online forums where developers can ask questions, share their experiences, and find solutions to common problems.

- Opportunities to network with other developers: The community provides opportunities to connect with other developers working on similar projects, fostering collaboration and knowledge sharing.

- OpenAI's support channels for assistance with technical issues: OpenAI offers various support channels to help developers resolve any technical issues they encounter during the development process.

Conclusion

OpenAI's 2024 Developer Showcase has lowered the barrier to entry for building voice assistants. By providing powerful, easy-to-use APIs, advanced NLP capabilities, and a supportive community, OpenAI enables developers of all skill levels to create innovative and engaging voice experiences. Ready to start building your own intelligent voice assistant? Explore OpenAI's resources today and unlock the potential of voice technology.
