Building Voice Assistants Made Easy: Key Announcements From OpenAI's 2024 Event

Simplified Natural Language Processing (NLP) APIs

OpenAI's 2024 announcements improve the NLP APIs that voice assistants are built on. These improvements should translate to faster development cycles and more robust, user-friendly voice interfaces.

Enhanced Speech-to-Text and Text-to-Speech Capabilities

OpenAI has dramatically improved its speech recognition and text-to-speech capabilities. These advancements are crucial for building natural and intuitive voice assistants.

- Improved Accuracy: The new APIs boast significantly higher accuracy rates, reducing transcription errors and improving the overall user experience. This means less time spent on error correction and more time on core functionality.

- Multilingual Support: OpenAI's expanded multilingual support enables developers to build voice assistants for a global audience, breaking down language barriers and expanding market reach. Support for a wider range of dialects and accents has also been improved.

- Reduced Latency: Lower latency ensures a more responsive and natural conversation flow, enhancing the user experience and making the interaction feel more seamless.

- Easier Integration: The APIs are designed for easy integration with popular development platforms and frameworks, streamlining the development process and reducing integration complexities. Specific improvements include simplified SDKs and improved documentation for common platforms like iOS and Android.

These enhancements simplify the development process. For example, higher transcription accuracy means less correction logic for misheard input, although low-confidence or failed transcriptions still need to be handled. The streamlined integration with existing platforms reduces the amount of custom code required, lowering the barrier to entry for developers of all skill levels.
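To make the speech-to-text, response, and text-to-speech stages and the latency point above concrete, here is a minimal sketch of one conversational turn with end-to-end timing. It does not call any OpenAI API: `run_turn`, `TurnResult`, and the three `fake_*` functions are hypothetical names introduced for illustration, and the stand-ins would be swapped for whichever speech and language services you actually use.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class TurnResult:
    transcript: str
    reply_text: str
    reply_audio: bytes
    latency_ms: float


def run_turn(
    audio: bytes,
    transcribe: Callable[[bytes], str],
    respond: Callable[[str], str],
    synthesize: Callable[[str], bytes],
) -> TurnResult:
    """Run speech-to-text, response generation, and text-to-speech once,
    measuring the total wall-clock latency of the turn."""
    start = time.perf_counter()
    transcript = transcribe(audio)
    reply_text = respond(transcript)
    reply_audio = synthesize(reply_text)
    latency_ms = (time.perf_counter() - start) * 1000.0
    return TurnResult(transcript, reply_text, reply_audio, latency_ms)


# Hypothetical stand-ins so the sketch runs without any external service.
def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_respond(text: str) -> str:
    return f"You said: {text}"


def fake_synthesize(text: str) -> bytes:
    return text.encode("utf-8")


result = run_turn(
    b"turn on the lights", fake_transcribe, fake_respond, fake_synthesize
)
print(result.transcript, f"{result.latency_ms:.2f} ms")
```

Passing the three stages in as functions keeps the timing logic independent of any particular vendor SDK, so the same measurement can be reused when a stage is replaced.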

Streamlined Intent Recognition and Dialogue Management

Building engaging conversations requires sophisticated intent recognition and dialogue management. OpenAI has addressed this challenge by introducing several key improvements:

- New Tools for Easier Intent Classification: The new APIs include powerful tools for more accurately classifying user intents, allowing voice assistants to understand complex requests and respond appropriately. This includes advanced machine learning models tailored for intent recognition.

- Improved Context Handling: The updated APIs offer superior context handling, enabling voice assistants to maintain context over longer conversations, leading to more natural and engaging interactions. This significantly reduces the need for repeated clarifications.

- Simplified Dialogue Flow Design: OpenAI has introduced new frameworks and libraries that simplify the design of complex dialogue flows, making it easier to create sophisticated conversational experiences with less coding.

- Pre-built Models for Common Tasks: Developers can leverage pre-built models for common voice assistant tasks, such as scheduling appointments, setting reminders, or playing music. This accelerates the development process and allows developers to focus on unique aspects of their voice assistant.

These advancements allow developers to build more sophisticated conversational interfaces with less effort. For instance, the simplified dialogue flow design lets developers focus on the conversational logic instead of wrestling with complex code. The pre-built models significantly reduce development time, allowing for quicker deployment and iteration.
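As a rough illustration of intent classification combined with context handling, the sketch below uses simple keyword matching rather than a machine learning model. The intent names, the keyword table, and the `DialogueContext` class are all assumptions made for this example and are not part of any OpenAI API.

```python
from dataclasses import dataclass, field

# Hypothetical intents and trigger keywords, chosen only for illustration.
INTENT_KEYWORDS = {
    "set_reminder": ("remind", "reminder"),
    "play_music": ("play", "music", "song"),
    "schedule_appointment": ("schedule", "appointment", "book"),
}


def classify_intent(utterance: str) -> str:
    """Return the intent whose keywords match the most words, or 'unknown'."""
    words = [w.strip(".,!?") for w in utterance.lower().split()]
    best_intent, best_score = "unknown", 0
    for intent, keywords in INTENT_KEYWORDS.items():
        score = sum(1 for w in words if w in keywords)
        if score > best_score:
            best_intent, best_score = intent, score
    return best_intent


@dataclass
class DialogueContext:
    """Remembers the previous intent so follow-up utterances can reuse it."""
    last_intent: str = "unknown"
    history: list = field(default_factory=list)

    def update(self, utterance: str) -> str:
        intent = classify_intent(utterance)
        # A follow-up such as "make it 5pm" carries no keyword of its own,
        # so fall back to the intent established earlier in the conversation.
        if intent == "unknown" and self.last_intent != "unknown":
            intent = self.last_intent
        self.last_intent = intent
        self.history.append((utterance, intent))
        return intent


ctx = DialogueContext()
print(ctx.update("Remind me to call Sam"))   # set_reminder
print(ctx.update("Make it 5pm instead"))     # set_reminder (carried over)
```

A production system would replace `classify_intent` with a trained model, but the context fallback shows why carrying state between turns reduces repeated clarifications.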

Pre-trained Models and Customizable Templates

OpenAI's 2024 event highlighted a significant shift towards more accessible voice assistant development through pre-trained models and intuitive templates.

Ready-to-Use Voice Assistant Models

OpenAI now offers a range of pre-trained models for various use cases, drastically reducing development time and complexity.

- Variety of Pre-trained Models: Models are available for various applications, including smart home control, customer service bots, and information retrieval. This means developers can quickly adapt these models for their specific needs.

- Customizable Parameters: These models offer customizable parameters, enabling developers to fine-tune them to match the specifics of their application, ensuring optimal performance.

- Ease of Deployment: The models are designed for easy deployment, minimizing the complexities involved in integrating them into existing systems.

Using pre-trained models offers numerous benefits. They dramatically reduce development time and associated costs. Developers can leverage the accuracy and sophistication of these models, improving the overall quality of their voice assistants without starting from scratch. Customization options allow developers to tailor the models to their exact specifications, ensuring a seamless integration with their systems.
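One common way to expose customizable parameters on top of ready-made defaults is a preset-plus-overrides pattern. The sketch below assumes hypothetical parameter names (`language`, `speaking_rate`, `confirm_before_action`) and preset names; none of them come from OpenAI's documentation.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class AssistantConfig:
    # All fields are illustrative assumptions, not real API parameters.
    use_case: str
    language: str = "en"
    speaking_rate: float = 1.0
    confirm_before_action: bool = False


# Hypothetical presets standing in for "pre-trained model" defaults.
PRESETS = {
    "smart_home": AssistantConfig(use_case="smart_home"),
    "customer_service": AssistantConfig(
        use_case="customer_service", confirm_before_action=True
    ),
}


def customize(preset_name: str, **overrides) -> AssistantConfig:
    """Copy a preset and apply overrides; unknown field names raise TypeError."""
    return replace(PRESETS[preset_name], **overrides)


config = customize("smart_home", language="de", speaking_rate=1.2)
print(config)
```

Because the dataclass is frozen and `replace` returns a copy, customizing one assistant never mutates the shared preset.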

Intuitive Template-Based Development

OpenAI's new template-based development environment makes creating voice assistants accessible even to those with limited coding experience.

- Drag-and-Drop Interface: A user-friendly drag-and-drop interface allows developers to visually design conversational flows, eliminating the need for extensive coding.

- Visual Workflow Design: Developers can visualize the entire conversational flow, simplifying the process of creating complex interactions.

- Pre-built Conversational Flows: Pre-built conversational flows provide a starting point for common use cases, speeding up the development process.

- Easy Integration with Other Services: Templates are designed for seamless integration with other services and APIs, enabling developers to easily incorporate additional functionality.

These intuitive tools democratize voice assistant development. The visual workflow design and drag-and-drop interface make it easy for even non-programmers to create functional voice assistants. This lowers the barrier to entry for individuals and small businesses, allowing them to participate in the burgeoning voice technology market.
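A visual flow editor typically boils down to a graph of prompts and transitions. The sketch below shows one possible shape for such a flow as plain data, plus a tiny interpreter. The `FLOW` structure, its node names, and the `"*"` wildcard convention are assumptions for illustration and do not describe the export format of any real tool.

```python
# Each node has a prompt ("say") and keyword-triggered transitions ("next").
# The "*" key is a hypothetical wildcard that matches any input.
FLOW = {
    "start": {
        "say": "What would you like to do?",
        "next": {"reminder": "ask_time", "music": "play"},
    },
    "ask_time": {"say": "When should I remind you?", "next": {"*": "confirm"}},
    "confirm": {"say": "Reminder saved.", "next": {}},
    "play": {"say": "Playing music.", "next": {}},
}


def step(flow: dict, node_id: str, user_input: str) -> str:
    """Return the next node id; stay on the current node if nothing matches."""
    transitions = flow[node_id]["next"]
    text = user_input.lower()
    for keyword, target in transitions.items():
        if keyword != "*" and keyword in text:
            return target
    return transitions.get("*", node_id)


def run_flow(flow: dict, inputs: list, start: str = "start") -> list:
    """Feed user inputs through the flow and collect every prompt spoken."""
    node_id = start
    spoken = [flow[node_id]["say"]]
    for user_input in inputs:
        if not flow[node_id]["next"]:
            break  # terminal node: the conversation is over
        node_id = step(flow, node_id, user_input)
        spoken.append(flow[node_id]["say"])
    return spoken


for line in run_flow(FLOW, ["set a reminder", "at 5pm"]):
    print(line)
```

Keeping the flow as data means a non-programmer can edit prompts and transitions without touching the interpreter.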

Improved Developer Tools and Resources

OpenAI's commitment to developer success is evident in the improved tools and resources announced at the 2024 event.

Comprehensive Documentation and Tutorials

OpenAI has significantly enhanced its developer resources to ensure a smoother onboarding experience.

- Detailed API Documentation: Comprehensive API documentation provides clear explanations, examples, and code snippets, allowing developers to quickly integrate the APIs into their projects.

- Step-by-Step Tutorials: A series of step-by-step tutorials guides developers through the process of building various types of voice assistants, making it easy to learn the necessary skills.

- Example Code Snippets: Ready-to-use code snippets provide practical examples, accelerating development and reducing the need for extensive coding from scratch.

- Community Forums for Support: Active community forums provide a platform for developers to connect, share knowledge, and seek assistance from peers and OpenAI experts.

These resources significantly reduce the learning curve for developers. Detailed documentation and tutorials accelerate the development process, allowing developers to build voice assistants quickly and efficiently. The community forums provide invaluable support, ensuring that developers can overcome challenges and efficiently solve problems.

Enhanced Debugging and Monitoring Tools

OpenAI has also introduced advanced debugging and monitoring tools to enhance developer productivity.

- Real-time Performance Monitoring: Real-time monitoring allows developers to track the performance of their voice assistants and identify potential issues promptly.

- Error Detection and Logging: Robust error detection and logging mechanisms help developers identify and resolve errors efficiently, reducing downtime and improving stability.

- Integrated Testing Tools: Integrated testing tools simplify the process of testing voice assistants, ensuring they function correctly before deployment.

- Improved Debugging Capabilities: Enhanced debugging capabilities provide developers with detailed information, making it easier to pinpoint and resolve issues.

These tools streamline the development workflow. Real-time monitoring and error detection let developers catch problems early, before they grow into larger issues. Integrated testing tools shorten the path to deployment and increase application reliability.
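Per-stage monitoring and error logging can be approximated with the Python standard library alone. The sketch below is not an OpenAI tool: the `monitored` decorator, the `metrics` table, and the `detect_intent` stage are hypothetical names used to show the idea of counting calls, errors, and cumulative latency for each pipeline stage.

```python
import logging
import time
from collections import defaultdict
from functools import wraps

logger = logging.getLogger("voice_assistant")

# Per-stage counters; a real deployment would export these to a dashboard.
metrics = defaultdict(lambda: {"calls": 0, "errors": 0, "total_ms": 0.0})


def monitored(stage: str):
    """Decorator that records call count, error count, and latency per stage."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            metrics[stage]["calls"] += 1
            try:
                return func(*args, **kwargs)
            except Exception:
                metrics[stage]["errors"] += 1
                logger.exception("stage %s failed", stage)
                raise
            finally:
                elapsed_ms = (time.perf_counter() - start) * 1000.0
                metrics[stage]["total_ms"] += elapsed_ms
        return wrapper
    return decorator


@monitored("intent")
def detect_intent(text: str) -> str:
    # Hypothetical stage used only to exercise the decorator.
    if not text.strip():
        raise ValueError("empty transcript")
    return "play_music" if "play" in text.lower() else "unknown"


detect_intent("Play some jazz")
try:
    detect_intent("   ")
except ValueError:
    pass  # the failure has already been counted and logged

print(dict(metrics["intent"]))
```

The same counters double as a simple test oracle: an integration test can assert that a stage ran the expected number of times with no errors.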

Conclusion

OpenAI's 2024 announcements mark a significant step toward making voice assistant development more accessible and efficient. The simplified APIs, pre-trained models, intuitive templates, and enhanced developer tools lower the barrier to entry for individuals and businesses alike. Whether you're a seasoned developer or a complete novice, the resources unveiled at the event can help you build sophisticated voice assistants. Start by exploring the new OpenAI tools and resources.

Featured Posts

-

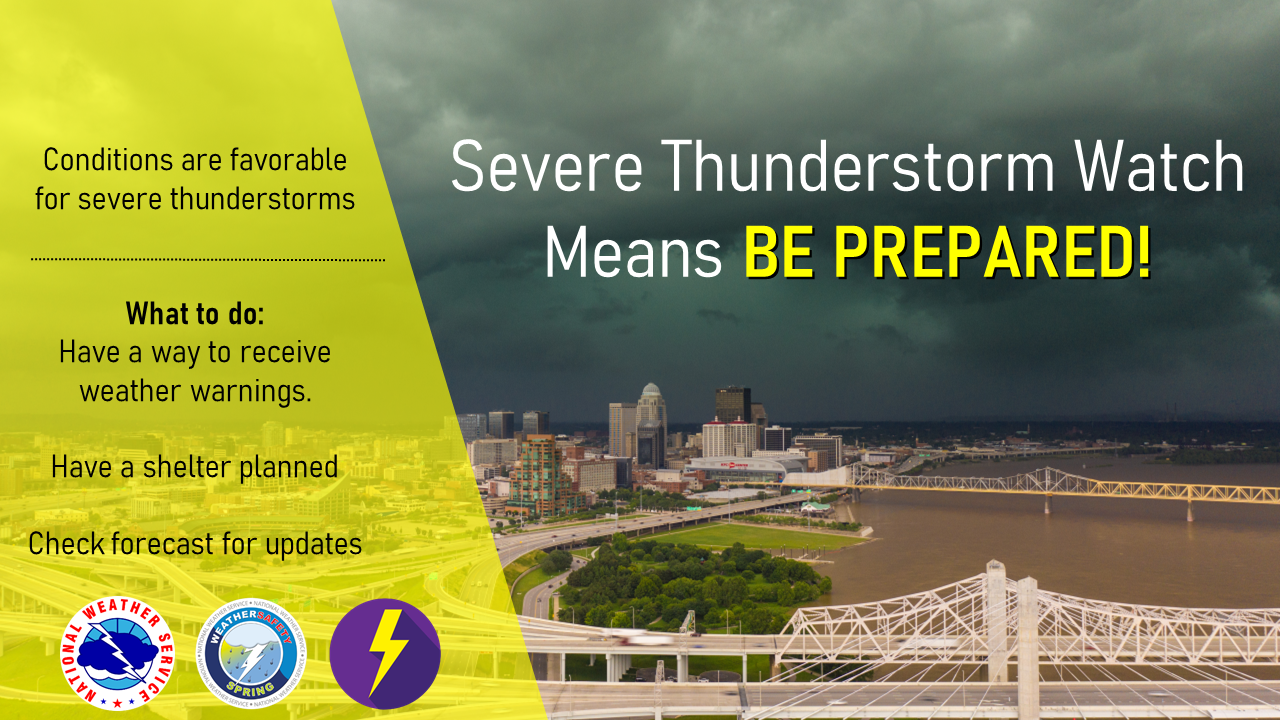

Why Are Kentucky Storm Damage Assessments Delayed

Apr 29, 2025

Why Are Kentucky Storm Damage Assessments Delayed

Apr 29, 2025 -

Concerns Rise Over Willie Nelsons Health And Rigorous Tour Life

Apr 29, 2025

Concerns Rise Over Willie Nelsons Health And Rigorous Tour Life

Apr 29, 2025 -

Kentucky Severe Weather Awareness Week Nws Preparedness

Apr 29, 2025

Kentucky Severe Weather Awareness Week Nws Preparedness

Apr 29, 2025 -

Khaznas Saudi Expansion Post Silver Lake Deal Data Center Strategy

Apr 29, 2025

Khaznas Saudi Expansion Post Silver Lake Deal Data Center Strategy

Apr 29, 2025 -

Open Ai And The Ftc Examining The Ongoing Investigation Into Chat Gpt

Apr 29, 2025

Open Ai And The Ftc Examining The Ongoing Investigation Into Chat Gpt

Apr 29, 2025

Latest Posts

-

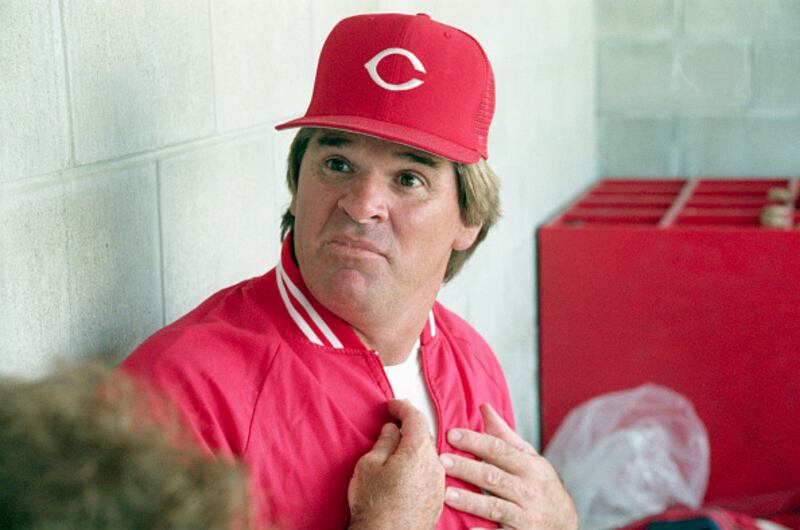

Posthumous Pardon For Pete Rose Understanding Trumps Decision

Apr 29, 2025

Posthumous Pardon For Pete Rose Understanding Trumps Decision

Apr 29, 2025 -

Snow Fox Operational Status Tuesday February 11th

Apr 29, 2025

Snow Fox Operational Status Tuesday February 11th

Apr 29, 2025 -

February 11th Snow Fox Updates Delays And Closings

Apr 29, 2025

February 11th Snow Fox Updates Delays And Closings

Apr 29, 2025 -

Pete Rose Pardon Trumps Announcement And Public Reaction

Apr 29, 2025

Pete Rose Pardon Trumps Announcement And Public Reaction

Apr 29, 2025 -

Snow Fox Tuesday February 11th Closures And Delays Announced

Apr 29, 2025

Snow Fox Tuesday February 11th Closures And Delays Announced

Apr 29, 2025