AI-Powered Therapy: A Threat To Privacy In An Increasingly Surveilled World

Data Collection and Storage Practices in AI-Powered Therapy Platforms

AI-powered therapy platforms, encompassing chatbots and virtual assistants offering therapeutic support, collect vast amounts of sensitive data. Understanding the nature of this data and how it's handled is crucial.

The types of data collected include:

- Demographic information: Age, gender, location, etc.

- Mental health history: Diagnoses, treatment history, medication details.

- Conversation transcripts: Detailed records of every interaction with the AI.

- Emotional responses: Analysis of text and voice tone to gauge emotional state.

Data storage practices vary widely. While some platforms utilize robust encryption and security measures, others may fall short, raising concerns about:

- Data breaches: Large-scale exposure of stored mental health records when a platform's systems are compromised.

- Unauthorized access: Weak authentication or access controls that let hackers or other malicious actors read an individual user's personal information.

- Data retention policies: The length of time data is stored and the procedures for data deletion.

- Lack of transparency: Many platforms lack clear and easily accessible information about their data collection and security protocols, making it difficult for users to understand how their data is being used and protected.
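To make the data retention concern concrete, here is a minimal sketch of what an enforced retention window could look like. It does not describe any real platform: the `TranscriptRecord` type, the `purge_expired` function, and the 30-day window in the usage note are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class TranscriptRecord:
    """Hypothetical stored conversation record (illustrative only)."""
    user_id: str
    created_at: datetime
    text: str


def purge_expired(records, retention_days, now):
    """Return only the records that are still inside the retention window.

    Anything created more than `retention_days` before `now` is dropped.
    """
    if retention_days < 0:
        raise ValueError("retention_days must not be negative")
    cutoff = now - timedelta(days=retention_days)
    return [r for r in records if r.created_at >= cutoff]
```

A platform following this pattern would run something like `purge_expired(records, retention_days=30, now=datetime.now(timezone.utc))` on a schedule and publish the window it uses. A real deployment would also need to delete backups and derived data, which this sketch does not cover.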

Algorithmic Bias and Discrimination in AI-Powered Therapy

A major concern with AI-powered therapy is the potential for algorithmic bias. AI systems are trained on datasets, and if these datasets reflect existing societal biases, the resulting models tend to reproduce them unless the bias is measured and corrected. This can lead to unfair or discriminatory outcomes in therapy, in several ways:

- Limited representation in training data: If the training data predominantly features information from a specific demographic, the AI may not accurately assess or respond to the needs of individuals from underrepresented groups.

- Reinforcement of harmful stereotypes: Algorithms might inadvertently reinforce negative stereotypes about certain groups based on the biases present in the data they are trained on.

- Lack of human oversight: Without proper human review and intervention, algorithms can make decisions that are discriminatory or harmful to specific users. The lack of human judgment in the therapeutic process is a significant risk.
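The first point, limited representation in training data, is one of the few that can be checked mechanically. The sketch below is a rough first-pass check, not a full fairness audit: the function name `underrepresented_groups`, the group labels, and the 5% threshold in the usage note are assumptions made for illustration.

```python
from collections import Counter


def underrepresented_groups(labels, min_share):
    """Return {group: share} for groups whose share of the data is below min_share.

    `labels` holds one demographic label per training example.
    """
    if not 0 <= min_share <= 1:
        raise ValueError("min_share must be between 0 and 1")
    if not labels:
        return {}
    counts = Counter(labels)
    total = len(labels)
    return {
        group: count / total
        for group, count in counts.items()
        if count / total < min_share
    }
```

For example, calling `underrepresented_groups(labels, min_share=0.05)` on a dataset's demographic labels lists every group that makes up less than 5% of the examples. A low share does not prove the model treats that group unfairly, but it flags where human review and additional data are most likely needed.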

The Erosion of Therapist-Patient Confidentiality

The traditional therapist-patient relationship is built on confidentiality. The introduction of AI raises crucial questions about the preservation of this sacred bond. AI systems involved in therapy may compromise confidentiality in several ways:

- Data breaches: If a platform suffers a security breach, sensitive personal information can be exposed to unauthorized individuals.

- Potential for misuse of data by third parties: Data shared with insurance companies, researchers, or other third parties could be misused or inappropriately disclosed.

- The legal and ethical implications of compromising confidentiality: Existing legal frameworks may not fully address the unique challenges presented by AI-powered therapy, leaving patients vulnerable.

Regulatory Frameworks and Data Protection

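Current data protection laws like GDPR (General Data Protection Regulation) and HIPAA (Health Insurance Portability and Accountability Act) aim to protect personal information, but their applicability to the nuances of AI-powered therapy is often unclear. HIPAA, for example, binds healthcare providers, health plans, and their business associates, so a consumer chatbot offered outside that relationship may not be covered by it at all.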

- Gaps in existing regulations: Regulations often lag behind technological advancements, leaving significant gaps in protecting data collected and used by AI-powered therapy platforms.

- The need for stronger data protection laws: Specific legislation addressing the unique challenges of AI in mental health is urgently needed.

- International cooperation on data privacy standards: Harmonizing data protection regulations across borders is essential, as AI systems often operate globally.

Mitigating Privacy Risks in AI-Powered Therapy

Addressing the privacy concerns associated with AI-powered therapy requires a multi-pronged approach. Several strategies can be implemented to enhance user privacy:

- Implementing robust data encryption and security protocols: Encrypting data both in transit and at rest, combined with strict access controls, limits what an attacker can read even if a system is breached.

- Promoting transparency in data collection and usage practices: Clear, concise, and accessible information about data handling practices is vital for building user trust.

- Developing ethical guidelines for the development and deployment of AI in therapy: Ethical guidelines should address data privacy, algorithmic bias, and the preservation of therapist-patient confidentiality.

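- Employing privacy-enhancing technologies: Techniques like differential privacy (adding calibrated random noise to aggregate statistics so that no single person's data can be inferred) and federated learning (training models on users' own devices so that raw data is never centralized) can help preserve privacy while still allowing for data analysis.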
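As a rough illustration of the last item, the sketch below applies the Laplace mechanism, a standard differential privacy technique, to a simple counting query. It is a teaching sketch under stated assumptions, not production code: the function names and the `epsilon` values are hypothetical, and Python's `random` module is not a cryptographically secure noise source.

```python
import random


def laplace_noise(scale, rng):
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential draws with mean `scale`
    follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(flags, epsilon, rng):
    """Return a noisy count of the True values in `flags`.

    A counting query has sensitivity 1 (adding or removing one user changes
    the count by at most 1), so the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for flag in flags if flag)
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)
```

A platform could use this to publish, say, how many users reported a given symptom without revealing whether any one user did: `private_count(reported, epsilon=0.5, rng=random.Random())`. Smaller values of `epsilon` add more noise and give stronger privacy at the cost of accuracy.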

Conclusion: Protecting Privacy in the Age of AI-Powered Therapy

AI-powered therapy offers significant potential benefits, but the privacy risks it poses cannot be ignored. The collection and use of highly sensitive personal data, the potential for algorithmic bias, and the erosion of traditional therapist-patient confidentiality necessitate stronger regulations and ethical guidelines. We must demand transparency from AI-powered therapy providers and advocate for legislation that prioritizes user privacy in this rapidly evolving field. Your mental health deserves protection. Demand accountability and transparency – your privacy depends on it.

Featured Posts

-

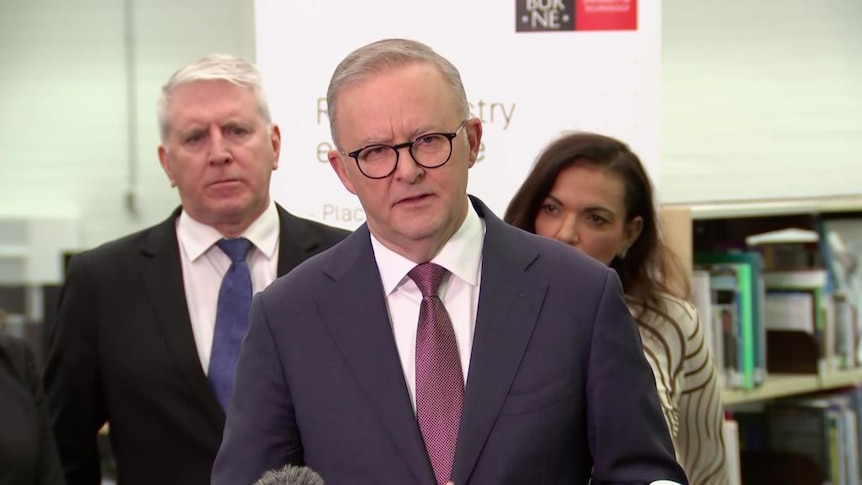

The Albanese Dutton Debate A Deep Dive Into Their Campaign Strategies

May 15, 2025

The Albanese Dutton Debate A Deep Dive Into Their Campaign Strategies

May 15, 2025 -

Full Andor Season 2 Trailer Analysis Death Star Yavin 4 And Beyond

May 15, 2025

Full Andor Season 2 Trailer Analysis Death Star Yavin 4 And Beyond

May 15, 2025 -

Joe And Jill Biden On The View Interview Time And How To Watch

May 15, 2025

Joe And Jill Biden On The View Interview Time And How To Watch

May 15, 2025 -

The Growing Trend Of Apple Watches Among Nhl Referees

May 15, 2025

The Growing Trend Of Apple Watches Among Nhl Referees

May 15, 2025 -

Sergey Bobrovskiy 5 Y Shat Aut V Pley Off N Kh L

May 15, 2025

Sergey Bobrovskiy 5 Y Shat Aut V Pley Off N Kh L

May 15, 2025