AI And The Illusion Of Learning: Strategies For Ethical AI Development

Understanding the Illusion of Learning in AI

The limitations of current AI models:

AI, particularly machine learning models, excels at pattern recognition. However, this pattern recognition shouldn't be mistaken for genuine comprehension. Current deep learning models, while powerful, have significant limitations in understanding context, nuance, and the real-world implications of their actions. This "illusion of learning" is a critical ethical concern.

- AI systems lack common sense reasoning: AI systems struggle with tasks that humans find trivial, such as understanding implicit meanings or applying knowledge across different contexts, and they often fail to grasp the broader implications of their actions.

- AI systems can be easily fooled by adversarial examples: Minor, almost imperceptible, changes to input data can drastically alter an AI's output, highlighting the fragility of these systems and their vulnerability to manipulation.

- Current AI struggles with generalizability and transfer learning: An AI trained on one dataset may perform poorly on a slightly different one, limiting its applicability and raising concerns about its reliability in real-world scenarios.

- Overreliance on statistical correlations can lead to inaccurate or biased outputs: AI systems identify patterns in data, but these patterns may not reflect true causality. This can lead to inaccurate predictions and perpetuate existing biases.
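The fragility to small input changes described above can be illustrated with a toy linear classifier. The following is a minimal sketch, not a real attack on a deployed model: the weights, the input, and the helper names (`predict`, `perturb`) are all made up for illustration. It nudges every feature by at most `epsilon` in the direction that lowers the score, which is enough to flip the predicted class.

```python
def predict(weights, bias, x):
    """Return 1 if the linear score is positive, else 0."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score > 0 else 0


def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def perturb(weights, x, epsilon):
    """Move each feature by at most epsilon against the sign of its weight.

    For a linear model this lowers the score by epsilon * sum(|w|),
    the largest drop achievable under a per-feature budget of epsilon.
    """
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical model and input, chosen so the original score is small but positive.
weights = [0.5, -0.3, 0.8, -0.6]
bias = 0.0
x = [0.2, 0.1, 0.1, 0.2]

x_adv = perturb(weights, x, epsilon=0.05)

original_class = predict(weights, bias, x)
adversarial_class = predict(weights, bias, x_adv)
largest_change = max(abs(a - b) for a, b in zip(x, x_adv))
```

Here no feature moves by more than 0.05, yet the predicted class changes. Deep networks are not linear, so this only conveys the intuition: many small, coordinated changes can add up to a large change in the output.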

The impact of biased data:

AI systems are trained on data, and if that data reflects existing societal biases, the AI will tend to reproduce those biases and can even amplify them unless they are actively corrected. This leads to unfair or discriminatory outcomes, undermining the fairness and equity of AI applications.

- Examples of AI bias in facial recognition, loan applications, and criminal justice: Biased algorithms have led to misidentification of individuals in facial recognition systems, discriminatory loan approvals, and biased risk assessments in the criminal justice system.

- The importance of diverse and representative datasets: Creating AI systems that are fair and equitable requires training them on data that accurately reflects the diversity of the population. This involves addressing underrepresentation and biases within datasets.

- Techniques for identifying and mitigating bias in training data: Auditing a dataset for underrepresented groups and skewed outcomes helps to identify bias, while techniques such as data augmentation, re-weighting, and adversarial debiasing can help to mitigate it. However, these methods require careful consideration and often involve trade-offs.
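As an illustration of re-weighting, the sketch below assigns each training example a weight so that, after weighting, group membership and outcome label are statistically independent. This is one common scheme (often called reweighing), not the only one; the group names, labels, and function names are hypothetical, and a real pipeline would pass the resulting weights to a learner that supports per-sample weights.

```python
from collections import Counter


def reweigh(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    pair_counts = Counter(zip(groups, labels))
    return [
        group_counts[g] * label_counts[y] / (n * pair_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(
        w for g, y, w in zip(groups, labels, weights) if g == group and y == 1
    )
    return positive / total


# Hypothetical toy data: group "a" has 3 of 4 positive labels, group "b" has 1 of 4.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweigh(groups, labels)
rate_a = weighted_positive_rate(groups, labels, weights, "a")
rate_b = weighted_positive_rate(groups, labels, weights, "b")
```

After weighting, both groups have the same weighted positive rate, so a learner that honors the weights no longer sees group membership as predictive of the label in this toy data. The trade-off is that rare group and label combinations receive large weights, which can increase variance.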

Strategies for Ethical AI Development

Prioritizing Transparency and Explainability:

Explainable AI (XAI) is crucial for building trust and accountability. Understanding how an AI system arrives at its decisions is essential for identifying and correcting errors, mitigating bias, and ensuring responsible use. Transparency in algorithms and data is equally vital.

- Methods for building explainable AI models: Techniques like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) help to interpret the predictions of complex AI models.

- The benefits of transparent AI systems for accountability and trust: Transparency allows for scrutiny and builds public trust, ensuring that AI systems are used responsibly and ethically.

- Challenges in achieving explainability in complex AI models: Achieving full explainability in highly complex models remains a significant challenge. The inherent complexity of some AI architectures can make it difficult to trace the reasoning behind their outputs.
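To make the idea behind Shapley-value explanations concrete, the sketch below computes exact Shapley values for a tiny three-feature model by averaging each feature's marginal contribution over every feature ordering. It does not use the SHAP library or its API; `toy_model`, the instance, and the all-zero baseline are assumptions chosen for illustration. The brute-force approach also hints at the explainability challenge noted above: the number of orderings grows factorially with the number of features, so practical tools rely on approximations or model-specific algorithms.

```python
from itertools import permutations


def shapley_values(model, instance, baseline):
    """Exact Shapley values by enumerating all feature orderings.

    Features start at their baseline values and are switched to the
    instance's values one at a time; each switch's change in model
    output is credited to the feature that was switched.
    """
    n = len(instance)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous_output = model(current)
        for i in order:
            current[i] = instance[i]
            output = model(current)
            totals[i] += output - previous_output
            previous_output = output
    return [total / len(orderings) for total in totals]


def toy_model(x):
    """Hypothetical model with an interaction between features 0 and 2."""
    return 2.0 * x[0] + 1.0 * x[1] + 3.0 * x[0] * x[2]


instance = [1.0, 2.0, 1.0]
baseline = [0.0, 0.0, 0.0]

attributions = shapley_values(toy_model, instance, baseline)
```

The attributions sum to the difference between the model's output on the instance and on the baseline, and the interaction term is split evenly between the two features involved in it.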

Ensuring Accountability and Responsibility:

Mechanisms are needed to hold developers and deployers of AI systems accountable for their actions and the impact of their creations. This requires a multi-faceted approach.

- Establishing ethical guidelines and regulations for AI development: Clear ethical guidelines and regulations are needed to guide AI development and deployment, ensuring responsible innovation.

- Implementing robust auditing and monitoring processes: Regular audits and monitoring of AI systems are necessary to identify and address potential biases, errors, and ethical concerns.

- Developing mechanisms for redress and compensation in case of AI-related harm: Clear processes for redress and compensation should be in place to address any harm caused by AI systems.
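One simple check that a recurring audit might include is comparing how often each group receives a favorable decision. The sketch below computes per-group selection rates and the gap between the highest and lowest rate. The data, the function names, and the `MAX_ALLOWED_GAP` threshold are hypothetical; an appropriate metric and threshold depend on the application and on the applicable legal standards.

```python
from collections import defaultdict


def selection_rates(groups, decisions):
    """Share of favorable decisions (1) per group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, decision in zip(groups, decisions):
        totals[group] += 1
        favorable[group] += decision
    return {group: favorable[group] / totals[group] for group in totals}


def selection_rate_gap(rates):
    """Difference between the highest and lowest group selection rate."""
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: 1 = favorable decision, 0 = unfavorable.
groups = ["a"] * 5 + ["b"] * 5
decisions = [1, 1, 1, 1, 0, 1, 1, 0, 0, 0]

MAX_ALLOWED_GAP = 0.2  # assumed policy threshold, not a standard value

rates = selection_rates(groups, decisions)
gap = selection_rate_gap(rates)
needs_review = gap > MAX_ALLOWED_GAP
```

A gap above the threshold does not prove discrimination on its own, but it flags the system for closer human investigation, which is the purpose of routine monitoring.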

Human-in-the-loop systems and human oversight:

Human oversight is critical, particularly in high-stakes applications. AI should augment, not replace, human judgment.

- Examples of successful human-in-the-loop AI systems: Effective human-in-the-loop systems route AI outputs to a human who reviews and validates them before a decision takes effect.

- The role of human expertise in validating AI outputs: Human expertise is essential for identifying potential biases or errors in AI outputs and ensuring that the AI's decisions align with ethical and legal standards.

- The importance of maintaining human control and agency: It is crucial to ensure that humans retain control over AI systems and that AI is used to empower, not supplant, human decision-making.
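One way to keep a human in the loop is to let the model decide only when it is highly confident and to route everything else to a reviewer. The sketch below is a minimal illustration under that assumption; the `Decision` type, the `route` function, the 0.9 threshold, and the stand-in reviewer are all hypothetical. In high-stakes settings it may be more appropriate to send every case to a human regardless of confidence.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    case_id: str
    label: str
    confidence: float
    decided_by: str


def route(case_id, label, confidence, threshold=0.9, human_review=None):
    """Accept the model's label only above the confidence threshold.

    Low-confidence cases go to human_review if a reviewer is available;
    otherwise they are parked as pending rather than decided by the model.
    """
    if confidence >= threshold:
        return Decision(case_id, label, confidence, "model")
    if human_review is None:
        return Decision(case_id, "pending_review", confidence, "unassigned")
    return Decision(case_id, human_review(case_id, label), confidence, "human")


def reviewer(case_id, suggested_label):
    """Stand-in for a real review step; here the human asks for more evidence."""
    return "needs_more_documents"


auto = route("case-1", "approve", 0.97)
parked = route("case-2", "approve", 0.60)
reviewed = route("case-3", "approve", 0.60, human_review=reviewer)
```

The model's suggestion is passed to the reviewer as input, but the final label for low-confidence cases is always the human's, which keeps control with the person accountable for the outcome.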

Conclusion

The illusion of learning in AI highlights the critical need for ethical considerations in its development. The risks of bias, lack of transparency, and potential for harm are real and require proactive measures. The strategies discussed here (prioritizing transparency and explainability, ensuring accountability and responsibility, and incorporating human-in-the-loop systems) are crucial steps towards responsible AI development. By fostering ethical AI development, we can harness the power of AI for good while mitigating the associated risks. Let's work together to build a future where AI empowers humanity responsibly.

Featured Posts

-

Russell Brand Not Guilty Plea In Rape And Sexual Assault Case

May 31, 2025

Russell Brand Not Guilty Plea In Rape And Sexual Assault Case

May 31, 2025 -

I Woke Up To A Banksy Now What

May 31, 2025

I Woke Up To A Banksy Now What

May 31, 2025 -

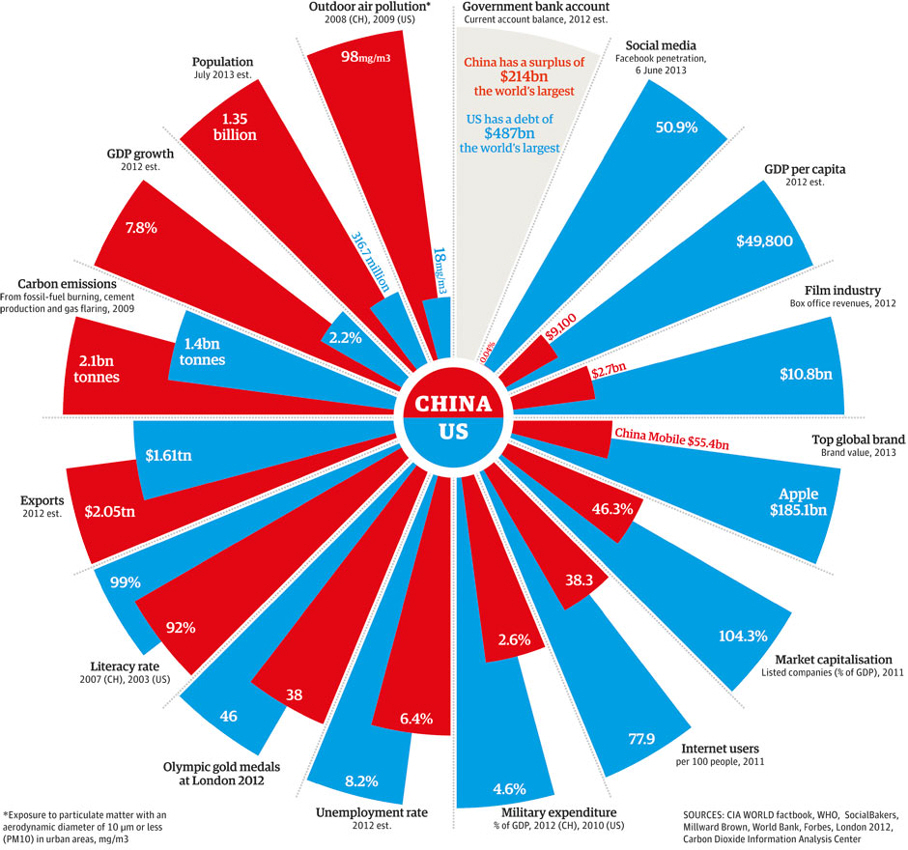

America Vs China A Military Power Comparison And Analysis

May 31, 2025

America Vs China A Military Power Comparison And Analysis

May 31, 2025 -

Zverev Vs Griekspoor Bmw Open 2025 Quarter Final Showdown

May 31, 2025

Zverev Vs Griekspoor Bmw Open 2025 Quarter Final Showdown

May 31, 2025 -

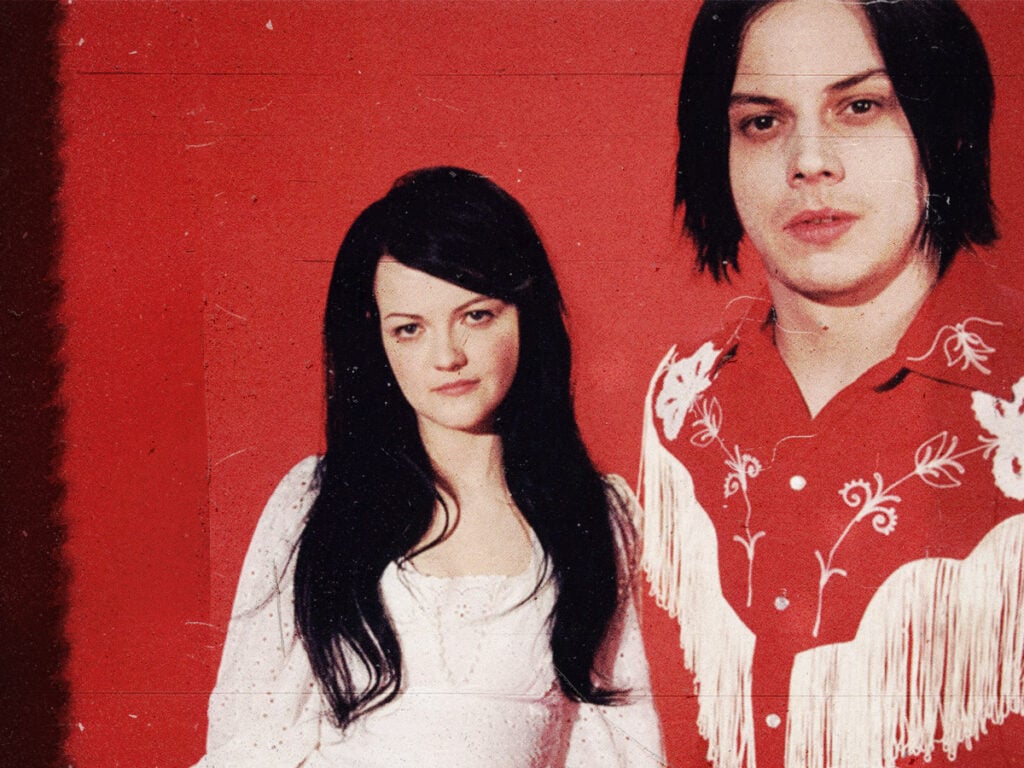

White Stripes Jack White Discusses Baseball And The Hall Of Fame On Tigers Broadcast

May 31, 2025

White Stripes Jack White Discusses Baseball And The Hall Of Fame On Tigers Broadcast

May 31, 2025